Machine Generated Data

Tags

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 28-44 |
| Gender | Male, 54.9% |
| Happy | 45% |
| Calm | 52.1% |
| Angry | 46.9% |
| Sad | 45.1% |
| Surprised | 45.5% |
| Confused | 45% |
| Disgusted | 45% |
| Fear | 45.3% |

Feature analysis

Amazon

| Feature | Confidence |
|---|---|
| Poster | 100% |

Categories

Imagga

| Category | Confidence |
|---|---|
| streetview architecture | 65.6% |
| interior objects | 33.6% |

Captions

Microsoft

Created by unknown on 2019-11-09

| Caption | Confidence |
|---|---|
| a vintage photo of a group of people posing for the camera | 77.5% |
| a vintage photo of a group of people posing for a picture | 77.4% |
| a vintage photo of a person | 77.3% |

Clarifai

Created by general-english-image-caption-blip on 2025-05-18

| Caption | Confidence |
|---|---|
| a photograph of a poster of a statue of a woman holding a sword | -100% |

OpenAI GPT

Created by gpt-4o-2024-05-13 on 2025-02-05

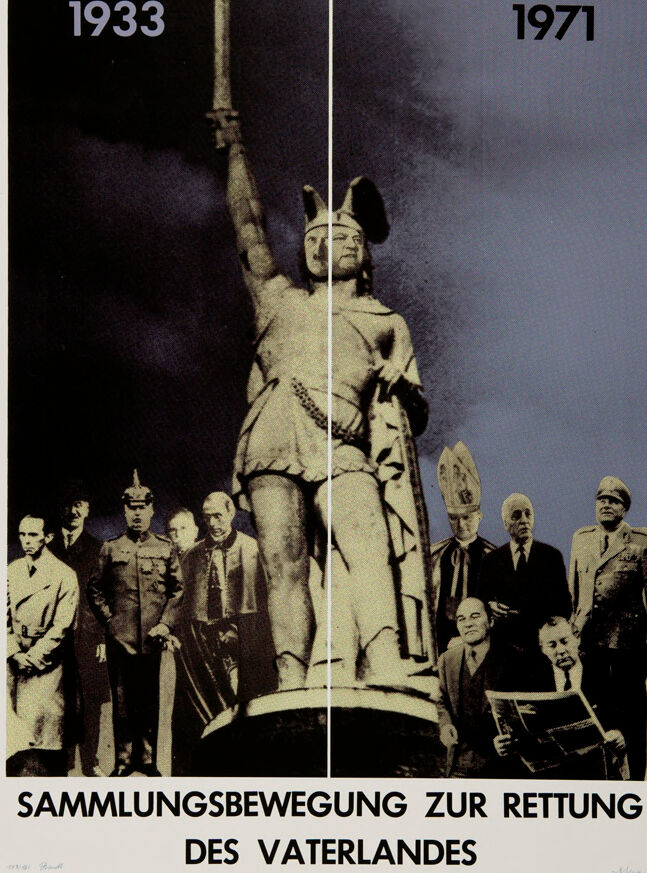

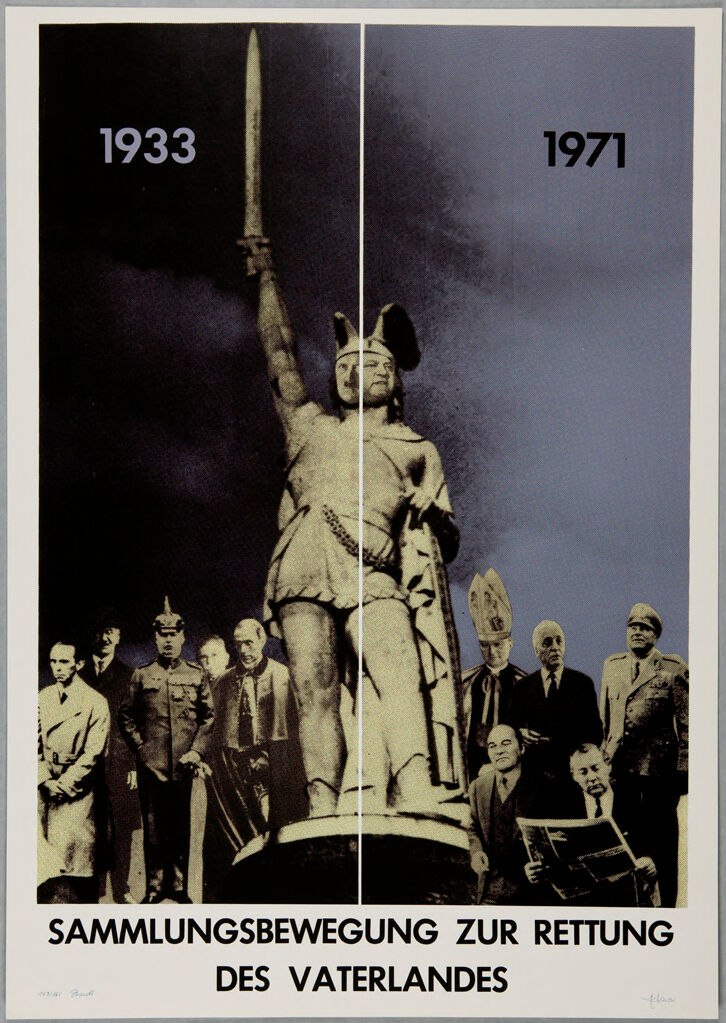

The image is a comparative illustration showing a statue of a warrior standing prominently with one arm outstretched holding a sword upward. The left side of the image is dated 1933 and the right side is dated 1971. Below the statue, there are groups of people standing on each respective side, aligning with the years presented. At the bottom of the image, there is a German caption that reads, "SAMMLUNGSBEWEGUNG ZUR RETTUNG DES VATERLANDES," which translates to "collection movement for the salvation of the fatherland."

Created by gpt-4 on 2025-02-05

The image is divided into two parts, with the left side labeled "1933" and the right side labeled "1971." The two sides appear to be a continuation of one another, showing a statue holding a sword aloft. Below the dividing line is a phrase in German that translates to "Collection movement to save the fatherland." The style of the image suggests a commentary or historical juxtaposition. The bottom section includes a signature or attribution, and various elements, such as the statue and background figures, tie into the given dates, indicating specific historical or political contexts.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2025-02-05

This image appears to be a political poster or artwork split between two time periods - 1933 and 1971. The central focus is a statue or monument of what appears to be a warrior or knight figure holding a sword upward, wearing medieval-style armor and a horned or winged helmet. The statue is surrounded by groups of people in both time periods, dressed in the attire typical of their respective eras. The German text at the bottom reads "SAMMLUNGSBEWEGUNG ZUR RETTUNG DES VATERLANDES" which translates to "Collection Movement for the Salvation of the Fatherland." The image appears to be making some kind of historical or political commentary about Germany across these two time periods, using the consistent presence of the monument as a connecting element.

Created by claude-3-opus-20240229 on 2025-02-05

The image is a black and white poster or photograph showing a group of men, likely political or military leaders, gathered around a statue on a pedestal. The years 1933 and 1971 are printed at the top, suggesting a comparison or progression between those two time periods.

The statue depicts an armored knight or warrior holding a spear or lance. The men are wearing suits and military uniforms from the early-to-mid 20th century period. Some are standing, while others are seated.

The text at the bottom is in German and reads "SAMMLUNGSBEWEGUNG ZUR RETTUNG DES VATERLANDES", which translates to something like "Collection Movement to Save the Fatherland". This suggests the image relates to some patriotic or nationalist movement in Germany between 1933-1971.

The high contrast, grainy style gives it the look of an old monochrome lithograph print or newspaper photo. Overall, it appears to be documenting an important gathering or ceremony regarding German political history and identity in the 20th century, with the statue serving as a symbolic focal point.

Created by claude-3-haiku-20240307 on 2025-02-05

The image appears to be a historical poster or propaganda material from 1933 and 1971, depicting a significant event or movement related to the "Sammungsbewegung zur rettung des vaterlands" or "Collecting movement for the salvation of the fatherland". The image shows a large statue or sculpture of a warrior-like figure holding a weapon in the center, surrounded by a group of men, some in military uniforms, posing for the camera. The text indicates that this movement spanned from 1933 to 1971, suggesting a long-term political or ideological campaign. However, as the image contains a human face, I will refrain from identifying or naming any individuals shown.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-03-15

The image is a poster from 1971, featuring a striking black-and-white photograph of a statue of a woman in ancient Greek attire, holding a sword aloft. The statue is positioned centrally, with a group of men in suits and military uniforms standing behind it.

Key Elements:

- Statue: A woman in ancient Greek attire, holding a sword aloft.

- Men: A group of men in suits and military uniforms standing behind the statue.

- Background: A dark background with a light-colored border around the edges.

- Text: The years "1933" and "1971" are displayed in the top-left and top-right corners, respectively. The text "SAMMLUNGSBEWEGUNG ZUR RETTUNG DES VATERLANDES" is written in German at the bottom of the poster.

Overall Impression:

The image appears to be a historical or political poster, possibly related to the rise of Nazism in Germany. The contrast between the ancient Greek statue and the modern men in suits and military uniforms may symbolize the conflict between tradition and modernity, or the clash between different ideologies. The use of a dark background and bold text emphasizes the importance of the message being conveyed.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-03-15

The image is a poster that appears to be in German, featuring a statue of a man with his right arm raised and holding a sword. The statue is surrounded by men in various uniforms and attire, including military, religious, and civilian clothing.

Key Elements:

- Statue: A prominent figure with his right arm raised and holding a sword, likely symbolizing strength, courage, or victory.

- Surrounding Men: A diverse group of individuals in different uniforms and attire, suggesting a gathering or assembly of people from various backgrounds and professions.

- Text: The poster features text in German, which translates to "Sammlungsbewegung zur Rettung des Vaterlandes" (Collection Movement for the Salvation of the Fatherland). This suggests that the poster is promoting a nationalistic or patriotic message.

- Dates: The poster includes two dates: 1933 and 1971. These dates may refer to significant events or anniversaries related to the movement or organization being promoted.

Overall Impression:

The image conveys a sense of unity, patriotism, and national pride. The statue and surrounding men suggest a strong sense of community and shared values, while the text and dates provide context for the poster's message. The overall tone is one of seriousness and solemnity, emphasizing the importance of the movement or organization being promoted.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-02-05

The image is a poster that shows a statue of a man holding a sword and a shield. The statue is divided into two parts. The left side of the statue is in black and white, and the right side is in color. There are people standing behind the statue, and some of them are wearing hats and uniforms. The poster has the words "SAMMLUNGSBEWERUNG ZUR RETUNG DES VATERLANDES" written at the bottom.

Created by amazon.nova-lite-v1:0 on 2025-02-05

This image is a split-screen photograph with a black-and-white image on the left and a blue-and-white image on the right. The left side shows a statue of a woman holding a sword, with several people standing behind her. The right side shows a group of people standing in front of a statue of a man holding a sword. The image has a white border, and the years "1933" and "1971" are written in the top left and top right corners, respectively. The text "Sammlungsbewegung zur Rettung des Vaterlandes" is written in the bottom center of the image.

Google Gemini

Created by gemini-2.0-flash on 2025-06-03

The poster is divided into two distinct sections, each bearing a year: 1933 on the left and 1971 on the right. The imagery in both sections is monochromatic, with the left side in darker tones and the right side in lighter shades.

At the forefront of both sections is a statue of a warrior figure. The statue depicts a man wearing a winged helmet, holding a sword aloft in his right hand. He is dressed in what appears to be ancient attire.

Below the statue in the 1933 section, there are several men standing. One is in a military uniform, adorned with a pointed helmet, while others are in civilian attire. In the 1971 section, the men standing below the statue are also dressed in civilian clothes, with one notable figure wearing a bishop's miter. One man is reading a newspaper.

The text at the bottom of the poster reads "Sammlungsbewegung zur Rettung des Vaterlandes" in bold, sans-serif font.

Created by gemini-2.0-flash-lite on 2025-06-03

Here's a description of the image:

This is a political poster. It's divided vertically into two sections, a common technique to illustrate a contrast or comparison. The left side of the image features the year "1933," and the right side has "1971."

Central Figures:

- Statue: A large statue of a warrior, presumably intended to represent a historical or national hero. The warrior is in a powerful pose, holding a sword high above his head.

- Group of Figures: Beneath the statue is a group of men, shown on both sides of the poster.

Overall Impression:

The poster's design strongly suggests a call to action, potentially aimed at reminding people about their role in safeguarding the country. The use of both visual and text elements creates a powerful, urgent message.