Machine Generated Data

Tags

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 23-35 |
| Gender | Female, 93.3% |
| Happy | 0.9% |
| Fear | 56.6% |
| Sad | 7.4% |
| Calm | 23.1% |
| Confused | 1.4% |
| Disgusted | 0.2% |
| Angry | 4.3% |
| Surprised | 6.2% |

Feature analysis

Amazon

| Label | Confidence |
| --- | --- |
| Painting | 93.3% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 98.5% |
| streetview architecture | 1.4% |

Captions

OpenAI GPT

Created by gpt-4 on 2025-01-30

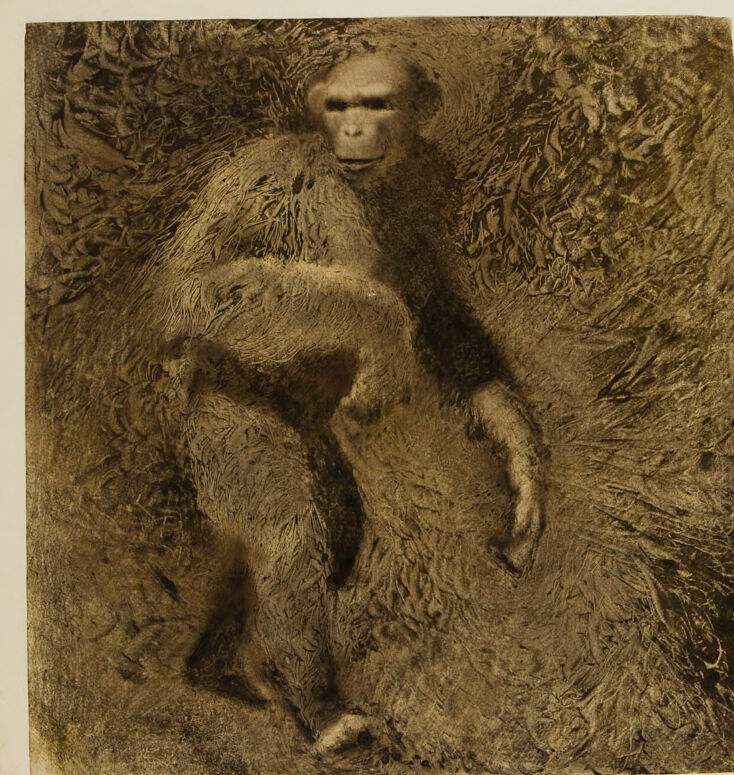

The image depicts a piece of artwork featuring an animal lying down on its back in a relaxed posture. The style is reminiscent of an etching or print, with detailed lines forming the texture and contours of the animal's fur. The background has a detailed, almost grassy texture that provides contrast with the softer lines of the animal. The art piece contains some text at the bottom, which indicates a signature and possibly a title or description of the piece, suggesting it might be a print of an original artwork. The palette of the image is sepia-toned, adding an element of antiquity or a vintage look to the image.

Created by gpt-4o-2024-05-13 on 2025-01-30

The image depicts a textured, artistic representation of a figure that resembles a humanoid ape-like creature. The figure is covered in a fur-like texture and appears to be standing or walking upright. The background features intricate, swirling patterns that add depth and complexity to the artwork. The style is somewhat abstract, with an emphasis on texture and form rather than detailed realism.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2025-01-30

This appears to be an old sepia-toned photograph or artistic print showing a chimpanzee in what seems to be a textured or carved relief setting. The chimp is shown in a standing posture, and the image has a distinctive artistic quality with rich textures and shadows. The background appears to have a rough, carved or etched quality, giving the whole composition a sculptural feel. The photograph has a brown/sepia coloring typical of early photographic processes, and shows good detail in capturing the ape's fur and features.

Created by claude-3-opus-20240229 on 2025-01-30

The sepia-toned image depicts a primate, likely a chimpanzee or monkey, sitting in a naturalistic setting of foliage and branches. The animal appears to be an adult based on its size and features. Its fur is shaggy and covers most of its body, with the face, hands and feet visible. The posture suggests the primate is at rest, possibly contemplating its surroundings. The sketch-like quality and monochromatic tones give the artwork an antique feel, perhaps from the late 19th or early 20th century, when such naturalist illustrations were common. The detailed rendering captures the essence of the primate's form and character.

Created by claude-3-haiku-20240307 on 2025-01-30

The image appears to depict a sculpture or relief of a hairy, ape-like creature emerging from a textured, rocky background. The figure has a simian-like face and body, covered in thick fur or hair. The overall impression is one of a primitive, prehistoric or mythological creature embedded within the natural landscape.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-03-15

The image is a sepia-toned photograph of a gorilla, likely taken in a zoo or wildlife sanctuary. The gorilla is standing on its hind legs, with its front legs bent at the elbows and its hands resting on its thighs. It has a large, furry body with a prominent head and facial features, including a flat nose and a prominent jawline. The gorilla's eyes are cast downward, giving it a contemplative expression. The background of the image is out of focus, but it appears to be a naturalistic setting, possibly a forest or jungle environment. The overall atmosphere of the image is one of serenity and calmness, with the gorilla appearing relaxed and at ease in its surroundings. The image is signed by the photographer, who is identified as "Roth" or "Rothschild." The signature is located in the bottom-right corner of the image, and it is written in a flowing script. The image also includes some handwritten notes or captions, which are difficult to read due to the low quality of the image. However, they appear to be descriptive comments about the gorilla and its behavior. Overall, the image is a beautiful and intimate portrait of a gorilla, capturing its natural beauty and personality in a unique and captivating way.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-03-15

The image features a sepia-toned artwork depicting a monkey, likely a chimpanzee or gorilla, standing upright on two legs. The monkey's face is blurred, and its body is covered in thick fur. It appears to be walking or standing in a forested area, surrounded by trees and foliage. The artwork is rendered in a textured style, with visible brushstrokes and layering of paint or ink. The overall effect is one of depth and dimensionality, with the monkey appearing to emerge from the surrounding environment. At the bottom of the image, there are several lines of illegible text, which may include the artist's signature, title of the work, or other relevant information. The background of the image is a light beige color, which provides a neutral contrast to the darker tones of the artwork. Overall, the image presents a striking and evocative representation of a primate in its natural habitat.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-01-30

The image is a monochromatic picture of a chimpanzee. The chimpanzee is standing on the ground and looking towards the right. The chimpanzee seems to be carrying a baby chimpanzee on its chest. The ground is covered with dry leaves. The picture has a watermark in the bottom left corner, and the artist's signature is in the bottom right corner. The picture is bordered by a white border.

Created by amazon.nova-lite-v1:0 on 2025-01-30

The image shows a monochromatic print of a monkey. The monkey appears to be sitting on the ground, with its body covered in a layer of fur. The monkey's face is turned towards the left side, and it seems to be looking at something. The monkey's body is covered in a layer of fur, and its face is covered in a layer of fur as well. The image has a slightly blurry effect, which adds to the overall mood of the print.