Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 23-38 |
| Gender | Female, 53.6% |
| Calm | 51.4% |
| Angry | 45.4% |
| Disgusted | 45.1% |
| Surprised | 47.1% |
| Sad | 45.7% |
| Confused | 45.3% |
| Happy | 45.1% |

Feature analysis

Amazon

| Label | Confidence |
| --- | --- |
| Person | 92.4% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| events parties | 36.8% |
| food drinks | 27.3% |
| interior objects | 13.1% |
| people portraits | 11.9% |
| nature landscape | 4.2% |
| cars vehicles | 2.3% |
| text visuals | 1.2% |
| paintings art | 1.1% |

Captions

Microsoft

Created on 2019-05-22

| Caption | Confidence |
| --- | --- |
| a group of people wearing costumes | 48.2% |
| a group of people posing for a photo | 48.1% |
| a group of people posing for the camera | 48% |

OpenAI GPT

Created by gpt-4 on 2024-12-03

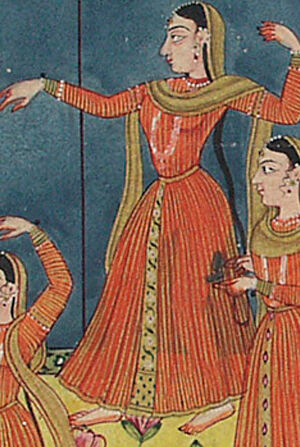

This is a traditional Indian miniature painting depicting a group of figures, likely women, dressed in vividly colored historical attire. They are adorned with detailed dresses, ornamented with patterns, and feature what appears to be traditional Indian jewelry such as necklaces and bracelets. The scene is set outdoors, indicated by the flowers in the foreground and the blue background which might symbolize the sky. The artwork is highly stylized, typical of miniature paintings from the Indian subcontinent, with attention to the fine detailing of the clothes and the environment. Repetition in the stance and attire of the figures suggests a form of assembly or procession. The top of the image features what seems to be the inside of a tent or a canopied structure, indicating that the gathering is happening under a form of shelter.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2024-12-29

This is a classical Indian miniature painting depicting a group of women in traditional dress performing what appears to be a dance or ceremonial gathering. The figures are wearing long, flowing orange-red dresses (possibly anarkali suits or similar traditional garments) with gold dupattas or scarves. The scene is set under a decorated red canopy or ceiling with a pattern of small dots or designs. The background is a deep blue-green color, and the floor is decorated with stylized pink flowers against a yellow ground. One figure appears to be leading the group, standing taller with raised arms in a dance pose. The painting demonstrates the characteristic style of Indian miniature art, with its detailed costumes, flat perspective, and decorative elements. A musical instrument (possibly a drum) can be seen being held by one of the figures in the group.

Created by claude-3-haiku-20240307 on 2024-11-27

This image appears to depict a group of people, likely women, wearing elaborate traditional Indian clothing in bright orange and red colors. The figures are arranged in a row, with one central figure seeming to be leading or directing the others. The background features a patterned roof or canopy overhead, as well as flowers and a starry night sky. The overall style of the artwork suggests it is an illustration or painting from a traditional Indian artistic tradition.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-28

This image is a painting of a group of women in traditional Indian attire, with one woman dancing and the others watching or playing instruments. The women are dressed in long, flowing robes with intricate designs and patterns, and they wear headscarves or veils. The woman dancing is in the center of the image, with her arms outstretched and her body moving in a fluid motion. The other women are arranged around her, some of them playing instruments such as drums or flutes, while others simply watch the dancer. The background of the image is a deep blue color, with a red and gold border at the top. The overall effect of the image is one of elegance and sophistication, with the women's traditional clothing and the beautiful background creating a sense of luxury and refinement. The image appears to be a representation of a traditional Indian dance performance, with the women showcasing their skills and beauty in a celebratory atmosphere.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-11-24

The image depicts a vibrant scene of women in traditional Indian attire, set against a backdrop of a blue sky with clouds. The women are dressed in long, flowing red dresses with intricate gold accents and headscarves, exuding an air of elegance and sophistication. In the foreground, one woman stands out, her arms outstretched as if conducting an orchestra or leading a procession. She is surrounded by eight other women, each engaged in various activities such as playing musical instruments, dancing, or simply standing in contemplation. The background of the image features a blue sky with white clouds, adding a sense of depth and atmosphere to the scene. The overall mood of the image is one of joy, celebration, and cultural richness, capturing the essence of Indian tradition and heritage. The image appears to be a painting or illustration, possibly from an ancient Indian manuscript or scroll. The level of detail and intricacy in the artwork suggests that it may have been created by a skilled artist or craftsman, using traditional techniques and materials. Overall, the image presents a captivating glimpse into the world of ancient India, showcasing the beauty, elegance, and cultural significance of traditional Indian attire and customs.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-01-05

The image is a painting of a group of women performing a dance. The women are dressed in traditional Indian attire, with bright orange dresses and yellow scarves. They are arranged in a row, with one woman in the center raising her hands. The background is a blue wall with a red roof. The painting has a traditional, old-fashioned style, with a simple and elegant composition.

Created by amazon.nova-pro-v1:0 on 2025-01-05

A painting depicts a group of women in traditional attire, possibly dancing and singing. They are wearing orange dresses with a yellow cloth around their necks. One of them is holding a musical instrument. They are standing on a yellow surface with flowers. Behind them is a blue wall with a red roof. The painting has a border that is blue on the top and bottom and green on the sides.