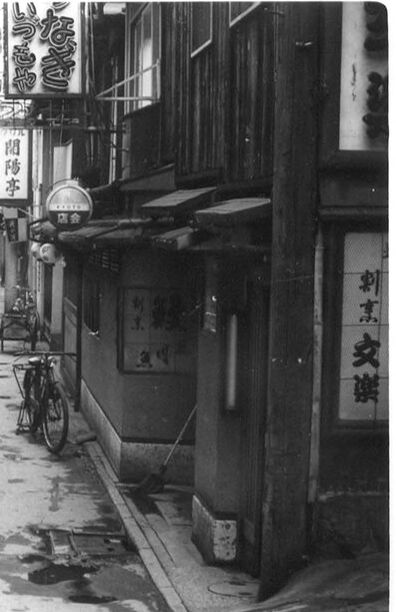

Machine Generated Data

Tags

Amazon

created on 2023-10-05

| Tag | Confidence |
| --- | --- |
| City | 100 |
| Road | 100 |
| Street | 100 |
| Urban | 100 |
| Clothing | 99.9 |
| Alley | 99.9 |
| Path | 99.3 |
| Person | 99 |
| Neighborhood | 98.7 |
| Person | 97.6 |
| Architecture | 94.7 |
| Building | 94.7 |
| Bicycle | 91.4 |
| Transportation | 91.4 |
| Vehicle | 91.4 |
| Bicycle | 91.3 |
| Machine | 91 |
| Wheel | 91 |
| Person | 86.4 |
| Walking | 83 |
| Wheel | 78.5 |
| Overcoat | 74 |
| Coat | 73.2 |
| Accessories | 70.6 |
| Bag | 70.6 |
| Handbag | 70.6 |
| Face | 64.1 |
| Head | 64.1 |
| Sidewalk | 57.7 |
| Outdoors | 56.7 |
| Pedestrian | 55.8 |
| Walkway | 55.1 |
| Shelter | 55.1 |

Clarifai

created on 2018-05-10

Imagga

created on 2023-10-05

Google

created on 2018-05-10

| Tag | Confidence |
| --- | --- |
| town | 94.4 |
| black and white | 90.7 |
| alley | 88.2 |
| street | 88 |
| neighbourhood | 84.4 |
| monochrome photography | 79 |
| road | 75.5 |
| monochrome | 65.4 |
| facade | 57.3 |

Microsoft

created on 2018-05-10

| Tag | Confidence |
| --- | --- |
| outdoor | 95.1 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 23-31 |
| Gender | Male, 99.7% |
| Sad | 100% |
| Surprised | 6.8% |
| Fear | 6.8% |
| Confused | 0.7% |
| Disgusted | 0.5% |
| Calm | 0.5% |
| Angry | 0.2% |
| Happy | 0.1% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 18-26 |
| Gender | Female, 93% |
| Fear | 92.3% |
| Calm | 14.8% |
| Surprised | 6.6% |
| Sad | 3.1% |
| Confused | 1.3% |
| Happy | 1.3% |
| Disgusted | 0.5% |
| Angry | 0.4% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 16-24 |
| Gender | Male, 85.7% |
| Sad | 53.1% |
| Calm | 36% |
| Confused | 18.1% |
| Surprised | 9.5% |
| Fear | 7.8% |
| Angry | 5.3% |
| Disgusted | 1.9% |
| Happy | 1.4% |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| streetview architecture | 97.5% |
| paintings art | 2.1% |

Captions

Microsoft

created on 2018-05-10

| Caption | Confidence |
| --- | --- |
| a group of people standing in front of a building | 79.2% |
| a group of people standing outside of a building | 79.1% |
| a group of people standing next to a building | 77.9% |

Text analysis

Amazon

***

las

so

POSTA

-水た4%

-

水

た

4

%