Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 12-22 |
| Gender | Female, 95.9% |
| Disgusted | 0% |
| Sad | 2.2% |
| Happy | 7.2% |
| Angry | 0.4% |
| Confused | 0.1% |
| Surprised | 0.2% |
| Fear | 0% |
| Calm | 89.9% |

Categories

Imagga

| Category | Confidence |
|---|---|
| streetview architecture | 95.1% |
| paintings art | 2.7% |
| nature landscape | 2% |

Captions

Microsoft

Created on 2020-04-24

| Caption | Confidence |
|---|---|
| a painting hanging on a wall | 83.8% |
| a painting on the side of a building | 74.2% |
| a painting on the wall | 74.1% |

Azure OpenAI

Created on 2024-12-04

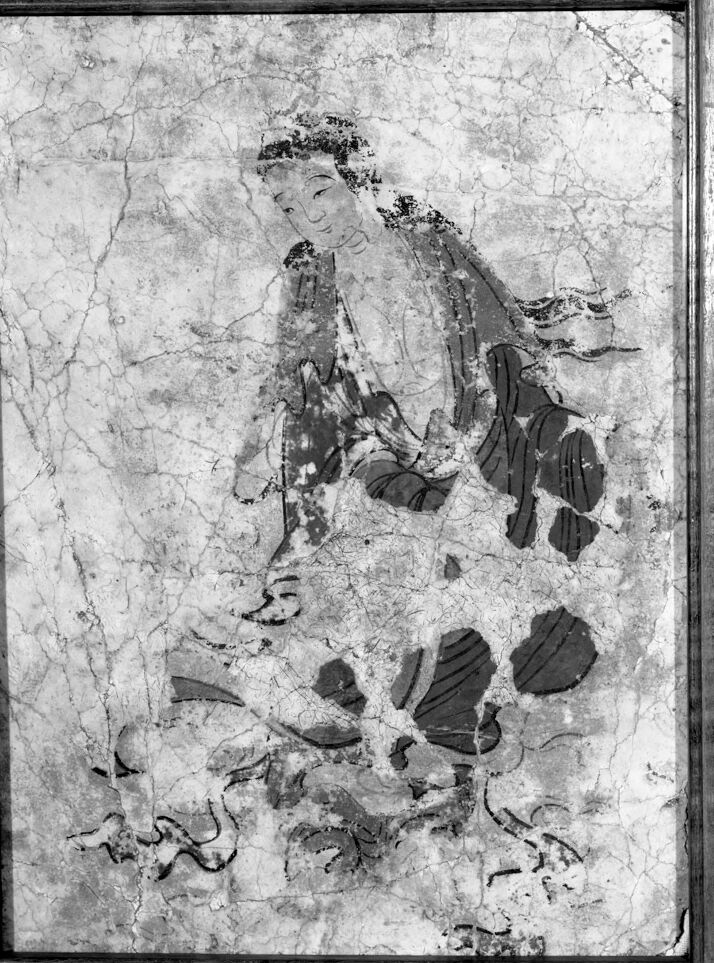

The image displays what appears to be an ancient wall painting or fresco framed within a modern frame. The painting is monochromatic and depicts a figure that might represent a historical or mythological character based on the clothing and posture. The figure is seated and adorned in elaborate attire, with distinguishable drapery and perhaps some headdress or hairstyle that suggests a cultural context. The background features incomplete imagery, possibly floral or plant elements, and there is significant cracking and damage throughout the artwork, indicative of its age and the degradation over time. This gives the piece a historical and archaeological significance.

Anthropic Claude

Created on 2024-11-27

The image appears to be a faded and cracked black and white photograph or painting of a figure. The figure has a serene, smiling expression and appears to be wearing ornate clothing or robes. The background is heavily textured and cracked, suggesting this is an old, weathered artwork. The composition focuses on the central figure, which seems to be the main subject of the image.

Meta Llama

Created on 2024-11-22

The image depicts a black and white photograph of an ancient painting, framed in wood. The painting is on a marble-like background and features a figure in a robe, possibly a monk or priest, with a serene expression. The figure is adorned with a headband and holds a staff in their right hand, while their left hand is placed on their lap. The painting is surrounded by a wooden frame, which adds a sense of depth and context to the overall image. The image appears to be a historical artifact, possibly from an ancient civilization or a religious institution. The use of a marble-like background and the depiction of a figure in a robe suggest that the painting may have been created during a specific time period or cultural context. The serene expression on the figure's face and the staff in their hand may indicate that the painting is related to a religious or spiritual theme. Overall, the image provides a glimpse into the artistic and cultural practices of a bygone era, and its historical significance is evident in the level of detail and craftsmanship that has gone into creating the painting.