Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 12-22 |
| Gender | Female, 52.9% |
| Happy | 46.3% |
| Surprised | 45.1% |
| Calm | 51.3% |
| Fear | 45.1% |
| Sad | 46.8% |
| Disgusted | 45.1% |
| Confused | 45.1% |
| Angry | 45.3% |

Feature analysis

Amazon

| Feature | Confidence |
|---|---|
| Person | 98.3% |

Categories

Imagga

| Category | Confidence |
|---|---|
| streetview architecture | 85.9% |
| paintings art | 11.9% |
| nature landscape | 1.4% |

Captions

OpenAI GPT

Created by gpt-4 on 2024-12-04

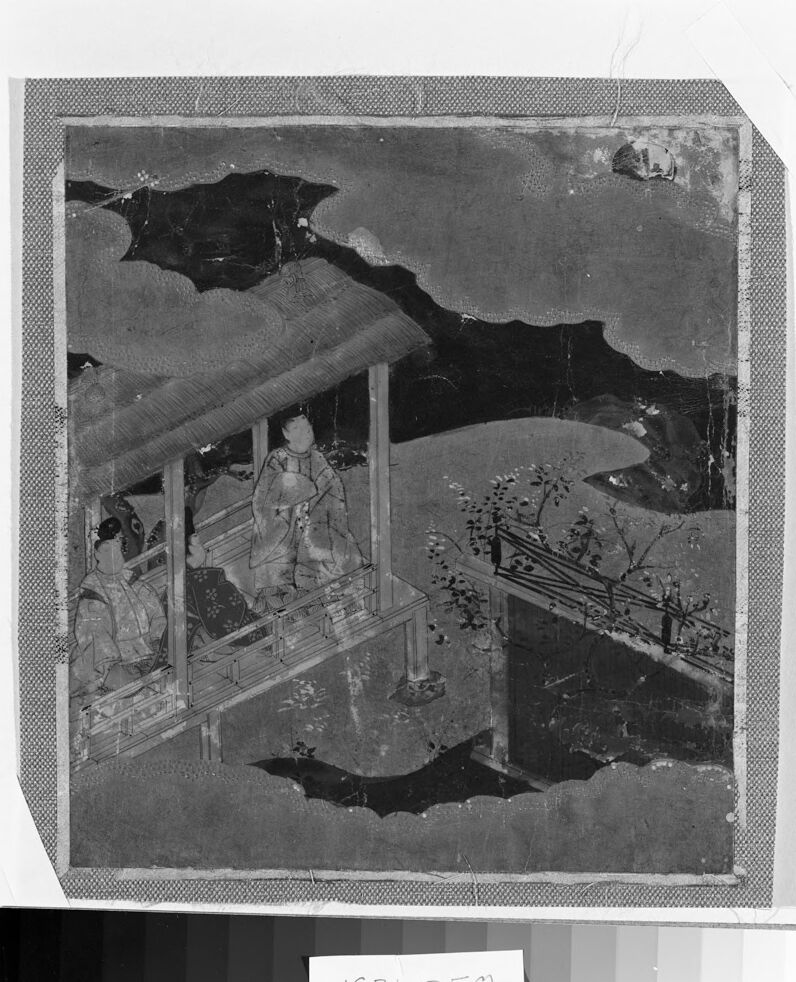

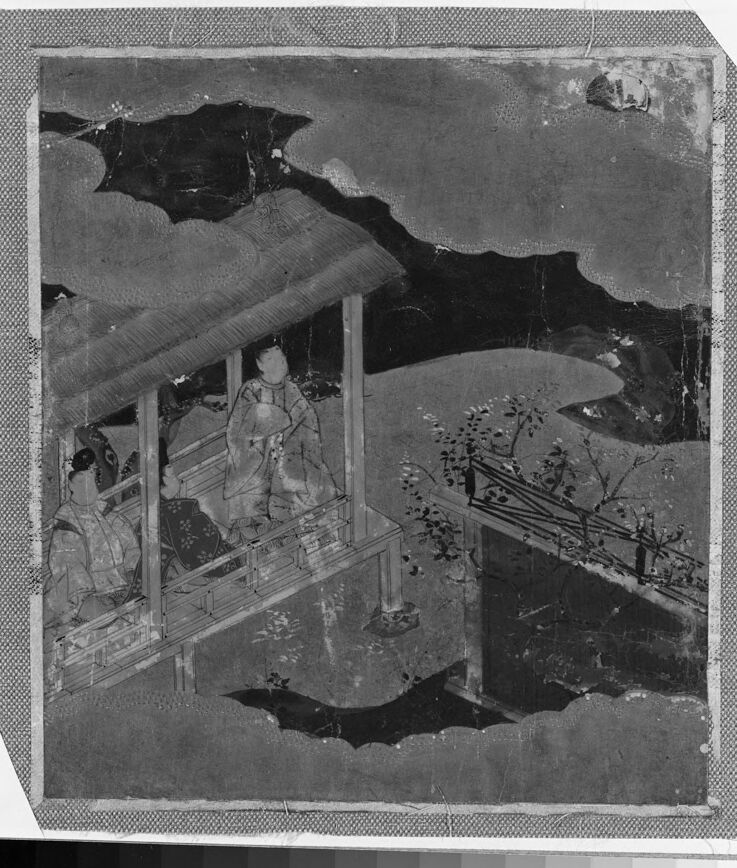

This is a black and white photograph of a piece of artwork, most likely an East Asian painting. The painting appears to be quite old, given the visible damage such as tears, holes, and areas where the artwork has faded or is missing. The scene depicted in the painting portrays an outdoor setting with architectural elements of wooden construction, suggesting a veranda or balcony. There are two figures seated inside this structure, wearing traditional East Asian garments, indicating their likely historical and cultural context. One of the figures is holding a fan. Trees and what appears to be a body of water are also part of the scene, contributing to a tranquil, picturesque setting. The details of the environment suggest a moment captured from a historical narrative or a scene of leisure from a bygone era. The photo also includes some sort of label, tag, or annotation at the bottom with the numbers "1931,250," which might refer to an inventory or accession number for the artwork’s cataloging within a collection or an archive. It is notable that because this is a photograph of the artwork, we are unable to discern the true colors or some of the finer details that would be present in the original piece.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2024-12-29

This appears to be a traditional Japanese artwork, likely from a historical narrative scroll or screen painting. The black and white image shows figures on what appears to be a wooden veranda or platform, rendered in a classical Japanese artistic style. The composition includes architectural elements like wooden posts and railings, and there appear to be some landscape elements in the background, including what might be trees or foliage. The image has some damage or deterioration, visible as dark patches or missing sections, particularly around the edges. The number "1931.250" is visible at the bottom of the photograph, suggesting this might be a museum catalog or archive reference number.

Created by claude-3-haiku-20240307 on 2024-11-27

The image appears to be a black and white photograph depicting a traditional Japanese or Asian landscape scene. It shows a structure, possibly a pavilion or small building, set against a mountainous background with a cloudy sky overhead. The structure has a distinctive roof and appears to be surrounded by trees or foliage. The overall scene has a serene and atmospheric quality, suggesting a peaceful, natural setting.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-29

The image is a black-and-white photograph of a Japanese painting, which appears to be a fragment of a larger work. The painting depicts a scene with three figures on a balcony or veranda, surrounded by a landscape featuring trees and hills in the background. The figures are dressed in traditional Japanese attire, suggesting that the painting is from the Edo period (1603-1868) or earlier. The painting is rendered in a style characteristic of Japanese art from this time period, with bold lines, vibrant colors, and intricate details. The use of negative space and the emphasis on natural forms are also typical of Japanese art from this era. The photograph itself appears to be an old print, possibly from the early 20th century. It has been mounted on a piece of paper or cardboard, which has been torn and worn over time. A handwritten note at the bottom of the image reads "1931, 250," which may indicate the date and catalog number of the photograph. Overall, the image provides a glimpse into the artistic traditions of Japan during the Edo period, as well as the techniques and materials used in photography during the early 20th century.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-11-24

The image presents a black-and-white photograph of an ancient painting, showcasing a scene from Japanese history. The painting is framed by a border and features a woman standing on a balcony, gazing out at a serene landscape. She is dressed in traditional Japanese attire, including a kimono and obi, and is surrounded by lush greenery and a tranquil body of water.

**Key Features:**

* **Woman on Balcony:** The central figure in the painting is a woman standing on a balcony, looking out at the landscape.
* **Traditional Attire:** She is dressed in a kimono and obi, which are traditional Japanese garments.
* **Lush Greenery:** The surrounding environment is characterized by lush greenery, adding to the serene atmosphere.
* **Tranquil Body of Water:** A tranquil body of water is visible in the background, contributing to the peaceful ambiance.
* **Ancient Painting:** The painting itself is an ancient work of art, providing a glimpse into Japan's rich cultural heritage.
* **Black-and-White Photograph:** The image is a black-and-white photograph, which may have been taken for preservation or documentation purposes.

**Overall Impression:** The image conveys a sense of serenity and tranquility, inviting the viewer to step into the peaceful world depicted in the painting. The traditional Japanese attire and the serene landscape further emphasize the cultural significance of the artwork.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-01-05

The image is a monochromatic artwork, possibly a painting or a print, featuring a scene with a bridge and a building. The bridge appears to be made of wood and has a roof, with a few people sitting on it. The building is a traditional Japanese-style structure with a roof and a small balcony. The artwork is framed with a white border and has a watermark with the number "1931, 250" in the bottom right corner. The image has a slightly blurry quality, which may be due to the age or condition of the artwork.

Created by amazon.nova-pro-v1:0 on 2025-01-05

The image is a monochromatic painting of a scene with a few people. The painting is in black and white, and it appears to be a monochromatic painting. The painting features a person sitting on a bench, and a few people are sitting beside him. A person is standing and holding a fan in his hand. A fence is located behind them. A few trees are planted on the other side of the fence.