Machine Generated Data

Face analysis

Amazon

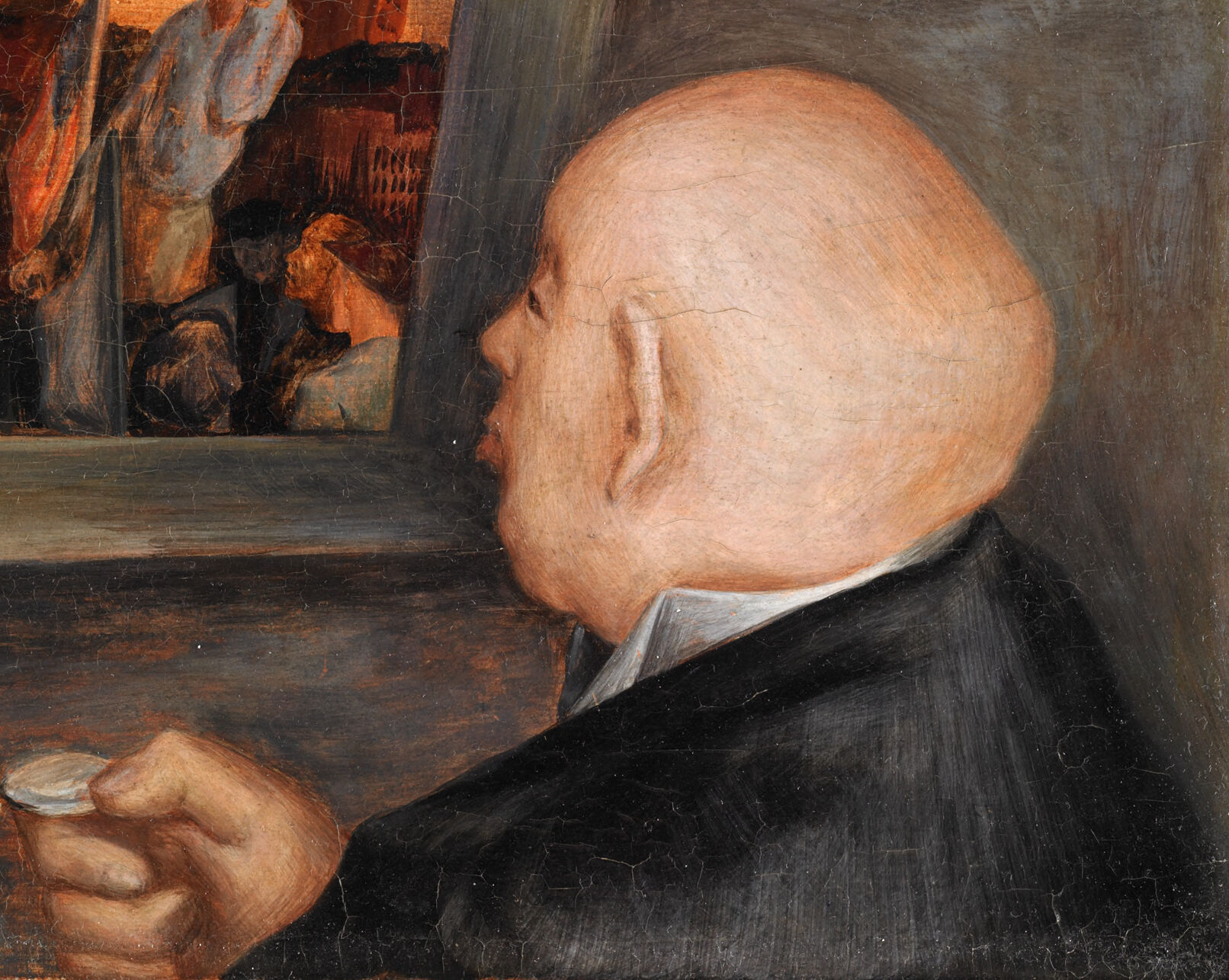

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 26-43 |
| Gender | Male, 76.9% |
| Angry | 15.3% |
| Confused | 4.5% |
| Calm | 26.3% |
| Sad | 46.5% |
| Happy | 1.1% |
| Surprised | 5.4% |
| Disgusted | 0.8% |

Feature analysis

Amazon

| Label | Confidence |
|---|---|
| Painting | 99.9% |

Categories

Imagga

| Category | Confidence |
|---|---|
| events parties | 48.8% |
| food drinks | 41.3% |
| people portraits | 7% |
| interior objects | 2% |
| text visuals | 0.5% |
| pets animals | 0.2% |
| nature landscape | 0.1% |

Captions

Microsoft

Created on 2018-04-19

| Caption | Confidence |
|---|---|
| a man sleeping next to a fireplace | 17% |
| a man is sleeping next to a fireplace | 13.6% |
| a man sitting next to a fireplace | 13.5% |

OpenAI GPT

Created by gpt-4 on 2024-01-26

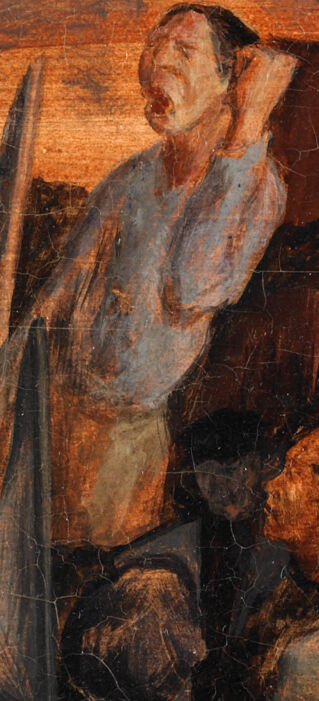

This image depicts an individual sitting in profile to the right, facing a mirror that reflects an interior scene different from the setting the individual is in. The reflected scene appears to be a bustling environment with various individuals and activity. The individual's attention is directed towards the mirror, and in their hand are two oval items, which they appear to be examining closely. The painting's perspective is designed to invite the viewer to look over the individual's shoulder, into the mirror and the scene depicted within.

Anthropic Claude

Created by claude-3-haiku-48k-20240307 on 2024-03-29

The image depicts an elderly man intently observing a painting which appears to show a group of figures in an industrial or urban setting. The man is seated, his head tilted slightly as he examines the artwork in front of him. The painting within the painting features vibrant colors and a dynamic, crowded scene. The overall impression is one of contemplation and engagement with the artistic work.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-25

The image depicts a painting of an older man in a black suit, holding a pocket watch and gazing at another painting. The man is balding, with a receding hairline, and has a white shirt visible under his jacket. He holds the pocket watch in his right hand, which is positioned in front of him. The painting he is looking at is framed in white and features a scene of people gathered around a woman who appears to be speaking or singing. The background of the painting is dark, with what seems to be a factory or industrial setting. The overall atmosphere of the image suggests that the man is deeply engaged in contemplating the painting, possibly reflecting on its themes or messages.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-11-23

The image depicts a painting of a man holding a small, round object in his hand, with a mirror reflecting a scene of people in the background. The man is bald and wears a black suit jacket over a white collared shirt. He holds the object, which resembles a pocket watch, in his right hand. In the background, a mirror reflects a scene of people gathered in a room, with one person standing on a table or platform, shouting or speaking to the others. The atmosphere of the image suggests that the man in the foreground is observing or listening to the scene unfolding in the mirror, possibly with a sense of interest or concern.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-01-04

In the painting, there is a bald man wearing a black suit. He is holding a watch in his hand, and he is looking at the painting on the wall. The painting on the wall is a picture of a man standing in front of a group of people. The man is holding a flag and raising his hand. The group of people are looking at the man.

Created by amazon.nova-lite-v1:0 on 2025-01-04

This is an oil painting depicting a man wearing a suit and holding a watch in his hand. He is looking at a framed painting on the wall, which shows a scene of people in a room with a man in the center, possibly shouting or speaking. The painting on the wall is set against a backdrop of an industrial landscape with chimneys and buildings. The painting has a dark, moody atmosphere, and the man in the foreground appears to be deep in thought or contemplation.