Machine Generated Data

Face analysis

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 7-17 |
| Gender | Female, 100% |
| Calm | 21.8% |
| Angry | 19.1% |
| Happy | 14.6% |
| Surprised | 13.2% |
| Confused | 11.4% |
| Fear | 10.1% |
| Disgusted | 8.6% |
| Sad | 5.1% |

Feature analysis

Amazon

| Person | 93.5% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 95.4% |
| pets animals | 4.4% |

Captions

Microsoft

Created by unknown on 2022-06-18

| Caption | Confidence |
| --- | --- |
| a group of people posing for a photo | 52.5% |
| a group of people riding on the back of a horse | 41.2% |
| a group of people pose for a photo | 41.1% |

Clarifai

Created by general-english-image-caption-blip on 2025-04-29

| a photograph of a couple of figurines of a man and a woman | -100% | |

OpenAI GPT

Created by gpt-4 on 2025-02-18

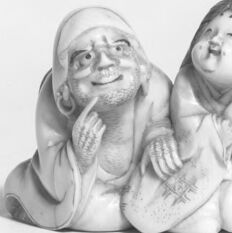

The image shows two small figurines against a white background. On the right is a figurine that resembles a standing turtle or tortoise, anthropomorphized with a humanoid posture. It seems to be wearing a coat or cloak with its front shell exposed, creating an amusing and whimsical appearance. The surface of the figurines indicates they might be carved from a material like ivory, depicting fine details and shading that highlight the craftsmanship involved in creating these objects. The left side of the image, where another figurine presumably exists, is not described due to alterations in the image.

Created by gpt-4o-2024-05-13 on 2025-02-18

The image shows two small figurines positioned against a plain background. On the left side, there are two figures clothed in detailed, patterned garments, seated closely together. Their heads are slightly inclined toward one another, creating a sense of interaction. On the right side stands a unique figurine resembling a bipedal frog, also wearing a patterned robe and carrying a sack over its shoulder, giving it a whimsical and anthropomorphic appearance. The overall composition focuses on these distinct and finely crafted figures.

Anthropic Claude

Created by claude-3-haiku-20240307 on 2025-02-18

The image shows three ceramic figurines in a stark black and white photograph. On the left are two smiling figures, one with a long beard and the other appearing younger. On the right is a figurine of what appears to be a dragon or other fantastical creature. The photograph has a minimalist, high-contrast style that emphasizes the textures and forms of the ceramic pieces.

Created by claude-3-opus-20240229 on 2025-02-18

The black and white image shows three small decorative figurines or statues. They appear to be made of ceramic, porcelain or a similar material.

The leftmost figurine depicts a smiling, chubby person sitting cross-legged with their hands on their knees. The middle figurine shows a young child or baby sitting with a happy expression. The rightmost figurine is of a cartoon-like animal character, possibly a bear or dog, standing upright and wearing some kind of garment or accessory.

The figurines are photographed against a plain white background, making their shapes and details stand out clearly. The image has an artistic composition focusing solely on these three whimsical ceramic figures.

Created by claude-3-5-sonnet-20241022 on 2025-02-18

This black and white photograph shows three small decorative figurines that appear to be Japanese netsuke - miniature sculptures that were historically used as toggles for pouches in traditional Japanese dress. The image features two figures sitting together on the left, appearing to be in conversation or sharing a moment, and a single standing figure on the right who appears to be carrying something. The figurines seem to be carved in intricate detail, likely from ivory or a similar material, and demonstrate the fine craftsmanship typical of netsuke art. The white background and studio-style lighting emphasize the three-dimensional quality and detailed carving of these small sculptures.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-03-15

The image presents a black and white photograph of two small, intricately carved figurines.

The figurine on the left features two individuals, one seated and the other standing, both with their hands clasped together in front of them. They are attired in robes, with the seated figure wearing a head covering. The figurine on the right depicts a person with their arms raised, wearing a robe and a hat.

The background of the image is a plain white surface, with a black border surrounding the photograph. The overall atmosphere of the image suggests that it may be a product photo for an online store or catalog, showcasing the figurines as decorative items.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-03-15

The image features a black-and-white photograph of three small, intricately carved figurines. The first two figurines are positioned on the left side of the image, while the third is situated on the right.

The first figurine depicts an elderly man with a long white beard and a head covering, seated in a contemplative pose with his chin resting on his hand. Adjacent to him is a woman with long hair, also seated and gazing upwards. Both figurines appear to be crafted from white stone or ivory.

On the right side of the image, a third figurine is visible. This figurine represents a turtle with a human-like body, adorned with a cloak draped over its shoulders. The turtle's head is turned towards the viewer, and it appears to be crafted from a combination of white and dark-colored stone or ivory.

The background of the image is a plain white surface, which suggests that the photograph may have been taken in a studio setting. A thin black border surrounds the edges of the image, adding a touch of elegance to the overall composition.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-02-18

The image features three figurines, two of which are seated and one standing, captured in a monochromatic filter. The figurines are placed against a plain white background, which makes the details of the figurines stand out. The two seated figurines are positioned close to each other, while the standing figurine is placed to the right of them. The seated figurines appear to be engaged in conversation, with one of them gesturing with its hand. The standing figurine has a more serious expression and is wearing a robe. The figurines are intricately designed, with attention to detail in their facial features and clothing.

Created by amazon.nova-pro-v1:0 on 2025-02-18

In the black-and-white image, there are two groups of figurines. The first group is on the left side, featuring two figurines of a man and a woman. They are sitting and facing each other, with the man's hands raised. The woman is smiling and looking at the man. The second group is on the right side, featuring a figurine of a frog. It is standing and carrying something on its back.