Machine Generated Data

Tags

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 45-66 |
| Gender | Female, 55.9% |
| Happy | 14.3% |
| Confused | 11.1% |
| Calm | 11.8% |
| Surprised | 7.7% |
| Disgusted | 1.5% |
| Sad | 50.7% |
| Angry | 2.9% |

Feature analysis

Amazon

| Label | Confidence |
|---|---|
| Person | 87.7% |

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 99.1% |

Captions

Microsoft

Created on 2019-01-18

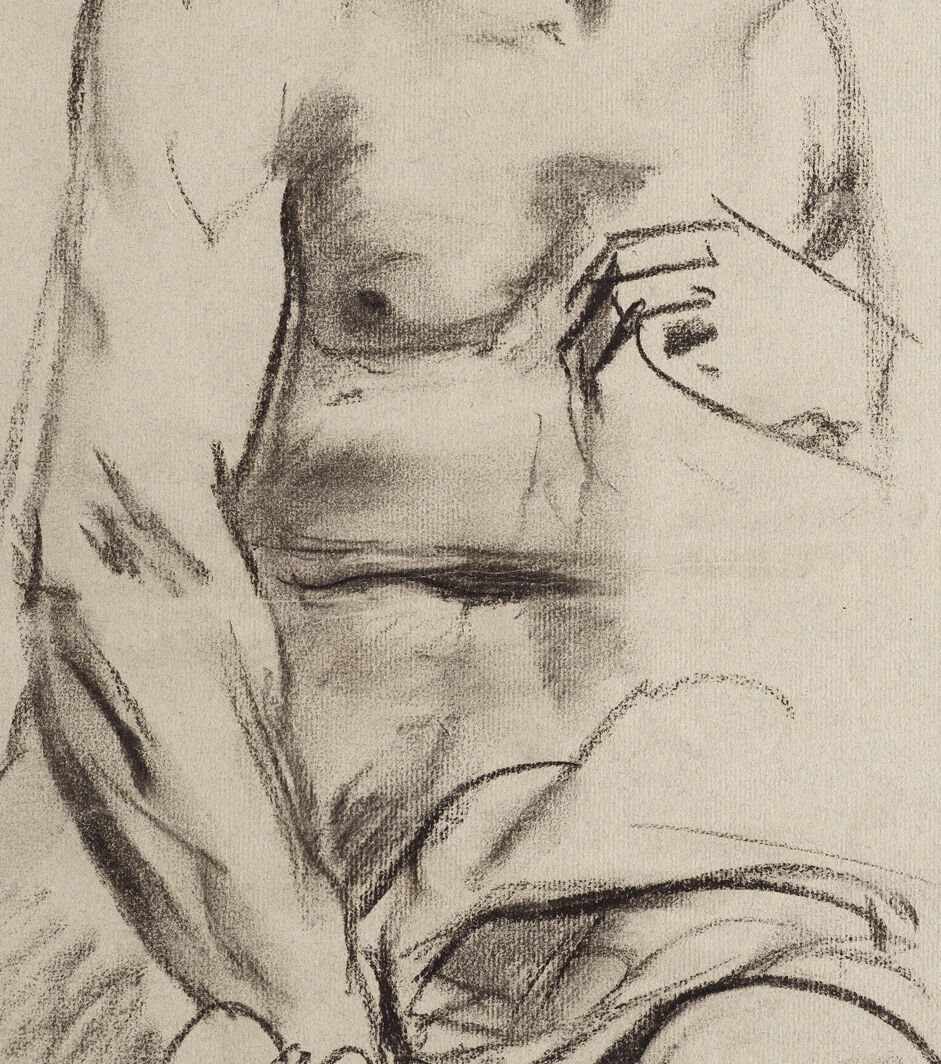

| Caption | Confidence |
|---|---|
| a close up of a book | 57.2% |
| close up of a book | 51.2% |
| a hand holding a book | 51.1% |

OpenAI GPT

Created by gpt-4o-2024-05-13 on 2024-12-31

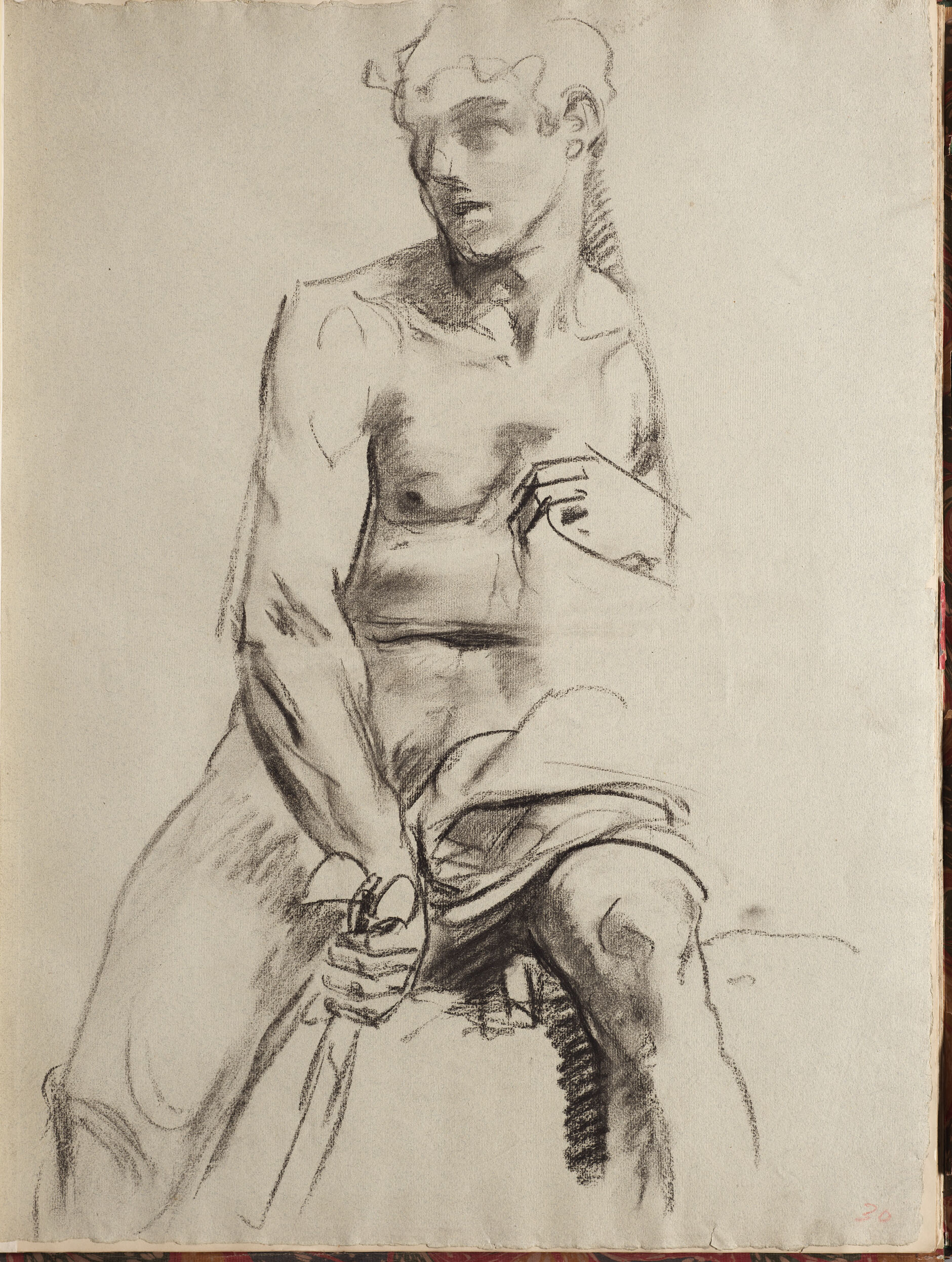

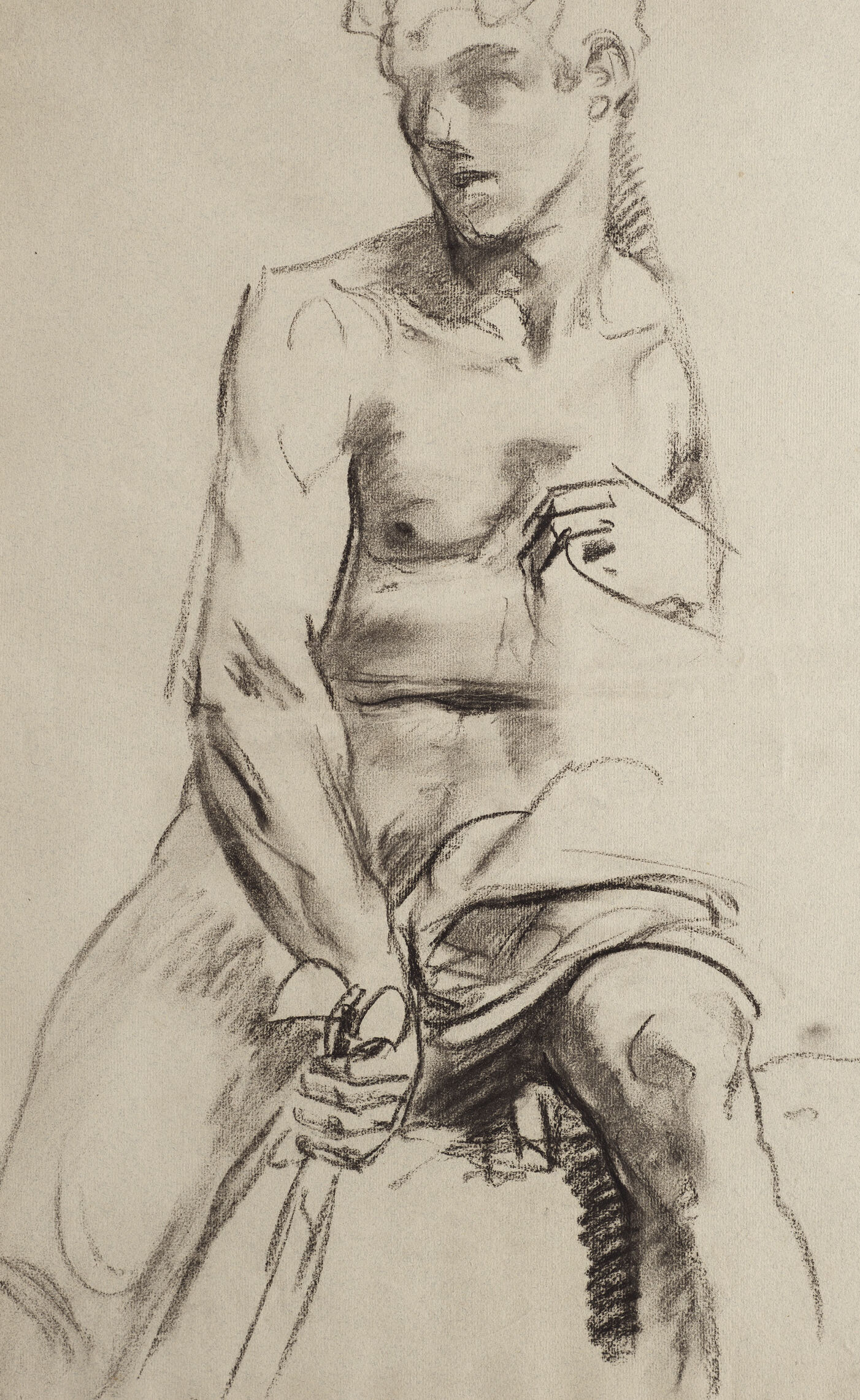

This image is a sketch of a male figure, depicted from the torso up. The figure is muscular and is sitting down with a partially draped cloth over his lap. One of the figure's arms is bent at the elbow, and the hand is resting close to the chest, while the other hand appears to be gripping an object, possibly a piece of the draped fabric. The sketch is done in a loose, expressive style, emphasizing the contours and musculature of the body.

Created by gpt-4 on 2024-11-17

This is a charcoal sketch of a seated figure with their upper body visible, showing well-defined musculature. The individual appears to be holding something in their left hand, which rests on their thigh. The right hand is also visible but it's not clear what it is doing due to the drawing being cut off at that point. The work is rendered in a realistic style, capturing shadows and highlights to create a three-dimensional effect. The paper's edges appear frayed, suggesting age, and it's bordered by a patterned edge, indicating it may have been mounted or framed in a decorative manner. The artistry indicates a focus on form and anatomy.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2024-12-27

This is a charcoal or graphite figure drawing of a male figure shown from roughly the waist up. The drawing has a classical, academic style with strong attention to form and anatomy. The subject appears to be partially draped in fabric that falls around the lower portion of the torso. The upper body is unclothed, showing muscular definition in the chest and shoulders. The drawing technique uses loose, gestural strokes while still maintaining clear structure and form. The shading creates dramatic contrast and helps define the musculature. It's reminiscent of the style of figure drawings done in traditional art academies, where studying the human form was a fundamental part of artistic training.

Created by claude-3-haiku-20240307 on 2024-11-17

The image appears to be a charcoal or pencil sketch of a male figure. The figure is shown in a seated or reclining position, with a muscular upper body exposed. The figure has an angular jawline and a slightly pensive or brooding expression. The rendering is loose and expressionistic, with bold lines and shading that capture the form and mood of the subject.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-24

This image presents a charcoal sketch of a male figure, rendered in a loose and expressive style. The subject is depicted from the waist up, with his head turned to the left and his body facing forward. He wears a draped cloth around his lower torso, which is tied at the front with a knot. The artist's use of charcoal creates a sense of movement and energy in the drawing, with bold lines and gestural marks that convey a sense of spontaneity. The overall effect is one of dynamic tension, as if the figure is about to spring into action. The background of the image is a plain, off-white surface, which serves to highlight the figure and create a sense of depth and dimensionality. The edges of the paper are visible, giving the impression that the drawing is a work in progress, rather than a finished piece. Overall, this image is a captivating example of figurative drawing, showcasing the artist's skill and creativity in capturing the human form.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-11-29

The image is a charcoal sketch of a male figure, possibly a study for a larger work or a standalone piece of art. The male figure is depicted in a seated position, with his left arm resting on his thigh and his right arm extended, holding an object that is not clearly visible. He is wearing a loincloth, and his body is turned slightly to the left, with his head facing forward. The background of the sketch is a light gray color, which helps to highlight the details of the figure. The overall atmosphere of the sketch is one of contemplation and introspection, as the figure appears to be lost in thought. The use of charcoal creates a sense of texture and depth, adding to the overall impact of the piece. The sketch is likely a study for a larger work, as it appears to be a detailed and precise representation of the male figure. The artist's use of charcoal and the level of detail in the sketch suggest that they are working on a more complex piece, possibly a painting or a sculpture. Overall, the sketch is a beautiful and evocative representation of the male figure, and it provides a glimpse into the artist's process and creative vision.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-02-26

The image shows a sketch of a man sitting on a chair. The man is naked and appears to be looking at something in front of him. The sketch is in black and white and has a simple and rough style. The man's body is drawn with a few lines, and the details of his face and hands are not clear. The sketch is on a white sheet of paper with a red border on the top and bottom.

Created by amazon.nova-pro-v1:0 on 2025-02-26

The image depicts a black-and-white drawing of a man. He is sitting on a stool, and his right hand is holding a stick. He is looking to his left. His right leg is crossed over his left leg. He is wearing a piece of clothing that covers his lower body. The drawing is on a piece of paper that is placed on top of another piece of paper.