Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 22-34 |
| Gender | Female, 93.3% |
| Happy | 4.1% |
| Calm | 85.3% |
| Disgusted | 1.4% |
| Surprised | 5.2% |
| Confused | 0.3% |
| Angry | 3.3% |
| Fear | 0.2% |
| Sad | 0.3% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 12-22 |
| Gender | Male, 87.9% |
| Happy | 0.1% |
| Surprised | 0% |
| Confused | 0.1% |
| Disgusted | 0% |
| Calm | 99.1% |
| Sad | 0.5% |
| Fear | 0% |
| Angry | 0.2% |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Amazon

| Label | Confidence |
| --- | --- |
| Person | 92.7% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 100% |

Captions

Microsoft

Created on 2020-04-25

| Caption | Confidence |
| --- | --- |
| an old photo of a person | 55.5% |
| old photo of a person | 48.4% |
| a photo of a person | 48.3% |

Azure OpenAI

Created by gpt-4o-2024-05-13 on 2024-12-15

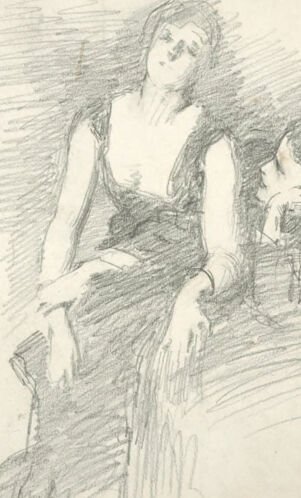

This image is a pencil sketch on paper depicting two women in a relaxed, possibly melancholic pose. The central female figure appears to be leaning back with her eyes closed and her arms resting limply at her sides. Her long dress flows down and spreads out on the ground. Next to her, a second female figure is positioned lower, resting her face near the central figure's arm. The background is filled with hatching lines, indicating shading and depth around both figures. The sketch conveys a sense of calm, intimacy, and contemplation. The edges of the paper have a slightly worn or aged appearance, with faint spots and smudges scattered on the surface.

Created by gpt-4 on 2024-12-15

The image shows a pencil sketch of two figures on a piece of paper with some aged spots and mild discolouration. The sketch itself appears somewhat unfinished or hastily drawn. It depicts two individuals, with the one on the left sitting in a contemplative pose, resting their face on one hand while their elbow is propped up on a surface, possibly a table or a leg. This figure has a stylized hairdo that implies motion or unsettlement. The second figure is reclined in what appears to be the lap or against the bent leg of the first figure, with their head resting sideways, eyes closed, which gives an impression of sleep or perhaps despair. The linework is loose and expressive, suggesting a moment captured quickly or a study of poses. There is a stark contrast between the detailed rendering of the figure on the left and the more relaxed lines of the figure on the right. The background is roughly shaded, providing a sense of space but not a lot of context, focusing the viewer's attention on the figures themselves.

Anthropic Claude

Created by claude-3-haiku-20240307 on 2024-12-15

The image appears to be a pencil sketch or drawing depicting a female figure seated on what looks like a chair or bench. The figure is shown in a relaxed, contemplative pose, with her head resting on her hands. The background is rather indistinct, with some sketchy lines and shading suggesting a setting or environment, but the focus is clearly on the central female figure. The drawing has a soft, ethereal quality, with the lines and shading creating a sense of movement and expression in the figure.

Created by claude-3-5-sonnet-20241022 on 2024-12-15

This is a pencil sketch showing a figure in what appears to be an evening gown or formal dress. The figure is seated and leaning back in a relaxed pose, with their head tilted upward. The drawing style is loose and expressive, with quick, gestural lines creating the form and suggesting movement. There's a sense of drama in the pose, enhanced by the shading and background treatment. The sketch has a vintage quality to it, and appears to be on aged paper with some spots or discoloration. The artist used simple but effective shading techniques to create depth and form in the drawing.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-12-15

The image presents a sketch of a woman in a black dress, seated and leaning against a table or chair, with her right hand resting on her lap and her left hand on her head. The sketch is rendered in pencil on a piece of paper, with visible pencil marks and smudges, suggesting a work in progress or a study piece. The woman's attire consists of a sleeveless black dress, possibly a long-sleeved dress with the sleeves rolled up, although the sketch is not detailed enough to confirm this. Her hair is dark and pulled back, and she wears a bracelet on her right wrist. The background of the sketch is a light-colored paper, with some faint pencil marks and smudges visible. The overall atmosphere of the sketch is one of contemplation or relaxation, as the woman appears to be lost in thought, with her head tilted slightly to the side and her eyes cast downward. The sketch is likely a study or practice piece, rather than a finished work of art, given its rough and unfinished appearance.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-15

The image is a pencil drawing of a woman sitting on a chair, with her head tilted back and her eyes closed. She is wearing a long dress that appears to be black or dark-colored, and her hair is pulled back into a bun. The background of the drawing is white, with some faint lines and marks visible. The overall effect of the drawing is one of serenity and calmness, as if the woman is lost in thought or meditation. The use of soft, gentle lines and subtle shading creates a sense of depth and dimensionality, while the simplicity of the composition focuses attention on the subject's face and expression. The drawing may have been created as a study or exercise in capturing the subtleties of human emotion and expression, or it could be a more finished piece intended to evoke a specific mood or atmosphere. Regardless of its purpose, the drawing is a beautiful example of the artist's skill and attention to detail.