Machine Generated Data

Tags

Amazon

created on 2021-04-03

Clarifai

created on 2021-04-03

Imagga

created on 2021-04-03

Google

created on 2021-04-03

| Tag | Confidence (%) |
|---|---|
| Hair | 98.4 |
| Outerwear | 95.1 |
| Hairstyle | 95.1 |
| Photograph | 94.1 |
| Shirt | 93.8 |
| Black | 89.7 |
| Black-and-white | 86.5 |
| Chair | 85.3 |
| Style | 84.1 |
| Black hair | 78.3 |
| Monochrome photography | 76.4 |
| Monochrome | 75.6 |
| Beauty | 75.3 |
| Snapshot | 74.3 |
| Vintage clothing | 73 |
| Event | 71 |
| Room | 70.3 |
| Classic | 69.7 |
| Photo caption | 67.7 |
| Stock photography | 65.7 |

Color Analysis

Face analysis

Amazon

Microsoft

AWS Rekognition

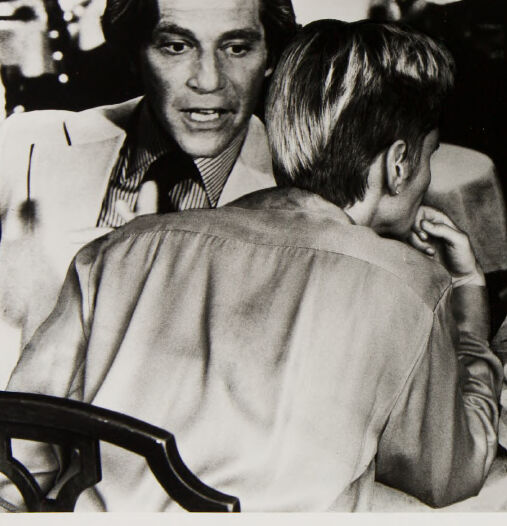

| Attribute | Estimate |
|---|---|
| Age | 42-60 |
| Gender | Male, 99.8% |
| Calm | 49.8% |
| Angry | 12% |
| Surprised | 9.9% |
| Happy | 9.1% |
| Fear | 8% |
| Sad | 5.3% |
| Disgusted | 3.9% |
| Confused | 2% |

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 29-45 |
| Gender | Female, 99.5% |
| Calm | 91.1% |
| Sad | 7% |
| Angry | 1% |
| Fear | 0.4% |
| Confused | 0.2% |
| Surprised | 0.2% |
| Disgusted | 0.1% |
| Happy | 0.1% |

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 22-34 |
| Gender | Female, 91.7% |
| Happy | 57.9% |
| Calm | 11.5% |
| Surprised | 9.4% |
| Confused | 9.3% |
| Sad | 6.2% |
| Fear | 3.5% |
| Disgusted | 1.3% |
| Angry | 0.9% |

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 6-16 |
| Gender | Male, 75% |
| Sad | 95.1% |
| Fear | 4.7% |
| Calm | 0.1% |
| Angry | 0% |
| Confused | 0% |
| Happy | 0% |
| Surprised | 0% |
| Disgusted | 0% |

Microsoft Cognitive Services

| Attribute | Estimate |
|---|---|
| Age | 50 |
| Gender | Male |

Microsoft Cognitive Services

| Attribute | Estimate |
|---|---|
| Age | 33 |
| Gender | Female |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Possible |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very likely |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| people portraits | 58.3% |
| paintings art | 41.2% |

Captions

Microsoft

created on 2021-04-03

| Caption | Confidence |
|---|---|
| George Segal, Glenda Jackson posing for a photo | 95.8% |
| George Segal, Glenda Jackson posing for the camera | 95.7% |
| George Segal, Glenda Jackson in an old photo of a person | 94% |

Text analysis

Amazon

TC12

TC 12

TC

12