Machine Generated Data

Tags

Amazon

created on 2021-12-14

Clarifai

created on 2023-10-25

Imagga

created on 2021-12-14

| plow | 74.6 |
| tool | 61.3 |
| barrow | 24.1 |
| handcart | 20.4 |
| man | 20.1 |
| wheeled vehicle | 19 |
| vehicle | 18.1 |
| outdoor | 16.8 |
| old | 16 |
| field | 15.9 |
| people | 14.5 |
| landscape | 14.1 |
| outdoors | 13.4 |
| sky | 13.4 |
| summer | 12.9 |
| vintage | 12.4 |
| person | 12 |
| male | 11.3 |
| countryside | 11 |
| travel | 10.6 |
| black | 10.2 |
| sport | 10 |
| rural | 9.7 |
| work | 9.6 |
| lifestyle | 9.4 |
| silhouette | 9.1 |
| environment | 9 |
| vacation | 9 |
| active | 9 |
| fun | 9 |
| transportation | 9 |
| sun | 8.8 |
| working | 8.8 |
| wreckage | 8.8 |
| building | 8.7 |
| grass | 8.7 |
| outside | 8.6 |
| industry | 8.5 |
| two | 8.5 |
| adult | 8.4 |
| leisure | 8.3 |
| protection | 8.2 |
| danger | 8.2 |
| activity | 8.1 |
| farm | 8 |
| mountain | 8 |
| day | 7.8 |
| destruction | 7.8 |
| life | 7.8 |
| track | 7.8 |
| cloud | 7.7 |
| truck | 7.7 |
| grunge | 7.7 |
| dirt | 7.6 |
| part | 7.5 |
| machine | 7.4 |
| shovel | 7.3 |
| dirty | 7.2 |
| road | 7.2 |
| sunset | 7.2 |
| sand | 7.1 |

Google

created on 2021-12-14

| Motor vehicle | 84.8 |
| Adaptation | 79.2 |
| Helmet | 60.9 |
| History | 60.5 |
| Event | 60.3 |
| Monochrome | 58.8 |
| Art | 55.7 |
| Monochrome photography | 51.3 |
| Blue-collar worker | 50.6 |
| Sitting | 50.5 |

Color Analysis

Face analysis

Amazon

Microsoft

AWS Rekognition

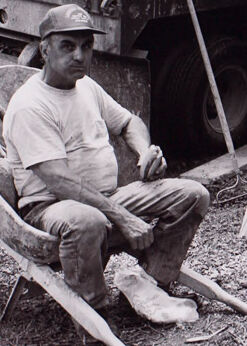

| Age | 29-45 |
| Gender | Male, 99.4% |
| Calm | 66.9% |
| Sad | 25.8% |
| Angry | 3.5% |
| Confused | 2.5% |
| Disgusted | 0.4% |
| Surprised | 0.4% |
| Happy | 0.2% |
| Fear | 0.2% |

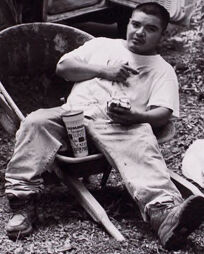

AWS Rekognition

| Age | 22-34 |
| Gender | Male, 97.1% |
| Calm | 89.4% |
| Sad | 3.7% |
| Angry | 3.3% |
| Confused | 1.5% |
| Fear | 1% |
| Surprised | 0.5% |
| Disgusted | 0.4% |
| Happy | 0.3% |

AWS Rekognition

| Age | 39-57 |
| Gender | Male, 98.1% |
| Angry | 94.2% |
| Calm | 5.3% |
| Confused | 0.2% |
| Sad | 0.2% |
| Disgusted | 0.2% |
| Surprised | 0% |
| Happy | 0% |
| Fear | 0% |

Microsoft Cognitive Services

| Age | 49 |
| Gender | Male |

Google Vision

| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very likely |
| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| paintings art | 100% |

Captions

Microsoft

created on 2021-12-14

| a person sitting on the ground | 54.9% |

Text analysis

Amazon

FORD

COUNT

DEVITIONS

GATTER

wan GATTER

wan

COUNT

FORD

COUNT

FORD