Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 24-34 |
| Gender | Male, 65.6% |
| Sad | 99.9% |
| Calm | 24.6% |
| Surprised | 6.4% |
| Fear | 5.9% |
| Angry | 0.7% |
| Disgusted | 0.3% |
| Happy | 0.3% |
| Confused | 0.2% |

Feature analysis

Amazon

| Label | Confidence |
| --- | --- |
| Person | 99.8% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 64.2% |
| streetview architecture | 27.4% |
| text visuals | 7.4% |

Captions

Microsoft

created on 2022-06-25

| Caption | Confidence |
| --- | --- |
| a man standing next to a woman | 42.3% |

OpenAI GPT

Created by gpt-4o-2024-05-13 on 2024-12-29

Created by gpt-4 on 2024-12-05

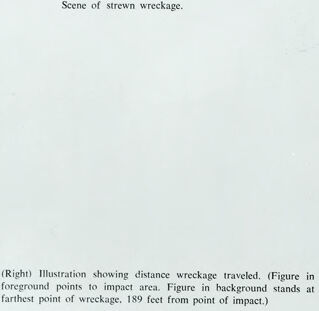

This is a black-and-white photograph that appears to be part of an investigative or accident report, given its format and content. The photo is split into two different scenes: On the left side, there's a caption that reads "Scene of strewn wreckage." The picture shows a desert landscape with scattered debris, indicating some sort of violent event that has spread pieces of wreckage over an area. On the right side, there's another photo depicting a road that runs through a desert. There are two figures: one close to the camera in the foreground and another farther down the road. The person closest to the camera is standing beside the road, pointing to the left, presumably towards the point of impact or the source of the strewn wreckage. Patches of debris are visible along the side of the road, suggesting a trajectory path of the wreckage that was thrown from the point of impact. The caption under this photo explains that it's an illustration showing the distance the wreckage traveled, with the person in the foreground pointing towards the impact area, while the figure in the background stands at the farthest point of wreckage, 189 feet from the point of impact. The spiral binding on the left edge indicates that this is likely a printed document or part of a compiled investigation file.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2024-12-26

This appears to be a black and white investigative or documentary photograph showing a scene of debris or wreckage scattered across a desert landscape. The image is from what seems to be a spiral-bound book or report, with visible binding on the right side. There are two parts to the image - an upper photo showing scattered debris, and a lower photo showing the full extent of the debris field. Someone in a white shirt is standing at one end of the debris field, pointing to what appears to be an impact area. According to the caption, this person is marking the distance wreckage traveled, which was 189 feet from the point of impact. The terrain is very rocky and barren, typical of a desert environment. The caption describes it as a "scene of strewn wreckage."

Created by claude-3-haiku-20240307 on 2024-12-05

The image shows a scene of strewn wreckage on a desert landscape. In the foreground, there is a large area covered in debris and rocks. A man is standing on the side of the road, looking out over the wreckage. In the background, there is a faint road or path visible, with more debris and vehicles in the distance. The image appears to be a black and white illustration or photograph, with the caption providing additional details about the distance the wreckage has traveled and the location of the impact point.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-23

The image depicts a black-and-white photograph of a man standing on the side of a road, with a desert landscape in the background. The man is dressed in a white shirt and dark pants, and he appears to be examining something on the ground. In the foreground, there is a caption that reads "Scene of strewn wreckage." Below this caption, there is a smaller text that explains the scene: "(Right) Illustration showing distance wreckage traveled. (Figure in foreground points to impact area. Figure in background stands at farthest point of wreckage, 189 feet from point of impact.)" The overall atmosphere of the image suggests that it may be related to an accident or incident involving a vehicle, possibly a car or airplane. The presence of the man examining the wreckage and the caption indicating the distance traveled by the wreckage imply that the image is documenting the aftermath of an event.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-12-05

The image is a black-and-white photograph of a man standing on a desert road, with a caption and text below it. The purpose of the image appears to be educational or informative, as it includes a caption and text that provide context and explanation. Here are the key features of the image:

* **Man on the Road**
  + Standing on the side of a desert road
  + Wearing a white shirt and dark pants
  + Looking down at the ground
* **Caption**
  + Located below the photograph
  + Reads "Scene of strewn wreckage."
* **Text**
  + Located below the caption
  + Provides additional information about the scene
  + Includes a diagram illustrating the distance of the wreckage from the point of impact

Overall, the image suggests that the man is investigating or examining the scene of an accident or incident, and the caption and text provide context and explanation for the image.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-02-25

The image is a black-and-white photograph of a person standing on the side of a road, looking at the ground. The road is empty, and there is a car in the distance. The ground is rocky, and there are some plants and bushes on the side. The image is part of a spiral-bound book, and there is a text on the left side of the image. The text reads, "Scene of strewn wreckage."

Created by amazon.nova-pro-v1:0 on 2025-02-25

The image is a page from a spiral-bound book, possibly a technical manual or a scientific report. It contains two photographs on the right side and a diagram with text on the left side. The photographs depict a desert landscape with a road running through it. In the top photograph, a man stands on the side of the road, wearing a white shirt and dark pants, looking down at the ground. The bottom photograph shows the same man, now standing on the opposite side of the road, still looking down. The road appears to be a two-lane highway with a white line marking the center. The desert landscape is characterized by sparse vegetation, rocky terrain, and a clear blue sky. The diagram on the left side of the page contains a drawing of a road with a white line and a series of points labeled "Scene of strewn wreckage." The text below the diagram provides an explanation of the distance traveled by the wreckage and its impact area.