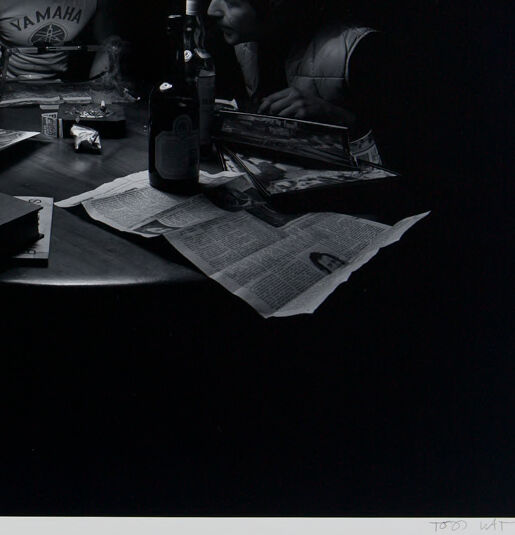

Machine Generated Data

Tags

Amazon

created on 2022-01-08

| Tag | Confidence |
|---|---|
| Person | 99.4 |
| Human | 99.4 |
| Person | 98.8 |
| Restaurant | 92.1 |
| Table | 91.9 |
| Furniture | 91.9 |
| Sitting | 89.5 |
| Dining Table | 89.2 |
| Person | 88.6 |
| Food | 77.7 |
| Pub | 73.8 |
| Bar Counter | 71.8 |
| Meal | 67.7 |
| Food Court | 60 |
| Overcoat | 58.8 |
| Clothing | 58.8 |
| Coat | 58.8 |
| Apparel | 58.8 |
| Dish | 55.9 |

Clarifai

created on 2023-10-25

| Tag | Confidence |
|---|---|
| monochrome | 99.5 |
| people | 99 |
| art | 98.3 |
| light | 97.8 |
| portrait | 97.6 |
| girl | 96.9 |
| room | 96.6 |
| woman | 96.6 |
| furniture | 95.8 |
| studio | 95.8 |
| indoors | 95.6 |
| model | 95.4 |
| shadow | 94.6 |
| book bindings | 94.6 |
| table | 94.2 |
| man | 94.2 |
| adult | 93.6 |
| still life | 92.8 |
| desk | 92 |
| book series | 91.8 |

Imagga

created on 2022-01-08

| Tag | Confidence |
|---|---|
| person | 27.4 |
| black | 23.7 |
| people | 23.4 |
| notebook | 22.3 |
| musical instrument | 21.5 |
| portable computer | 20.8 |
| adult | 19.2 |
| man | 18.9 |
| sexy | 17.7 |
| male | 17 |
| attractive | 16.8 |
| body | 16.8 |
| lady | 16.2 |
| personal computer | 16 |
| dark | 15.9 |
| music | 15.3 |
| model | 14.8 |
| pretty | 14.7 |
| studio | 14.4 |
| portrait | 13.6 |
| women | 13.4 |
| wind instrument | 13.4 |
| chair | 13.3 |
| silhouette | 13.2 |
| computer | 13.2 |
| love | 12.6 |
| human | 12 |
| looking | 12 |
| laptop | 11.2 |
| one | 11.2 |
| sitting | 11.2 |
| style | 11.1 |
| hair | 11.1 |
| digital computer | 10.7 |
| night | 10.7 |
| dance | 10.6 |
| business | 10.3 |
| spotlight | 10 |
| sax | 9.8 |
| fashion | 9.8 |
| office | 9.6 |
| performance | 9.6 |
| rock | 9.6 |
| dancer | 9.5 |
| light | 9.4 |
| brass | 9.3 |
| face | 9.2 |
| disk jockey | 9.2 |
| desk | 9.1 |
| performer | 8.9 |
| guitar | 8.8 |
| nude | 8.7 |
| percussion instrument | 8.7 |
| party | 8.6 |
| men | 8.6 |
| thinking | 8.5 |
| musician | 8.5 |
| teenager | 8.2 |
| sensuality | 8.2 |
| working | 8 |
| lifestyle | 8 |
| businessman | 7.9 |
| art | 7.9 |
| smile | 7.8 |
| hands | 7.8 |
| sex | 7.8 |
| dancing | 7.7 |
| expression | 7.7 |
| two | 7.6 |
| room | 7.6 |
| passion | 7.5 |
| broadcaster | 7.4 |
| sunset | 7.2 |
| romance | 7.1 |
| posing | 7.1 |

Google

created on 2022-01-08

| Tag | Confidence |
|---|---|
| Tableware | 96.2 |
| Table | 93 |
| Drinkware | 92.2 |
| Bottle | 88.2 |
| Black-and-white | 81.9 |
| Flash photography | 80.9 |
| Font | 80.1 |
| Tints and shades | 77.3 |
| Art | 73 |
| Monochrome photography | 72.8 |
| Darkness | 72.3 |
| Event | 70.8 |
| Serveware | 70.6 |
| Monochrome | 68.7 |
| Room | 66.1 |
| Stock photography | 65.7 |
| Glass bottle | 64.6 |
| Visual arts | 64.5 |
| Still life photography | 63.9 |
| Sitting | 63.4 |

Microsoft

created on 2022-01-08

| Tag | Confidence |
|---|---|
| text | 98.3 |
| person | 81.1 |
| black and white | 72.7 |
| human face | 61.7 |
| laptop | 61 |
| clothing | 60.6 |
| man | 50.3 |
| dark | 39.8 |

Color Analysis

Face analysis

Amazon

Microsoft

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 35-43 |
| Gender | Male, 99.9% |
| Sad | 96.6% |
| Calm | 1.5% |
| Angry | 0.9% |
| Confused | 0.6% |
| Disgusted | 0.2% |
| Fear | 0.1% |
| Surprised | 0.1% |
| Happy | 0% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 41-49 |
| Gender | Male, 99.9% |
| Calm | 97.7% |
| Sad | 1.9% |
| Fear | 0.1% |
| Angry | 0.1% |
| Disgusted | 0.1% |
| Surprised | 0% |
| Confused | 0% |
| Happy | 0% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 31-41 |
| Gender | Male, 99.9% |
| Calm | 73% |
| Angry | 12.5% |
| Sad | 7.5% |
| Fear | 3% |
| Confused | 1.9% |
| Disgusted | 0.9% |
| Surprised | 0.7% |
| Happy | 0.4% |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 24 |
| Gender | Male |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 82.5% |
| food drinks | 13.4% |
| people portraits | 2% |

Captions

Microsoft

created on 2022-01-08

| Caption | Confidence |
|---|---|
| a group of people sitting at a table | 88.2% |
| a group of people sitting around a table | 87.7% |
| a group of people sitting at a table in a dark room | 86.5% |

Text analysis

Amazon

YAMAHA

WAT

TOO WAT

2/45

TOO

VAMAHA

TOD WAT

VAMAHA

TOD

WAT