Machine Generated Data

Tags

Amazon

created on 2022-01-08

| Tag | Confidence |
| --- | --- |
| Person | 99.6 |
| Human | 99.6 |
| Person | 99.2 |
| Monitor | 98.1 |
| Electronics | 98.1 |
| Display | 98.1 |
| Screen | 98.1 |
| Interior Design | 97.9 |
| Indoors | 97.9 |
| Person | 97.8 |
| Person | 97.2 |
| Person | 89.7 |
| Tie | 87.6 |
| Accessories | 87.6 |
| Accessory | 87.6 |
| Person | 74.3 |
| Person | 65.9 |
| Room | 57.2 |
| X-Ray | 56.5 |
| Ct Scan | 56.5 |
| Medical Imaging X-Ray Film | 56.5 |
| Theater | 56.2 |

Clarifai

created on 2023-10-25

Imagga

created on 2022-01-08

| Tag | Confidence |
| --- | --- |
| television | 92.4 |
| monitor | 87.3 |
| electronic equipment | 57.5 |
| equipment | 53.3 |
| broadcasting | 44.3 |
| telecommunication | 34 |
| telecommunication system | 27.5 |
| screen | 22.4 |
| medium | 22 |
| liquid crystal display | 21.9 |
| computer | 21.2 |
| retro | 20.5 |
| black | 20.4 |
| technology | 20 |
| old | 19.5 |
| digital | 17.8 |
| vintage | 15.7 |
| object | 14.7 |
| electronic | 14 |
| film | 14 |
| display | 13.7 |
| frame | 13.3 |
| design | 12.9 |
| entertainment | 12.9 |
| business | 12.7 |
| space | 12.4 |
| grunge | 11.9 |
| communication | 11.7 |
| noise | 11.7 |
| art | 11.7 |
| media | 11.4 |
| text | 11.3 |
| finance | 11 |
| border | 10.8 |
| close | 10.8 |
| collage | 10.6 |
| antique | 10.4 |
| blank | 10.3 |
| dollar | 10.2 |
| closeup | 10.1 |
| rough | 10 |
| information | 9.7 |
| movie | 9.7 |
| audio | 9.6 |
| grungy | 9.5 |
| laptop | 9.2 |
| hand | 9.1 |
| dirty | 9 |
| texture | 9 |
| music | 9 |
| video | 8.7 |
| electronics | 8.5 |
| money | 8.5 |
| modern | 8.4 |
| network | 8.3 |
| banking | 8.3 |
| speed | 8.2 |
| data | 8.2 |
| symbol | 8.1 |
| bank | 8.1 |
| detail | 8 |
| graphic | 8 |
| your | 7.7 |
| damaged | 7.6 |
| web | 7.6 |
| tech | 7.6 |
| sign | 7.5 |
| sound | 7.5 |
| cash | 7.3 |
| negative | 7.3 |
| global | 7.3 |
| office | 7.2 |
| paper | 7 |

Google

created on 2022-01-08

| Tag | Confidence |
| --- | --- |
| Television set | 84.6 |
| Font | 83.7 |
| Gadget | 81.3 |
| Display device | 77.6 |
| Output device | 76.7 |
| Electronic device | 75.7 |
| Multimedia | 75.1 |
| Flat panel display | 75 |
| Rectangle | 73.7 |
| Communication Device | 72.5 |
| Event | 68.2 |
| Art | 62.7 |
| Led-backlit lcd display | 61.3 |
| Room | 60.6 |
| Projector accessory | 59.2 |
| Media | 58.2 |
| Screenshot | 57.2 |
| Television program | 57 |
| Monochrome photography | 56.8 |
| Tablet computer | 56.4 |

Microsoft

created on 2022-01-08

| Tag | Confidence |
| --- | --- |
| text | 99.4 |
| screenshot | 98.3 |
| indoor | 96.4 |
| microwave | 93.6 |
| oven | 77.1 |
| television | 74 |
| kitchen appliance | 11.8 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

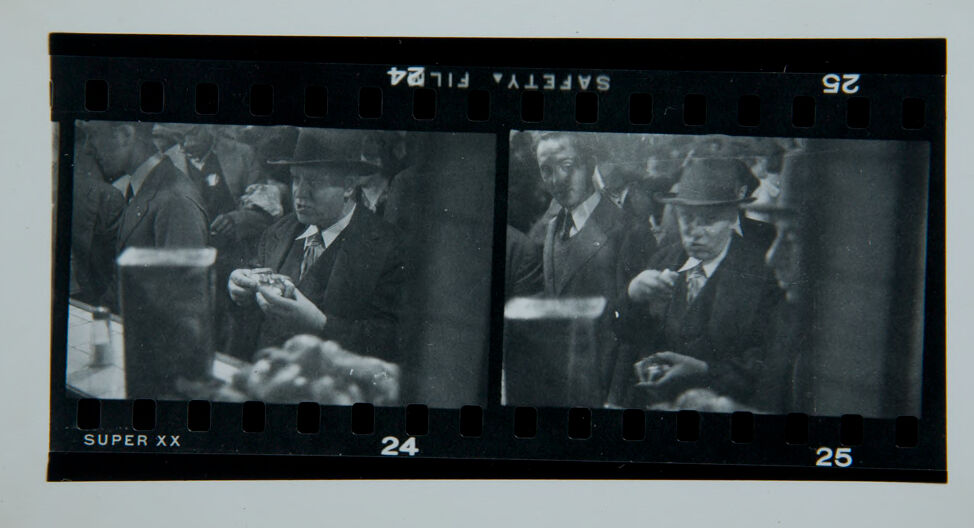

| Attribute | Value |
| --- | --- |
| Age | 49-57 |
| Gender | Male, 99.8% |
| Calm | 98.9% |
| Sad | 0.8% |
| Surprised | 0.1% |
| Confused | 0.1% |
| Disgusted | 0.1% |
| Angry | 0% |
| Happy | 0% |
| Fear | 0% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 26-36 |
| Gender | Male, 99.5% |
| Calm | 98.6% |
| Sad | 0.7% |
| Surprised | 0.2% |
| Disgusted | 0.2% |
| Confused | 0.2% |
| Angry | 0.1% |
| Happy | 0.1% |
| Fear | 0.1% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 22-30 |
| Gender | Male, 97.7% |
| Sad | 78% |
| Calm | 17.7% |
| Confused | 1.9% |
| Happy | 0.8% |
| Fear | 0.6% |
| Surprised | 0.4% |
| Disgusted | 0.3% |
| Angry | 0.2% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 26-36 |
| Gender | Male, 77.2% |
| Calm | 99.3% |
| Sad | 0.5% |
| Surprised | 0% |
| Confused | 0% |
| Angry | 0% |
| Disgusted | 0% |
| Happy | 0% |
| Fear | 0% |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Possible |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Possible |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| text visuals | 87.1% |
| paintings art | 9.6% |
| interior objects | 1.9% |

Captions

Microsoft

created on 2022-01-08

| Caption | Confidence |
| --- | --- |
| a screen shot of a microwave oven | 39.6% |
| a screen shot of a microwave | 39.5% |
| a microwave oven | 32.2% |

Text analysis

Amazon

25

24

SUPER

SAFETY

SAFETY FIL24

FIL24

SUPER XX

XX

SAFETYA FIL24

25

SUPER XX

24

25

SAFETYA

FIL24

25

SUPER

XX

24