Machine Generated Data

Feature analysis

Amazon

AWS Rekognition

| Person | 77.1% |

Categories

Imagga

Created on 2019-07-06

| paintings art | 99.9% |

Captions

Microsoft

Created by unknown on 2019-07-06

| a close up of a book | 29.8% |

Clarifai

No captions written

Salesforce

Created by general-english-image-caption-blip-2 on 2025-06-29

a painting of a woman in a kimono and a statue

Created by general-english-image-caption-blip on 2025-05-03

a photograph of a woman in a kimono kimono kimono kimono kimono kimono

OpenAI GPT

Created by gpt-4 on 2024-12-10

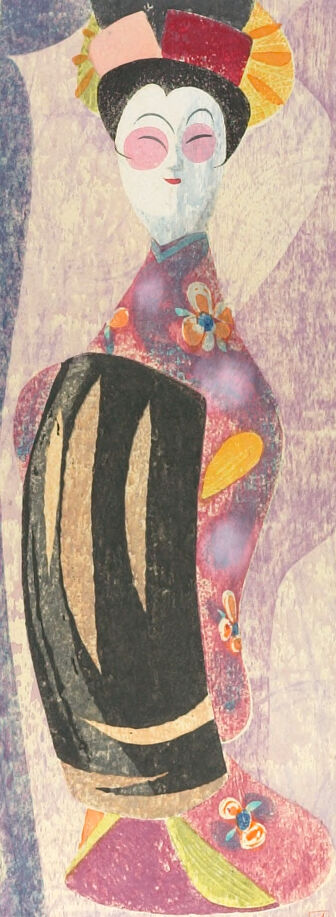

This image appears to be a colorful print or painting featuring a figure dressed in a kimono-style garment with distinct floral patterns and a mix of vibrant colors such as purple, pink, yellow, red, and black. The figure is adorned with a traditional hairpiece that includes a bow-like structure with yellow, red, and dark tones, which adds to the cultural attire's authenticity. The background contains muted colors and textures that vaguely resemble architectural forms or abstract shapes, contrasting with the figure's vivid colored clothing. The artwork has an overall soft and textured appearance, indicative of a printmaking technique or a style that uses multiple layers to create depth and interest.

Created by gpt-4o-2024-05-13 on 2024-12-10

The image appears to be a stylized artwork depicting a figure wearing traditional Japanese attire, likely a kimono, adorned with floral patterns and a decorative obi sash. The figure has an elaborate hairstyle accessorized with colorful ornaments. The background features abstract forms and muted colors. The artwork uses a combination of soft and bold textures to create a visually engaging composition.

Anthropic Claude

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-25

This appears to be a stylized illustration in what looks like a Japanese woodblock print style. The image shows a figure wearing traditional Japanese clothing (likely a kimono) in black and pink/red tones. The figure has a distinctive hairstyle with what appears to be ornamental decorations in orange and yellow. The face is drawn in a minimalist style with closed, curved eyes. The background features muted colors including greys, blues, and purples, creating a dreamy, atmospheric quality. The overall composition has a gentle, elegant feeling typical of traditional Japanese art.

Created by claude-3-5-sonnet-20241022 on 2024-12-28

This is a traditional Japanese art piece depicting a geisha figure in a stylized manner. The artwork features delicate watercolor techniques and shows a figure wearing a decorative kimono with floral patterns in pink, red, and purple tones. The kimono is paired with a black outer garment. The figure has a traditional hairstyle adorned with decorative elements in yellow and red. The face is painted in a classic stylized way with white makeup and closed eyes. In the background, there appears to be a shadow or silhouette in muted gray tones, creating an interesting compositional element. The overall color palette combines soft pastels with bold black accents, creating an elegant and traditional Japanese aesthetic.

Created by claude-3-haiku-20240307 on 2024-12-10

The image appears to depict an artistic representation of a Japanese geisha figure. The central character is a stylized female figure with exaggerated features, wearing a colorful and elaborate kimono with floral patterns. The figure has a distinctive hairstyle and makeup typical of traditional geisha attire. The background consists of abstract shapes and patterns in various shades of blue, pink, and gray, creating a dreamlike or surreal atmosphere around the central figure.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-12-10

The image is a painting of a woman in a traditional Japanese kimono, with a unique and stylized approach to art. The woman is depicted standing in front of a large, abstracted figure that resembles a pillar or a column. The background of the painting is a soft, muted color, which helps to create a sense of calmness and serenity.

The woman's kimono is a vibrant pink color with yellow and blue accents, and it features a floral pattern. She has a black obi around her waist, which adds a touch of elegance to her overall appearance. Her hair is styled in an updo, with a few loose strands framing her face.

The abstracted figure behind the woman is depicted in shades of gray and purple, and it appears to be made up of geometric shapes. The figure is not clearly defined, but it seems to be some kind of architectural element, possibly a column or a pillar.

Overall, the painting is a beautiful example of modern art, with its bold colors and abstract forms. The use of traditional Japanese motifs, such as the kimono and the obi, adds a sense of cultural depth and richness to the work. The abstracted figure in the background adds a sense of mystery and intrigue, inviting the viewer to interpret the painting in their own way.

The painting is signed by the artist, but the signature is not legible. The painting is dated 1925, which suggests that it was created during the early 20th century, a time of great change and innovation in the art world. The painting is likely a representation of the artist's fascination with traditional Japanese culture, as well as their experimentation with modern art techniques.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-10

The image depicts a stylized illustration of a geisha, characterized by her traditional Japanese attire and hairstyle. The geisha is positioned centrally in the frame, facing forward with her eyes closed, exuding a sense of serenity and elegance. Her hair is styled in an updo, adorned with a red and yellow obi, which adds a pop of color to the overall composition.

The geisha's kimono is a vibrant red hue, featuring intricate floral patterns that add depth and texture to the design. A black obi wraps around her waist, creating a striking contrast against the bold red of the kimono. Her sleeves are long and flowing, with a subtle sheen that catches the light.

To the left of the geisha, a tall, slender object is visible, although its exact nature is unclear. It could be a vase, a statue, or some other decorative element, but its purpose is not immediately apparent. The background of the image is a soft, muted color, with subtle texture and pattern that adds depth and visual interest to the overall design.

Overall, the image presents a captivating and stylized portrayal of a geisha, showcasing her traditional attire and hairstyle in a beautiful and elegant manner. The use of bold colors and intricate patterns creates a visually striking composition that draws the viewer's eye and invites closer inspection.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-02-26

The image is of a Japanese print featuring a woman standing in front of a column. The woman is wearing a traditional Japanese kimono with a flower pattern on the sleeves and a red hat. The column is a light color with a dark shadow on the side. The background is a mix of purple and blue colors. The image has a vintage look and feel to it.

Created by amazon.nova-pro-v1:0 on 2025-02-26

The image depicts a painting of a woman standing in front of a bamboo tree. The woman is wearing a traditional Japanese kimono with a floral design. She is also wearing a traditional Japanese hairstyle with a red ribbon on top. The woman is also wearing a traditional Japanese face paint with red and white colors. The bamboo tree is painted in a grayish color, and the background is painted in a purple color.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-14

Here is a description of the image:

The image is a stylized print featuring a geisha figure.

Composition:

- Central Figure: A geisha is the main focus. She's depicted in a traditional kimono, holding a large black object, possibly a fan or a decorative accessory.

- Background: Behind the geisha are abstract, softly rendered shapes and a large, vertical, cylindrical form, possibly a pillar or statue. The background has a textured, somewhat muted purple hue with soft, curved outlines.

- Color Palette: The artwork primarily uses pastel colors and muted tones. There are touches of red, pink, purple, and yellow on the geisha's attire and the background, along with black for contrast.

Stylistic Features:

- Simplified Forms: The artwork uses simplified shapes and forms, focusing on the essential elements of the geisha's form and clothing.

- Abstract Elements: The background and some of the details suggest an abstract approach, not fully representing realistic elements.

- Textured Look: The print appears to have a textured surface, suggesting it might be a woodcut or a similar printing technique.

- Flatness: The image avoids a strong sense of depth, giving it a flat, illustrative quality.

Overall Impression:

The image conveys a sense of elegance and stylized artistry, with a strong focus on the geisha and a blend of traditional and abstract elements. The pastel colors and soft lines give it a gentle, almost dreamlike feel.

Created by gemini-2.0-flash on 2025-05-14

The artwork depicts a geisha standing beside a stone lantern. The background has a light purple color with a patterned texture and large, faint shapes suggesting a landscape.

To the left, a stone lantern is painted in shades of white and gray, casting a subtle shadow. The geisha is dressed in an intricately designed kimono with floral patterns in orange, blue, and purple, with a wide black obi accented with tan stripes. Her face is painted with traditional white makeup, red lipstick, and red circles on her cheeks, and her eyes appear closed. She is adorned with a colorful headdress of pink, red, and yellow elements. The artwork has a print-like quality, with visible textures that add depth to the image.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-04

The image depicts a stylized artwork featuring a figure dressed in traditional attire, possibly inspired by East Asian culture. The figure has a white face with pink cheeks and is adorned with a black hairstyle that includes a red and yellow hair accessory. The clothing consists of a colorful, patterned robe with floral designs and a black sash around the waist. The figure stands in a relaxed pose with one hand resting on the hip.

In the background, there is a large, abstract shape that resembles a pillar or monument, rendered in muted tones of blue and gray. The overall color palette of the artwork includes soft purples, pinks, and blues, giving it a serene and somewhat dreamy quality. The style of the artwork suggests a blend of traditional and modern artistic techniques, with a focus on simplicity and elegance.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-08

The image is a stylized and abstract Japanese woodblock print. It features a traditional Japanese figure, possibly a geisha, adorned in vibrant, flowing attire with intricate patterns and colors. The figure is wearing an elaborate hairstyle with two large, dark hairpieces and a decorative headdress adorned with orange and yellow feathers. The background is abstract, with flowing patterns of purple and white, creating a dynamic and textured effect. The overall style is reminiscent of early 20th-century Japanese woodblock prints, with a focus on bold lines, vivid colors, and a sense of movement. The figure is depicted with a serene and elegant pose, standing upright, which adds to the classical and artistic nature of the piece.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-08

This is an artistic illustration of a person dressed in a traditional Japanese kimono, rendered in a stylized and colorful manner. The kimono is adorned with various motifs, including flowers and abstract shapes. The person is wearing traditional Japanese shoes and a small hat with a fan-like decoration. The background features abstract shapes and muted colors, creating a textured and layered look. The overall style of the illustration is reminiscent of traditional Japanese woodblock prints.