Machine Generated Data

Tags

Amazon

created on 2022-01-08

Clarifai

created on 2023-10-25

Imagga

created on 2022-01-08

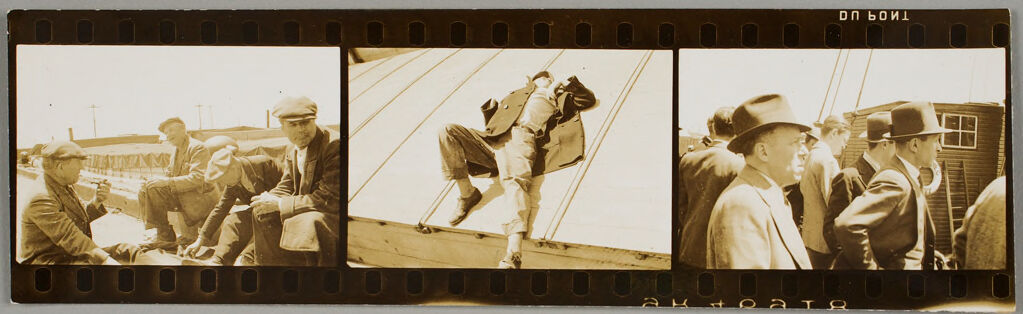

| Tag | Confidence |
|---|---|
| stringed instrument | 56.8 |
| musical instrument | 49.3 |
| device | 32.2 |
| dulcimer | 29 |
| vessel | 28.4 |
| schooner | 25.3 |
| old | 20.2 |
| sailing vessel | 18.7 |
| wood | 18.3 |
| construction | 18 |
| architecture | 16.4 |
| sea | 15.6 |
| ship | 15.2 |
| wooden | 14.9 |
| sky | 14.7 |
| craft | 14.3 |
| boat | 14.3 |
| psaltery | 13.9 |
| travel | 12.7 |
| equipment | 12.4 |
| building | 12.1 |
| house | 11.7 |
| ocean | 11.6 |
| home | 11.2 |
| industry | 11.1 |
| work | 10.3 |
| religion | 9.9 |
| ancient | 9.5 |
| roof | 9 |
| steel | 8.8 |
| wall | 8.5 |
| culture | 8.5 |
| structure | 8.5 |
| religious | 8.4 |
| coast | 8.1 |
| transportation | 8.1 |
| carpenter | 8 |
| metal | 8 |
| builder | 7.9 |
| holiday | 7.9 |
| marimba | 7.8 |
| harbor | 7.7 |
| god | 7.6 |
| beach | 7.6 |
| percussion instrument | 7.5 |
| art | 7.5 |
| traditional | 7.5 |
| tourism | 7.4 |
| church | 7.4 |
| rope | 7.4 |
| water | 7.3 |
| yellow | 7.3 |
| industrial | 7.3 |
| detail | 7.2 |
| vehicle | 7.1 |
| machine | 7.1 |
| summer | 7.1 |

Google

created on 2022-01-08

| Tag | Confidence |
|---|---|
| Brown | 98 |
| Photograph | 94.2 |
| Rectangle | 83.4 |
| Art | 77.4 |
| Tints and shades | 77 |
| Wood | 74.6 |
| Snapshot | 74.3 |
| Vintage clothing | 67.7 |
| Font | 67.4 |
| Visual arts | 64.5 |
| Stock photography | 64.3 |
| Hat | 63.9 |
| Room | 62.4 |
| Display device | 60.2 |
| Square | 58.6 |
| History | 58.6 |
| Photographic paper | 54.4 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 40-48 |
| Gender | Male, 99.8% |
| Calm | 32.2% |
| Surprised | 24.1% |
| Disgusted | 14.7% |
| Confused | 10% |
| Angry | 8.8% |
| Fear | 4% |
| Sad | 3.4% |
| Happy | 2.7% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 51-59 |
| Gender | Male, 99.3% |
| Calm | 90.3% |
| Confused | 3.8% |
| Sad | 3.2% |
| Angry | 1.1% |
| Surprised | 0.6% |
| Disgusted | 0.4% |
| Fear | 0.4% |
| Happy | 0.3% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 23-31 |
| Gender | Male, 89.3% |
| Calm | 73.4% |
| Happy | 22% |
| Surprised | 1.8% |
| Angry | 0.8% |
| Sad | 0.7% |
| Disgusted | 0.6% |
| Confused | 0.4% |
| Fear | 0.3% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 45-51 |
| Gender | Male, 98.6% |
| Calm | 74.8% |
| Disgusted | 11.6% |
| Surprised | 3.5% |
| Confused | 2.8% |
| Angry | 2.8% |
| Sad | 1.9% |
| Happy | 1.4% |
| Fear | 1.2% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 20-28 |
| Gender | Female, 54.5% |
| Calm | 99.2% |
| Sad | 0.2% |
| Disgusted | 0.1% |
| Happy | 0.1% |
| Fear | 0.1% |
| Angry | 0% |
| Surprised | 0% |
| Confused | 0% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 53-61 |
| Gender | Female, 79.1% |
| Sad | 96.1% |
| Calm | 1% |
| Confused | 0.8% |
| Angry | 0.7% |
| Disgusted | 0.6% |
| Happy | 0.3% |
| Fear | 0.3% |
| Surprised | 0.2% |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Likely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Unlikely |
| Blurred | Very unlikely |

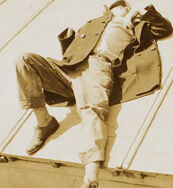

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very likely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very likely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| pets animals | 99.5% |

Captions

Microsoft

created on 2022-01-08

| Caption | Confidence |
|---|---|
| a group of people sitting at a train station | 33.2% |
| a group of people in front of a mirror posing for the camera | 33.1% |
| a man standing in front of a mirror posing for the camera | 33% |

Text analysis

Amazon

DO

DO BONT

BONT

00 0 00 000

00

0

000