Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 22-34 |
| Gender | Male, 95.3% |
| Happy | 0.1% |
| Calm | 1.9% |
| Sad | 93.9% |
| Angry | 2% |
| Disgusted | 0.1% |
| Surprised | 0.1% |
| Fear | 1.6% |
| Confused | 0.4% |

Feature analysis

Amazon

AWS Rekognition

| Person | 84.9% |

Categories

Imagga

Created on 2019-10-29

| Category | Confidence |
| --- | --- |
| interior objects | 82.6% |
| paintings art | 15.1% |
| food drinks | 1.7% |

Captions

Microsoft

Created by unknown on 2019-10-29

| an orange and black text | 44% |

Salesforce

Created by general-english-image-caption-blip on 2025-05-20

a photograph of a man sitting on a chair with a cell phone

OpenAI GPT

Created by gpt-4 on 2025-01-29

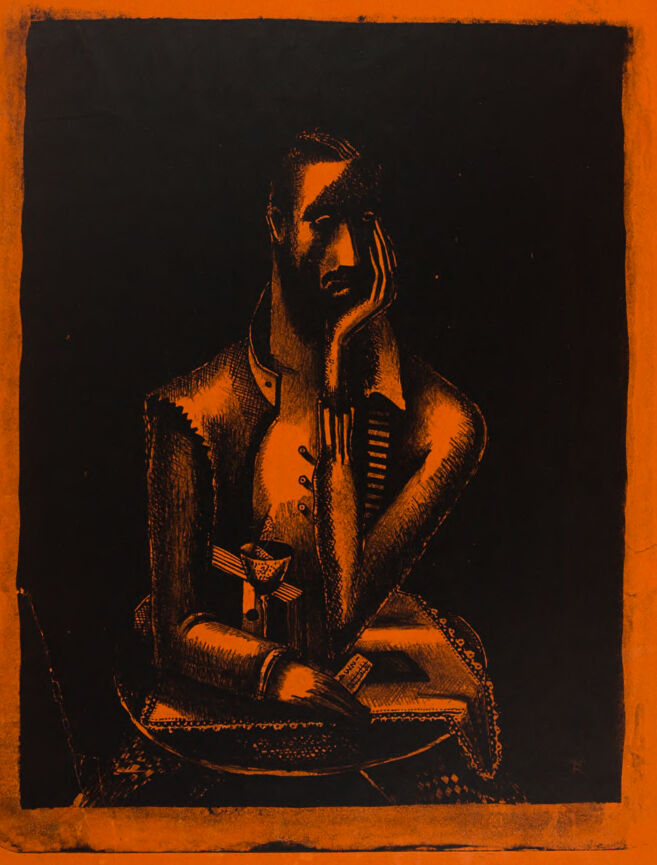

This is an artwork with a predominantly orange and black color scheme. It features a figure sitting cross-legged with their hands resting on their knees. The style appears to be a mix of abstract and figurative, with emphasis on dark lines to outline the figure and patterns on what seems to be the clothing. The background is mostly a solid orange with a large black border framing the central image, giving the piece a bold, contrasting look.

Created by gpt-4o-2024-05-13 on 2025-01-29

The image depicts an abstract artwork featuring a seated figure. The figure has a contemplative posture, with one hand resting near the mouth and the other arm crossed over the torso holding an object that resembles a windmill or pinwheel. The overall color palette comprises a dark central background contrasted by a vivid orange border. The figure's clothing is detailed with textures and shading, suggesting a uniform or a structured outfit, possibly with buttons and a collar. The style of the artwork hints at a mixture of surrealism and abstraction, creating a dramatic visual impact.

Anthropic Claude

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-28

This is an artistic print or lithograph with a striking orange and black color scheme. The artwork depicts a figure in contemplative pose, seated and wearing what appears to be a formal suit or jacket. The composition is rendered in a dramatic contrast, with the figure emerging from a dark background through orange highlights. The style appears to be from the early to mid-20th century, with strong graphic qualities typical of printmaking techniques. The figure's pose suggests deep thought or reflection, with one hand positioned near their face. The overall mood is somber and introspective, enhanced by the limited color palette and bold contrasts.

Created by claude-3-5-sonnet-20241022 on 2025-01-29

This appears to be an artistic print in orange and black tones. It shows a figure seated in a contemplative pose, wearing what appears to be a formal suit or jacket. The composition has a dramatic contrast between the deep black background and the orange highlights that define the figure's form. The style appears to be a woodcut or similar printmaking technique, with bold lines and strong contrasts. The orange border around the black central image adds to the striking visual impact of the piece. The figure's posture suggests deep thought or reflection, with their head resting thoughtfully on their hand.

Created by claude-3-opus-20240229 on 2025-01-29

The image shows a black and white portrait sketch or painting of a seated man against an orange background. The man has long dark hair and is wearing what appears to be a military-style jacket with decorative elements and buttons. He has a serious, contemplative expression on his face as he looks off to the side. The portrait has a rough, textured style with visible brush or pen strokes, giving it an artistic, hand-drawn quality. The bold orange background provides a striking contrast to the dark, monochromatic rendering of the man.

Created by claude-3-haiku-20240307 on 2025-01-29

The image appears to be a portrait depicting a person with a serious expression. The person is shown in a dark, shadowy setting surrounded by an orange background. The figure is wearing a detailed, ornate outfit with intricate patterns and designs. The overall composition and use of light and shadow create a moody, dramatic atmosphere.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-03-14

The image is a portrait of a man, likely a self-portrait, created by Marc Chagall in 1914. The man is depicted sitting, with his head resting on his hand, and he is wearing a suit jacket and a tie. He is holding a small object in his hand, possibly a book or a letter. The background of the painting is dark, with the man's face and clothing standing out in contrast. The overall mood of the painting is contemplative and introspective, with the man's pose and expression suggesting a sense of quiet reflection.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-03-14

This image is a striking black-and-white illustration of a man, set against an orange background. The man is depicted in a contemplative pose, with his right hand resting on his face and his left arm crossed over his body. He wears a long-sleeved shirt with buttons down the front, and his attire appears to be from the 18th or 19th century.

The illustration is rendered in a bold, graphic style, with thick lines and expressive shading that gives the image a sense of depth and texture. The orange background adds a pop of color and energy to the overall composition, creating a visually appealing contrast with the monochromatic illustration.

Overall, this image is a captivating and thought-provoking work of art that invites the viewer to reflect on the subject's emotions and intentions.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-01-29

The image is a black and orange-colored print of a person sitting on a chair. The person is wearing a shirt and a jacket. The person's hand is on his face, and he is looking to the left. The person is holding a cup in his right hand. The person's shadow is cast on the wall behind him. The person is sitting on a chair, and the chair's shadow is cast on the wall behind him.

Created by amazon.nova-lite-v1:0 on 2025-01-29

The image is a monochromatic drawing of a person in a seated position, possibly a man, with his right hand on his face. The person is wearing a black outfit with a white inner shirt and a white ribbon tied around his neck. The person is holding a book in his left hand. The drawing is in a square shape with a black border. The background is black, and the drawing is in a dark shade of orange.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-02

Here's a description of the image:

Overall Impression: The image is a stylized, somewhat somber portrait with a striking color scheme. It features a man in what appears to be a seated pose, rendered in a strong contrast of black and orange.

Composition & Elements:

- Subject: The central figure is a man with a thoughtful or melancholic expression. He is seated, with his head resting on his hand.

- Style: The artwork has a somewhat angular, stylized quality, with the use of cross-hatching or similar techniques to define form and texture.

- Color: The primary colors are black and a vibrant, almost neon orange. The black is used for the figure and the background, while the orange is used for an outer border.

- Background: The background is a solid black, which emphasizes the subject and creates a strong sense of depth.

Details to Note:

- The figure appears to be wearing a collared shirt or jacket, with some details of buttons and clothing visible.

- There is a small object on the figure's lap.

- The image has a certain vintage or print-like quality, suggesting it might be a lithograph or similar printmaking technique.

Created by gemini-2.0-flash on 2025-05-02

The artwork presents a monochromatic image featuring a man, predominantly in a shade of orange or dark yellow, set against a stark black background. The whole picture is framed in a thin, distressed orange border.

The man is depicted sitting with one arm resting on his knee and his hand supporting his chin, suggesting a pose of contemplation or weariness. His clothing appears to be somewhat formal, hinting at a jacket or coat.

There are other elements in the composition, such as a goblet-like object and a rectangular item, which are placed around the man, adding layers to the narrative of the piece. The overall aesthetic leans towards printmaking techniques, such as lithography or serigraphy, as evidenced by the texture and the solid blocks of color.

The artwork conveys a sense of moodiness and introspection due to the man's posture and the contrasting color scheme, with the orange providing a stark relief against the encompassing black.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-07

The image depicts a stylized, abstract artwork featuring a figure that appears to be a person sitting in a contemplative pose. The figure is rendered in a monochromatic orange color scheme against a black background, giving it a striking and dramatic appearance. The figure has a somewhat elongated form, with exaggerated features and a sense of depth created by the use of shading and lines. The person seems to be seated with their legs crossed and hands resting on their knees, evoking a sense of meditation or introspection. The artwork is framed by an orange border, which complements the central figure and enhances the overall visual impact. The style of the artwork suggests influences from modern or expressionist art movements.