Machine-Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Estimate |
| --- | --- |
| Age | 20-38 |
| Gender | Male, 66.4% |
| Angry | 13.7% |
| Sad | 40.8% |
| Calm | 13.9% |
| Disgusted | 7.8% |
| Happy | 11.5% |
| Surprised | 5% |
| Confused | 7.2% |

Feature analysis

Amazon

| Label | Confidence |
| --- | --- |
| Person | 98.2% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 83.6% |
| interior objects | 15.6% |

Captions

Microsoft

Created on 2019-07-05

| Caption | Confidence |
| --- | --- |
| a close up of a logo | 61.7% |
| a close up of a piece of paper | 49.7% |
| a piece of paper | 49.6% |

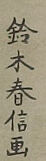

Azure OpenAI

Created by gpt-4 on 2024-12-08

The image depicts a traditional Japanese scene, where an individual is portrayed performing a task or perhaps participating in a tea-making ritual. The figure is dressed in a long, flowing kimono adorned with patterns and is holding an object that might be tied to the activity they are engaged in. Next to the figure is a low table on which rests a plant in full bloom, indicating an attention to nature or the changing seasons. In the background, a stylized representation of smoke or clouds carries Japanese characters, which may convey poetic text or dialogue related to the scene. To the right, an item resembling a shelf or a cupboard hosts various objects, possibly utensils or wares associated with the individual's activities. The setting reflects the simple and elegant aesthetic that is characteristic of traditional Japanese art, aiming to capture not only the visual but also the philosophical essence of the moment. The artwork likely dates back to a period when such scenes were common in artistic representations of Japanese culture and lifestyle.

Created by gpt-4o-2024-05-13 on 2024-12-08

This image appears to be a traditional Japanese woodblock print, likely from the Edo period. It features a figure wearing a kimono with intricate patterns and layers. The figure is holding a pipe, and there are various household items around, including a teapot and a set of drawers with some containers. There is a bonsai tree in a pot to the left. The setting suggests a peaceful domestic scene. The background includes some text, possibly a poem or description, styled in classic Japanese calligraphy.

Anthropic Claude

Created by claude-3-haiku-20240307 on 2024-12-08

The image depicts a woman dressed in traditional Japanese attire, likely a geisha or courtier, standing in what appears to be a domestic setting. She is holding a cup or vessel in one hand and appears to be looking off to the side. The background features a landscape with mountains and clouds, as well as some shelving or cabinetry with various objects. The overall style and aesthetic suggest this is a Japanese ukiyo-e style woodblock print or painting.

Created by claude-3-5-sonnet-20241022 on 2024-12-08

This is a traditional Japanese woodblock print (ukiyo-e) depicting a scene of domestic life. The artwork shows a figure in traditional Japanese dress wearing a brown and black kimono with flowing lines, standing near a potted flowering plant with red blossoms. In the background, there appears to be a tea preparation area with a teapot and what looks like a storage cabinet or shelf in red. The composition includes Japanese calligraphy at the top of the image and an artist's signature in the lower left corner. The style is characteristic of the Edo period, with delicate lines and subtle colors. The scene suggests a peaceful moment in a Japanese household, with attention to detail in both the interior setting and the traditional clothing depicted.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-12-08

The image is a traditional Japanese woodblock print, likely from the 18th or 19th century, featuring a woman in traditional attire standing in front of a table with various objects on it.

* The woman is dressed in a kimono with a red obi and a black-and-white striped sash, and her hair is styled in an updo.
* She holds a fan in her right hand and appears to be looking up at something outside the frame.
* Her facial expression is serene and contemplative.
* Behind the woman, there is a low table with several objects on it, including:
  * A teapot and cup
  * A small potted plant
  * A box or container
  * A piece of paper or a scroll
* The background of the print features a subtle landscape, with hills and buildings visible in the distance.
* The sky above is cloudy and overcast, adding to the overall sense of serenity and calmness in the scene.
* The print is signed by the artist in the bottom-left corner, although the signature is not legible.
* The print is also dated, but the date is not visible in the image provided.

Overall, the image presents a peaceful and intimate scene, capturing a moment in time of a woman engaged in a quiet activity. The use of muted colors and subtle details creates a sense of calmness and serenity, inviting the viewer to reflect on the beauty of everyday moments.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-08

This image is a Japanese woodblock print of a woman in traditional clothing, likely from the Edo period (1603-1868). The woman stands in front of a table with a teapot and other items, suggesting she is preparing tea. Her attire includes a kimono, obi, and geta sandals, which were common for women during this time. The background features a subtle design, possibly representing a garden or outdoor setting. The overall atmosphere of the image conveys a sense of serenity and tranquility, characteristic of Japanese art from this era. The use of muted colors and delicate lines adds to the peaceful ambiance, inviting the viewer to appreciate the beauty of everyday life in ancient Japan.