Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 49-57 |
| Gender | Male, 100% |
| Calm | 85.6% |
| Angry | 9% |
| Confused | 2.6% |
| Fear | 0.8% |
| Surprised | 0.7% |
| Sad | 0.7% |
| Happy | 0.4% |
| Disgusted | 0.2% |

Feature analysis

Amazon

| Feature | Confidence |
| --- | --- |
| Person | 99.5% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| events parties | 67.9% |
| people portraits | 21.1% |
| streetview architecture | 7.3% |
| paintings art | 2.2% |

Captions

Microsoft

Created by unknown on 2022-01-22

| Caption | Confidence |
| --- | --- |
| Ron Nyman et al. sitting on a bench | 54.5% |
| Ron Nyman et al. looking at the camera | 54.4% |
| Ron Nyman et al. sitting and looking at the camera | 54.3% |

Clarifai

Created by general-english-image-caption-blip on 2025-05-22

| Caption | Confidence |
| --- | --- |
| a photograph of a woman in a dress and a man in a suit and tie | -100% |

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-05-25

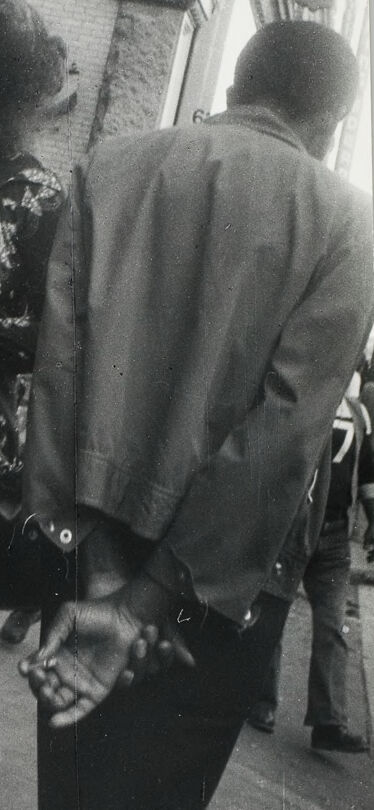

The image is a black-and-white photograph of a woman and a man in an urban setting. The woman, positioned on the left side of the image, is wearing a dark floral dress with a matching jacket, paired with black tights and high heels. She has a watch on her left wrist and a purse in her right hand, which is resting on her hip. Her hair is styled in an afro, and she appears to be looking at the man.

The man, standing on the right side of the image, is facing away from the camera. He is dressed in a dark jacket and dark pants, with his hands clasped behind his back. His head is turned to the left, and he seems to be engaged in conversation with someone outside the frame.

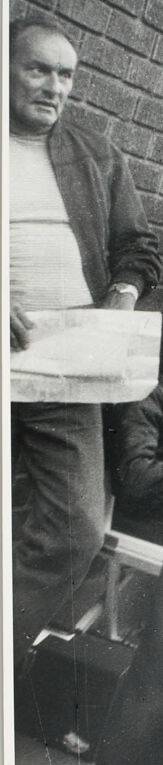

In the background, several individuals are visible, including a man holding a newspaper or document, a person sitting on a bench, and another individual standing nearby. The scene is set against a backdrop of a brick wall and a building, suggesting an urban environment.

Overall, the image captures a moment of everyday life in an urban setting, with the woman and man appearing to be engaged in a casual conversation or interaction.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-05-25

This image is a black and white photograph of a woman dancing in the street, surrounded by onlookers. The woman is wearing a short dress with a floral pattern, black tights, and a watch on her left wrist. She has a large afro hairstyle and is holding a purse in her right hand. Her mouth is open, and she appears to be singing or shouting.

In the background, there are several people watching her dance. One man is standing against a brick wall, holding a piece of paper, while another man is standing with his back to the camera, wearing a jacket and pants. There are also other people visible in the background, but they are not clearly defined.

The overall atmosphere of the image suggests that the woman is performing for an audience, possibly as part of a street performance or celebration. The onlookers seem to be enjoying the show, and the woman's energetic and expressive dance moves suggest that she is fully engaged in the performance.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-05-24

A black-and-white photo of people standing outside a building. A woman with curly hair is standing in front of a man wearing a jacket and pants. The man is holding his hands together in front of him. Behind them, a man is holding a piece of paper, and a woman is sitting on a bench. A person is standing on the right side, and a brick wall is behind them.

Created by amazon.nova-lite-v1:0 on 2025-05-24

The image shows a black-and-white photograph of a street scene. The photograph depicts a woman with curly hair standing in the middle of the street. She is wearing a short dress and stockings. A man wearing a hat is standing behind her, and he is holding a newspaper. Another man is standing on the left side of the image, holding a newspaper and wearing a jacket. In the background, there is a brick wall with a sign that reads "TEL."

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-17

Here's a description of the image:

Overall Impression:

The image appears to be a candid street photograph, likely taken in an urban environment. It's a black and white shot, which gives it a vintage feel. The photograph features several people, with the main focus seemingly on a woman in a floral dress.

Specific Elements:

- Main Figure: A woman is positioned prominently in the center. She has a large afro and is wearing a short, dark dress with a floral print. She's blowing a bubblegum bubble. She also wears a wristwatch and carries a large handbag. She has her legs in a semi-strutting stance.

- Surrounding People: There are other people visible in the background:
  - A man on the left, holding what seems to be a newspaper or a document.
  - A man in a jacket facing away from the camera on the right.
  - Another man sitting in a chair behind the woman on the left.

- Environment: The setting seems to be a city street. A brick wall is visible, as well as a sign that has "TEL" on it.

- Atmosphere: The candid nature of the photo creates a sense of movement and a snapshot of a moment in time. It has an energy that comes with street photography.

Style and Possible Themes:

The photograph's composition, the subjects, and the black and white style suggest it may be a documentary or street photography piece. Possible themes could include urban life, capturing moments, social observation, or a focus on fashion and style.

Created by gemini-2.0-flash on 2025-05-17

Here is a description of the image:

This black and white photo presents a street scene with several individuals. The main focus is a Black woman with a distinctive afro, wearing a floral-patterned mini dress, dark tights, and what appear to be heels. She has a round object in her mouth, possibly a piece of gum, and is wearing a watch on her left wrist. A black shoulder bag hangs from her shoulder. She is positioned in the center of the frame, slightly off-center to the left.

To the left of the woman, an older man is standing. He is holding a newspaper and wearing a sweater over a t-shirt and pants. Behind him, a younger individual with glasses is seated on a folding chair.

On the right side of the frame, a Black man stands with his back to the camera. His hands are clasped behind his back. He is wearing a jacket and pants. Additional people can be seen further back on the right side of the frame, suggesting a crowd or street scene.

The background consists of a brick building to the left and a building with a "HOTEL" sign visible to the right. The overall atmosphere of the photo suggests a street scene captured in a candid, documentary style.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-05-26

The image is a black-and-white photograph capturing a street scene. The central figure is a person wearing a dark floral dress, sheer tights, and heels, with a large afro hairstyle. This individual appears to be in motion, possibly walking or dancing, and is positioned between two other people. The person on the left is wearing a light-colored shirt, dark pants, and a jacket, and is holding a white box. The person on the right is dressed in a suit and appears to be walking away from the camera.

In the background, there are additional people, one of whom is partially visible and wearing a jacket and pants. The setting appears to be an urban environment, with a brick wall and a door visible in the background. The overall atmosphere suggests a lively, possibly festive or busy street scene. The photograph has a vintage feel, likely from the mid-to-late 20th century, given the style of clothing and the quality of the image.