Machine Generated Data

Face analysis

AWS Rekognition

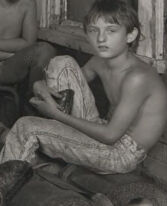

| Attribute | Value |
|---|---|
| Age | 14-22 |
| Gender | Male, 95.7% |
| Sad | 45.7% |
| Fear | 29.9% |
| Calm | 22.4% |
| Surprised | 0.7% |
| Angry | 0.4% |
| Disgusted | 0.4% |
| Confused | 0.3% |
| Happy | 0.1% |

Feature analysis

AWS Rekognition

| Feature | Confidence |
|---|---|
| Person | 99.7% |

Categories

Imagga

Created on 2022-01-08

| Category | Confidence |
|---|---|
| paintings art | 87.1% |
| people portraits | 11.9% |

Captions

Microsoft

Created by unknown on 2022-01-08

| Caption | Confidence |
|---|---|
| a vintage photo of a group of people posing for the camera | 96.5% |
| a vintage photo of a group of people posing for a picture | 96.4% |
| a group of people posing for a photo | 96.3% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-13

a group of boys and girls sit on a wooden bench in front of a house.

Salesforce

Created by general-english-image-caption-blip on 2025-05-22

a photograph of a group of people sitting on a porch

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-14

This black-and-white photo features a large group of people gathered on a rustic porch. The setting appears to be rural, surrounded by dense trees and hills in the background. The porch is weathered, with peeling paint on the wooden columns and boards. Various objects are scattered around, including buckets and rolled rugs. Some individuals are sitting, while others are leaning against or climbing on the porch structure. The scene exudes a casual, everyday atmosphere, suggesting a snapshot of family life or community interaction in a rural environment.

Created by gpt-4o-2024-08-06 on 2025-06-14

The image shows a group of people, likely a family, gathered on the porch of a rustic, weathered house located in a mountainous or forested area. Several individuals are standing or sitting on the porch, which shows signs of wear and tear. There are children in various poses, some holding onto porch columns and others sitting on the porch floor. The vegetation in the distance suggests a dense forest or natural landscape. The setting conveys a rural or backwoods atmosphere, with a focus on family life within a simple, rugged environment. Hanging laundry and household items add to the lived-in ambiance of the porch area.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-19

The image appears to be a black and white photograph depicting a group of people, primarily children, gathered outside of a rustic-looking building. The children have a range of expressions, from serious to playful, and are dressed in casual, simple clothing. An adult woman is also present, holding a young child. The background suggests a rural or remote setting, with trees and foliage visible. The overall scene conveys a sense of poverty or hardship, with the dilapidated building and the children's modest attire. The image seems to capture a moment in the lives of this group, providing a glimpse into their living conditions and daily experiences.

Created by us.anthropic.claude-3-opus-20240229-v1:0 on 2025-06-19

The black and white photograph shows a group of children and adults gathered on the porch of a wooden house surrounded by trees and foliage. The children range in age from babies to young adolescents. Some are standing while others are sitting on the porch steps. The adults, a woman and a man, are positioned among the children. The woman is holding an infant while leaning out a window, and the man is standing on a ladder leaning against the house. The scene appears to be from a rural area, possibly depicting an extended family or community living together in modest circumstances.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-19

This is a black and white photograph that appears to be taken in a rural setting, likely in Appalachia or a similar mountainous region. The image shows several children and an older woman on what appears to be the porch of a modest wooden house or cabin. The composition includes some children in the foreground and others arranged around a porch post, with some sitting on what looks like the porch floor. The setting suggests a rustic, possibly impoverished environment, with foliage visible in the background. The photograph has a documentary-style quality to it, capturing what appears to be an authentic moment of rural American life. The subjects are dressed simply, with some wearing tank tops and casual clothing appropriate for warm weather. The image has a certain poignancy and depth that was characteristic of documentary photography from the mid-20th century.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-09

The image is a black-and-white photograph of a woman and nine children on the porch of a small, rustic house in the mountains.

- The woman sits in the center of the porch, holding a baby on her lap. She has shoulder-length hair and wears a light-colored shirt and dark pants.

- The children are scattered around the porch, some sitting, some standing, and one climbing up the side of the house.

- The house is made of wood and has a small window on the right side. The porch is weathered and worn, with a few pieces of furniture scattered about.

- In the background, trees and bushes can be seen, as well as a power line running across the top of the image.

- The overall atmosphere of the image is one of warmth and family, with the woman and children gathered together on the porch of their home in the mountains.

The image captures a moment of everyday life in a rural community, highlighting the importance of family and community in these settings.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-09

The image is a black-and-white photograph of a woman and eight children, likely her grandchildren, posing in front of a rural home. The woman sits on the porch with a baby in her lap, while the children are arranged around her, some standing and others sitting. The scene appears to be set in a rural area, with trees and bushes visible in the background.

The overall atmosphere of the image suggests a sense of warmth and closeness among the family members, as they gather together for a photo opportunity. The use of black and white photography adds a timeless quality to the image, making it feel like a nostalgic snapshot from the past.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-08

The image depicts a black-and-white photograph of a family gathered on the porch of a house. The family consists of several children and adults. The adults are seated on the porch, while the children are standing around them. The woman in the center is holding a baby in her lap. The children appear to be posing for the camera, with some of them looking directly at it. The house is surrounded by trees, and the overall atmosphere of the image is one of simplicity and intimacy.

Created by amazon.nova-pro-v1:0 on 2025-06-08

The black-and-white image features a group of people standing and sitting on the porch of a house. A woman holding a baby is sitting on the porch, and a boy is standing behind her. Some children are sitting on the right side, and one is standing on the left side. Behind them are a window, a door, and a forest. The image has a white border around it.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-16

Here's a description of the image:

The image is a black and white photograph of a family in what appears to be a rural setting. It portrays a diverse group of people, likely several children and an adult woman.

Here's a breakdown of what I see:

- Composition: The image is a snapshot of family life, with several people seemingly posed for the shot. The composition is natural, with the subjects spread out and interacting with the surroundings.

- Subjects: There are several children of varying ages and sizes, all dressed in casual attire. A woman, possibly the mother or grandmother, is holding a baby. Some of the children are looking directly at the camera, while others are occupied.

- Setting: The background shows a mountain landscape; the setting looks weathered and rustic.

- Atmosphere: The overall mood is one of intimacy and family. The black and white photography gives a timeless feel to the image.

Let me know if you would like a more detailed description!

Created by gemini-2.0-flash on 2025-05-16

Here is a description of the image:

This black and white photograph features a group of children and an adult woman in what appears to be a rural or impoverished setting. The composition is divided between the foreground and background, with the figures arranged on and around a porch area.

On the left side of the image, three girls are visible. The girl in the center, slightly turned to the left, wears a light-colored tank top. To her left, another girl wears a tank top. Another girl looks out from behind a porch pole on the right.

To the right of the porch pole, an adult woman sits, holding a baby. Several other children are positioned on the porch. One older boy stands on the porch; another boy sits on a makeshift bench with another child.

The background shows a wooded area with rolling hills. The overall impression is one of poverty and hardship, with the children appearing serious and the setting appearing unkempt. The photograph is taken at eye level, giving the viewer a sense of being present in the scene. The composition is tight, focusing on the faces and expressions of the subjects.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-01

This black-and-white photograph depicts a group of children and a woman gathered around a rustic, wooden house. The scene appears to be set in a rural or mountainous area, suggested by the dense foliage and misty background.

Here are the details:

Setting: The house is made of wood and looks weathered. There is a porch with a few steps leading up to it. The environment around the house is lush with trees and vegetation, and the atmosphere seems slightly foggy, indicating a possibly cool and humid climate.

People:

- Children: There are several children of varying ages. Some are standing on the porch, while others are seated or standing near the steps. Their expressions range from curious to serious.

- Woman: A woman, presumably a mother or caretaker, is seated on the steps holding a baby. She has a calm demeanor and is looking directly at the camera.

Clothing: The children are dressed in simple, casual clothing, such as tank tops and shorts, which suggests warm weather. Some of the children are barefoot, and others are wearing sandals or shoes.

Objects: There is a bucket near the steps, and some of the children are holding or leaning on various items, such as a tire and a wooden pole.

Atmosphere: The overall mood of the photograph is one of simplicity and rural life. The expressions on the faces of the subjects and the natural setting create a sense of timelessness and a glimpse into everyday life in this particular environment.

The photograph captures a candid moment in the lives of these individuals, providing a snapshot of their living conditions and interactions.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-06

This is a black-and-white photograph showing a group of people, including adults and children, gathered on and around the porch of a rustic building. The porch is supported by a wooden post, and the setting appears to be in a rural or mountainous area, as there are trees and greenery in the background.

- In the foreground, there are two young girls, one holding a pole, and another young girl sitting on the ground with her legs crossed.

- In the middle, an adult woman is holding a baby while another child leans against the porch railing.

- In the background, two young boys are visible. One is climbing on the wooden post, and the other is sitting on the porch with his arms crossed.

- The overall atmosphere of the photograph suggests a candid, everyday moment, and the clothing and setting appear to reflect a rural or working-class lifestyle.

The photograph captures a sense of community and simplicity, emphasizing the close relationship between the individuals and their environment.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-06

This black-and-white photograph captures a group of children and an adult sitting and standing around a rustic wooden post in front of a modest house. The setting appears to be rural, with dense foliage and trees in the background. The children are dressed in casual, somewhat ragged clothing, and their expressions range from serious to somewhat playful. One child is climbing the wooden post, while others are sitting on the ground or on the porch of the house. The adult, who appears to be a woman, is holding a baby and is seated on the porch. The overall atmosphere of the image suggests a candid and unposed moment, capturing a slice of everyday life in a rural setting.