Machine Generated Data

Tags

Amazon

created on 2021-12-14

Clarifai

created on 2023-10-15

Imagga

created on 2021-12-14

| furniture | 30.4 |

| bookcase | 26.6 |

| case | 24.4 |

| interior | 23 |

| computer | 22.9 |

| business | 20.6 |

| equipment | 19.9 |

| furnishing | 18.2 |

| technology | 17.8 |

| indoors | 17.6 |

| television | 17.4 |

| table | 17.2 |

| call | 17 |

| room | 16.9 |

| office | 16.8 |

| shop | 16.1 |

| telephone | 14.9 |

| modern | 14 |

| electronic equipment | 13.9 |

| people | 13.4 |

| pay-phone | 12.7 |

| desk | 12.2 |

| building | 12.1 |

| window | 12.1 |

| black | 12 |

| work | 11.8 |

| man | 11.4 |

| monitor | 11.4 |

| digital | 11.3 |

| person | 11.3 |

| inside | 11 |

| device | 10.9 |

| indoor | 10.9 |

| home | 10.4 |

| architecture | 10.1 |

| 3d | 10.1 |

| old | 9.7 |

| working | 9.7 |

| design | 9.6 |

| laptop | 9.5 |

| professional | 9.3 |

| communication | 9.2 |

| house | 9.2 |

| adult | 9.1 |

| machine | 9 |

| chair | 8.9 |

| apartment | 8.6 |

| corporate | 8.6 |

| glass | 8.5 |

| industry | 8.5 |

| telecommunication system | 8.5 |

| restaurant | 8.5 |

| keyboard | 8.4 |

| mercantile establishment | 8.4 |

| hand | 8.3 |

| phone | 8.3 |

| businesswoman | 8.2 |

| style | 8.2 |

| light | 8 |

| worker | 8 |

| lifestyle | 7.9 |

| lamp | 7.9 |

| wood | 7.5 |

| city | 7.5 |

| vintage | 7.4 |

| holding | 7.4 |

| retro | 7.4 |

| back | 7.3 |

| data | 7.3 |

| decoration | 7.2 |

| art | 7.2 |

| night | 7.1 |

| male | 7.1 |

| information | 7.1 |

| job | 7.1 |

| businessman | 7.1 |

| screen | 7 |

Google

created on 2021-12-14

| Style | 84 |

| Black-and-white | 83.6 |

| Chair | 79.7 |

| Musician | 79.1 |

| Font | 78.7 |

| Musical instrument | 76.5 |

| Art | 76.4 |

| Music | 74.8 |

| Monochrome photography | 73.8 |

| Recital | 73.8 |

| Monochrome | 72.9 |

| Poster | 69.5 |

| Advertising | 69.5 |

| Event | 68.6 |

| Room | 66 |

| Stock photography | 64.8 |

| Suit | 64.2 |

| Pianist | 62.9 |

| Entertainment | 62.2 |

| Classic | 59 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Age | 22-34 |

| Gender | Male, 94% |

| Calm | 76.9% |

| Sad | 13.1% |

| Angry | 6.8% |

| Fear | 1.3% |

| Confused | 0.7% |

| Surprised | 0.4% |

| Disgusted | 0.4% |

| Happy | 0.3% |

AWS Rekognition

| Age | 29-45 |

| Gender | Male, 99.7% |

| Fear | 89.2% |

| Surprised | 7.5% |

| Calm | 1.2% |

| Sad | 0.9% |

| Angry | 0.4% |

| Confused | 0.4% |

| Happy | 0.2% |

| Disgusted | 0.2% |

AWS Rekognition

| Age | 21-33 |

| Gender | Male, 98.8% |

| Calm | 97.3% |

| Happy | 1% |

| Sad | 0.8% |

| Angry | 0.6% |

| Surprised | 0.1% |

| Confused | 0.1% |

| Disgusted | 0.1% |

| Fear | 0% |

AWS Rekognition

| Age | 32-48 |

| Gender | Male, 99.9% |

| Calm | 82.2% |

| Happy | 7.6% |

| Sad | 5.1% |

| Angry | 3% |

| Surprised | 1% |

| Confused | 0.7% |

| Fear | 0.2% |

| Disgusted | 0.2% |

AWS Rekognition

| Age | 22-34 |

| Gender | Female, 98.3% |

| Calm | 75.3% |

| Sad | 16.3% |

| Angry | 2.4% |

| Disgusted | 1.6% |

| Surprised | 1.4% |

| Confused | 1.2% |

| Fear | 0.9% |

| Happy | 0.9% |

AWS Rekognition

| Age | 20-32 |

| Gender | Male, 76.6% |

| Sad | 66.4% |

| Calm | 15.9% |

| Happy | 10.2% |

| Angry | 6.2% |

| Surprised | 0.6% |

| Disgusted | 0.3% |

| Fear | 0.2% |

| Confused | 0.1% |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Very unlikely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Likely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Very unlikely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Very unlikely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Very unlikely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Very unlikely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Very unlikely |

| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| interior objects | 99.8% |

Captions

Microsoft

created by unknown on 2021-12-14

| a group of people sitting at a desk in front of a store | 64% |

| a person sitting at a desk in front of a store | 63.9% |

| a group of people sitting at a desk | 63.8% |

Clarifai

created by general-english-image-caption-blip on 2025-05-17

| a photograph of a group of people sitting around a table | -100% |

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-16

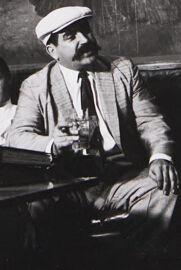

Here's a description of the image:

Overall Impression:

The image is a black and white photograph showing a group of people gathered in a well-appointed room, likely a library or study. The photograph has a vintage feel.

Key Elements:

- The People: A group of six or seven people, primarily men, are seated. They appear to be engaged in a casual gathering. One man is holding a long stick. Another is wearing a white hat and holding a glass. One woman is sitting wearing glasses.

- The Setting: The room has dark wooden bookshelves filled with books lining the walls. A large painting of a woman in an evening gown dominates the wall.

- Furniture: The group is seated around a low table.

Possible Interpretations:

The image evokes a sense of history and perhaps a meeting of intellectuals or social elites. The setting suggests a place of learning and contemplation. The group might be discussing a topic or simply enjoying each other's company.

Created by gemini-2.0-flash on 2025-05-16

Here's a description of the image:

This black and white photograph depicts a group of people gathered in what appears to be a library or study. Tall bookshelves filled with books flank a large painting of a woman in an elegant gown.

In the foreground, several people are seated around a table made from suitcases. One man, on the left, is holding a fishing pole. Another man, wearing a light-colored suit and a cap, is seated prominently with a glass in his hand, giving the impression of being a central figure. There are two more men seated behind them, to the right of the man in the cap, dressed in darker suits. A woman with her hair up and wearing glasses sits on the right end of the group.

The overall composition suggests a candid moment, perhaps a social gathering or a meeting of some kind. The presence of the fishing pole and the suitcases as furniture adds an element of intrigue.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-05-31

The image is a black-and-white photograph depicting a group of five men in a room that appears to be a library or study. The room is adorned with bookshelves filled with books on either side of a large painting. The painting is of a woman in a flowing dress, standing in a dramatic pose with a cloudy sky in the background.

The men are dressed in attire that suggests a setting from the mid-20th century. They are seated or leaning against a desk or table, which has various items on it, including papers and possibly a typewriter. The man in the center is wearing a hat and appears to be holding a drink, while the others are engaged in conversation or looking at the camera. The atmosphere seems relaxed and informal, possibly indicating a social gathering or a casual meeting. The overall setting and the attire of the individuals give the image a vintage, nostalgic feel.

Text analysis

Amazon