Machine Generated Data

Color Analysis

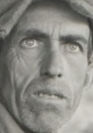

Face analysis

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 40-48 |
| Gender | Male, 100% |
| Confused | 95.5% |
| Calm | 3.6% |
| Sad | 0.4% |
| Disgusted | 0.1% |
| Fear | 0.1% |
| Angry | 0.1% |
| Surprised | 0% |
| Happy | 0% |

Feature analysis

AWS Rekognition

| Person | 97.3% |

Categories

Imagga

created on 2022-01-22

| paintings art | 99.8% |

Captions

Microsoft

created by unknown on 2022-01-22

| an old photo of a man | 84.2% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-12

photograph of a man and a woman in a car.

Salesforce

Created by general-english-image-caption-blip on 2025-05-22

a photograph of a man and woman sitting in a car

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-14

This black-and-white image depicts the interior of an old vehicle with two individuals seated inside. The person on the left is dressed in heavy clothing, possibly a coat or blanket, while the person on the right is wearing patterned clothing and is positioned near the steering wheel. The vehicle appears to be rustic, with a worn dashboard and visible metal components. Its roof is made of canvas or similar fabric and is visibly damaged. The photo captures a candid moment likely from an earlier historical period, evoking themes of travel or hardship.

Created by gpt-4o-2024-08-06 on 2025-06-14

The image depicts the interior of an old vehicle, possibly a truck or car, with two seated individuals. The person on the right is holding the steering wheel, indicating they might be the driver. They are wearing a coat and a hat. The interior of the vehicle shows signs of wear, with a rustic and aged appearance. The dashboard and steering wheel suggest a vintage vehicle design, and the visible clothing appears to be from an earlier era, possibly mid-20th century. The image is in black and white, emphasizing the historical context.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-16

The image shows a man sitting in the driver's seat of a vehicle, bundled up against the cold. He appears to be an older man with a weathered face, wearing a hat and coat. The interior of the vehicle is dimly lit, with a steering wheel and other details visible. The overall impression is one of hardship and struggle, with the man's expression conveying a sense of determination or resignation in the face of difficult circumstances.

Created by us.anthropic.claude-3-opus-20240229-v1:0 on 2025-06-16

The black and white photograph shows two people, a man and a woman, sitting in what appears to be an old automobile from the early-to-mid 20th century era based on the styling. The man is at the wheel, wearing a cap and looking straight ahead with a pensive expression. The woman is bundled up in a coat or blanket, looking towards the camera. The interior of the vehicle has a worn, rustic appearance with exposed wooden beams and panels. The overall mood of the image seems contemplative, capturing a quiet moment between the vehicle's occupants during their journey.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-16

This is a black and white photograph that appears to be from the early to mid-20th century. It shows two people sitting in what appears to be an old automobile. The driver is wearing a flat cap and appears to be a working-class individual with weathered features, seated at the steering wheel. Next to them is a passenger bundled up in what looks like a fur-collared coat. The image has the grainy, documentary-style quality typical of Depression-era photography. The interior of the vehicle is sparse and utilitarian, characteristic of early automobiles. The photograph captures a moment of everyday life from this historical period.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-05-23

This black-and-white photograph captures a man and woman sitting in the cab of an old truck, facing the camera. The man, wearing a flat cap and a jacket, is positioned behind the steering wheel, while the woman, dressed in a coat, sits beside him. The interior of the truck features a wooden ceiling and a windshield, with the man's hands gripping the steering wheel. The background, visible through the windshield, appears to be a rural landscape.

The image, taken by Dorothea Lange in 1936, is part of the Library of Congress's collection. It is titled "Migrant Worker and Wife, Arkansas" and is cataloged as LC-USF34-00001-S. The photograph provides a poignant glimpse into the lives of migrant workers during the Great Depression, highlighting the struggles and hardships they faced.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-05-23

The image is a black-and-white photograph of a man and woman sitting in the front seats of an old car. The man, wearing a cap, is behind the steering wheel, while the woman sits beside him. The car's interior appears worn, with visible signs of wear and tear on the seats and dashboard.

In the background, a blurred landscape can be seen through the windshield, suggesting that the photo was taken during a journey or outing. The overall atmosphere of the image conveys a sense of nostalgia and simplicity, capturing a moment in time from a bygone era.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-05-23

The image is a black-and-white photograph of a man and a woman sitting inside a vehicle. The man is wearing a hat and is sitting on the driver's seat. He is holding the steering wheel with his left hand. The woman is sitting next to him, wearing a fur-lined coat. She is looking to the left, and her right hand is on her lap. The vehicle's interior is visible, including the dashboard and the windshield.

Created by amazon.nova-lite-v1:0 on 2025-05-23

The image shows a black and white photograph of two people sitting inside a vehicle. The man is wearing a hat and is holding the steering wheel, while the woman is sitting beside him and is looking at him. They are both wearing winter clothes, and the man is wearing a scarf around his neck. The vehicle appears to be an old car or truck, with a wooden roof and a windshield. The image has a vintage look and feel, suggesting that it was taken many years ago.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-17

Here is a description of the image:

The image is a black and white photograph. It features a man and a woman seated inside an old car. The man is driving, holding the steering wheel. He wears a cap and a collared shirt. His face has a weathered look, and his eyes are focused forward. The woman is seated beside him, facing slightly to the side. She is bundled in a coat, and the car's interior appears somewhat worn. The lighting suggests it's a daytime scene. The overall style is reminiscent of documentary photography, possibly from the early to mid 20th century.

Created by gemini-2.0-flash on 2025-05-17

The grayscale photo presents a man and a woman inside an old car, the man behind the wheel and the woman sitting next to him. The man is wearing a cap and a jacket, and he appears to be focused on the road. His face is weathered, showing the signs of age and hardship.

The woman, seated next to him, is wearing a coat with a fur collar. Her expression is contemplative, and she seems to be looking off into the distance. The car's interior is sparse and worn, with visible signs of age and wear. The roof appears to be tattered, and the dashboard is simple and functional.

The scene evokes a sense of the past, possibly during a time of economic hardship or displacement. The black and white tones further enhance the sense of nostalgia and the timeless quality of the image. The framing of the shot places the viewer inside the car with the subjects, creating a sense of intimacy and immediacy.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-05-24

The image is a black-and-white photograph depicting two individuals sitting inside an old car. The person on the right is a man who appears to be the driver, as he is holding the steering wheel. He is wearing a cap and a jacket, and his facial expression looks serious or contemplative. The person on the left is a woman, who is seated in the passenger seat. She is wrapped in a coat with a fur collar and appears to be looking slightly away from the camera. The car's interior is visible, with a cloth or blanket draped over the seat between them. The vehicle looks worn, suggesting it might be from an earlier era, possibly the early to mid-20th century. The overall mood of the image conveys a sense of hardship or endurance, possibly reflecting the challenges faced during that time period.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-09

The image is a black-and-white photograph of two individuals inside a vehicle, likely an old car, given the steering wheel and dashboard design. The person on the left appears to be a woman wearing a large fur-trimmed coat and has a contemplative or serious expression. The person on the right is a man wearing a flat cap, a jacket, and gloves, and he is looking ahead with a pensive or concerned expression. The vehicle's interior suggests a vintage model, with visible details like the steering wheel, dashboard, and part of the windshield. The photograph has a slightly grainy texture, indicative of its age or the technology used to capture the image. The setting outside the vehicle is not clearly visible, but it appears to be outdoors with dry, possibly arid terrain. The overall mood of the photograph conveys a sense of quiet intensity or contemplation.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-09

This black-and-white photograph captures two individuals seated inside a car, likely from an older time period. The person on the left appears to be a woman, wearing a coat with a fur collar and a blanket draped over her lap. The person on the right, presumably a man, is wearing a cap and a coat, and is holding the steering wheel, suggesting he is the driver. The interior of the car is visible, including the steering wheel and dashboard. The overall tone of the image suggests a historical context, possibly from the early to mid-20th century. The expressions on their faces convey a sense of seriousness or contemplation.