Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 25-35 |
| Gender | Male, 100% |
| Calm | 99.6% |
| Confused | 0.2% |
| Sad | 0.1% |
| Angry | 0% |
| Happy | 0% |
| Disgusted | 0% |
| Surprised | 0% |
| Fear | 0% |

Feature analysis

Amazon

AWS Rekognition

| Label | Confidence |
|---|---|
| Person | 99.3% |

Categories

Imagga

Created on 2022-01-23

| Category | Confidence |
|---|---|
| paintings art | 100% |

Captions

Microsoft

Created by unknown on 2022-01-23

| Caption | Confidence |
|---|---|
| a group of people posing for a photo | 77.7% |
| an old photo of a man | 77.6% |
| a man and woman posing for a photo | 53.3% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-13

photograph of a family sitting on a bench.

Salesforce

Created by general-english-image-caption-blip on 2025-05-16

a photograph of a group of people sitting on a bench in a garden

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-15

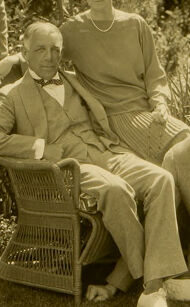

This sepia-toned image features a group of four individuals posing outdoors in a lush garden setting. Three individuals are sitting—two directly on the grass and one in a wicker chair—while the fourth stands slightly behind them. The group is dressed in early-20th-century clothing, including suits with ties and high-waisted trousers. The standing individual wears a vest under the jacket, adding an element of formal attire. Trees, shrubs, and filtered sunlight create a peaceful natural backdrop. The photo suggests a casual gathering with a vintage aesthetic.

Created by gpt-4o-2024-08-06 on 2025-06-15

The image depicts four people in a garden setting. Two individuals are seated, one on a wicker chair and the other on the ground, with their legs crossed. The seated individual on the ground is wearing a suit with a bow tie. The person sitting on the chair is dressed in casual clothing, with trousers and a pullover sweater. The standing figure is dressed in a suit and bow tie, positioned behind the seated individuals. The background features lush greenery with trees and shrubs. The photograph is mounted on a plain beige frame, and there is a signature or inscription in the lower right corner of the frame.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-20

The image shows a group portrait of four people in a garden setting. There is an older man seated in a wicker chair, with a younger man and woman standing behind him, and another younger man sitting on the ground in front of the group. They appear to be dressed in formal attire from an earlier era, with the men wearing suits and the woman wearing a dress. The background features lush greenery, including trees and shrubs, creating a natural and serene environment for the portrait.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-20

This is a vintage black and white photograph showing four people in what appears to be a garden setting. The image has a formal yet relaxed quality typical of 1920s or early 1930s photography. The subjects are well-dressed - the men are wearing suits with bow ties and sweaters, while there's a woman in what appears to be a casual dress. One person is seated in what looks like a wicker chair, while another sits on the ground, and two others are standing. The garden setting includes foliage and trees in the background, creating a pleasant outdoor atmosphere. The photograph has the sepia tones and composition style characteristic of that era's portrait photography.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-05-30

The image is a sepia-toned photograph of four individuals posing in a garden setting. The photograph is framed by a thick border, with the top and bottom edges being wider than the left and right edges.

- Background:

  - The background of the photograph features a lush garden with various plants and trees.

  - The garden appears to be well-manicured, with neatly trimmed bushes and flowers.

- Individuals:

  - There are four individuals in the photograph, all dressed in formal attire.

  - The man standing on the left is wearing a suit and tie, with his hands in his pockets.

  - The woman sitting on the right is wearing a dress and pearl necklace, with her hands resting on her lap.

  - The man sitting on the left is wearing a suit and bow tie, with his arms crossed.

  - The man kneeling on the right is wearing a suit and bow tie, with his hands clasped together.

- Overall Impression:

  - The photograph appears to be a formal portrait, possibly taken for a family album or as a keepsake.

  - The individuals in the photograph are posing in a relaxed manner, suggesting that the photo was taken in a casual setting.

The photograph provides a glimpse into the lives of the individuals in the image, showcasing their formal attire and relaxed demeanor in a beautiful garden setting.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-05-30

This image is a sepia-toned photograph of four individuals, likely taken in the early 20th century. The group consists of two men and two women, all dressed in formal attire.

The man on the left stands with his hands in his pockets, wearing a suit jacket, vest, shirt, tie, and pants. He has short hair and appears to be looking directly at the camera.

In the center, a woman sits on the arm of a wicker chair, which is occupied by another man. She wears a long-sleeved dress and has her hair styled in an updo. The man in the chair has his legs crossed and is dressed in a suit jacket, vest, shirt, bow tie, and pants. He also has short hair and looks directly at the camera.

On the right, a man kneels on the ground, wearing a suit jacket, vest, shirt, bow tie, and pants. He has short hair and appears to be looking at the camera.

The background of the photo features trees and bushes, suggesting that it was taken outdoors. The overall atmosphere of the image is one of formality and elegance, with the subjects posing in a dignified manner.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-05-29

The image is a black-and-white photograph of four people posing for a picture. The four people are sitting on a wicker chair and kneeling on the ground. The man on the left is standing with his hands in his pockets. The man in the middle is sitting on a chair with his legs crossed. The woman is sitting on the lap of the man in the middle. The man on the right is kneeling on the ground. The background is blurry with trees and plants.

Created by amazon.nova-pro-v1:0 on 2025-05-29

The image is a black and white photograph of four people. Two men are standing on the left side of the image, while two people are sitting on the right side. One of the sitting people is a woman, and the other is a man. The man on the left is wearing a suit, while the man on the right is wearing a bow tie. Behind them are trees and plants.

Google Gemini

Created by gemini-2.0-flash on 2025-05-18

Here's a description of the image:

The image is a black and white photograph of four people in what appears to be a garden setting. The photo is mounted on a larger tan-colored backing.

From left to right:

- A young man stands with his hands in his pockets. He is wearing a suit, a sweater and a tie.

- An older man is seated in a wicker chair, wearing a suit and bow tie.

- A woman stands behind the seated man, and has her hand on his shoulder. She wears a simple sweater and a necklace.

- Another young man is kneeling in front, wearing a suit and bow tie.

The background consists of trees and greenery, giving the impression of an outdoor setting, possibly a garden or park. The overall tone of the image is formal and dignified, suggesting that it might be a family portrait from an earlier era. A signature, likely that of the photographer, is visible on the photograph in the bottom right.

Created by gemini-2.0-flash-lite on 2025-05-18

Certainly! Here's a description of the image:

Overall Impression:

The image is a vintage black and white photograph. It appears to be a family portrait. The composition is framed within a larger, off-white border.

People & Composition:

- There are four people posed outdoors, in a garden setting.

- One man is standing at the far left. He's wearing a suit jacket over a sweater.

- One older man is seated in a wicker chair, wearing a suit.

- A woman is sitting next to the seated man and is wearing a sweater.

- Another man is sitting, with one knee bent, on the ground to the right. He is wearing a suit.

- The people seem to be well-dressed in what could be considered formal attire for the time.

Setting:

- The background consists of lush greenery, including trees and possibly a garden.

- The image suggests a relaxed, outdoor setting.

Style & Age:

- The black and white photography and the style of dress point to a historical period, possibly the early to mid-20th century.

- The photograph has a slightly faded, vintage quality to it, which adds to its historical feel.

Overall, the image is a formal family portrait taken in a natural setting, likely capturing a special moment in time.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-05-31

The image is a sepia-toned photograph featuring four individuals posing outdoors in what appears to be a garden setting. The photograph is framed with a light-colored mat.

The person on the far left is standing and appears to be a young man. He is wearing a light-colored sweater, a dark jacket, and a tie. His hands are in his pockets, and he is looking directly at the camera.

The individual seated in the center is an older man wearing a suit, a bow tie, and glasses. He is seated in a wicker chair and has his right hand resting on his chin, while his left hand is on the armrest of the chair.

The person standing behind the seated man is a woman with short, curly hair. She is wearing a light-colored dress with a darker collar and is looking at the camera with a slight smile.

The person on the far right is a young man seated on the ground. He is wearing a suit and a bow tie, and his legs are crossed. He is looking at the camera with a relaxed posture.

The background of the image features lush greenery, including bushes and trees, suggesting that the photograph was taken in a garden or a similar outdoor setting. The overall tone of the image is formal yet relaxed, capturing a moment of togetherness among the individuals.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-05

This image is a sepia-toned photograph featuring a group of four individuals posing outdoors in a garden setting. The scene appears to be from a historical period, possibly the early 20th century, judging by the clothing styles and the overall aesthetic of the image.

Foreground (Seated):

- There is a man seated on a wicker chair, dressed in formal attire with a bow tie and a suit. He appears older than the others, which may suggest he is a significant figure in the group.

- To his right (from the viewer's perspective), there is another man dressed in a suit and bow tie, sitting with one leg crossed over the other. He appears younger than the other seated individual.

Background (Standing):

- Behind the seated individuals, there is a woman standing, wearing a long-sleeved dress and a necklace. Her hairstyle is consistent with early 20th-century fashion.

- To her left (from the viewer's perspective), there is another man standing, dressed in a suit with a tie. He has a neat appearance and is standing with his hands in his pockets.

The background consists of lush greenery, including trees and shrubs, which adds to the serene and natural setting of the photograph. The overall mood of the image is formal and composed, typical of family or group portraits from that era.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-05

This image is a sepia-toned vintage photograph of four individuals, likely taken in the early 20th century. The setting appears to be an outdoor garden with trees and foliage in the background.

- Standing figure (left): A man dressed in a suit with a tie and a light-colored sweater beneath his jacket. He has his hands in his pockets and is looking directly at the camera.

- Seated figure (center-left): An older man sitting in a wicker chair. He is dressed in a suit with a bow tie and a light-colored sweater. He is wearing white shoes and is looking slightly to the side.

- Seated figure (center-right): A woman standing behind the older man. She is wearing a long dress and a necklace. Her hair is styled in a manner typical of the early 20th century.

- Crouching figure (right): A younger man crouching in front of the other figures. He is dressed in a suit with a bow tie and is looking directly at the camera.

The photograph has a classic, formal composition, and the attire of the individuals suggests a formal or semi-formal occasion. There is a signature in the bottom right corner of the photograph.