Machine Generated Data

Color Analysis

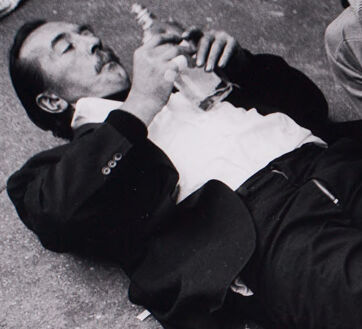

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 32-48 |
| Gender | Male, 96% |
| Calm | 99.2% |
| Sad | 0.3% |
| Surprised | 0.2% |
| Fear | 0.2% |
| Angry | 0.1% |
| Happy | 0.1% |
| Disgusted | 0% |
| Confused | 0% |

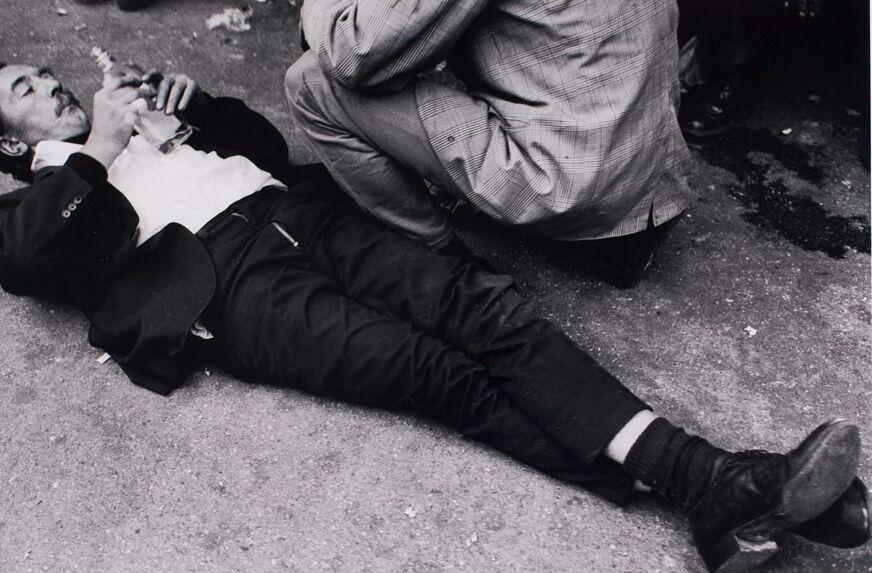

Feature analysis

Amazon

AWS Rekognition

| Label | Confidence |
| --- | --- |
| Person | 97.9% |

Categories

Imagga

created on 2021-12-14

| Category | Confidence |
| --- | --- |
| food drinks | 79.1% |
| paintings art | 7.3% |
| cars vehicles | 6.7% |
| pets animals | 2.4% |
| nature landscape | 2% |

Captions

Microsoft

created by unknown on 2021-12-14

| Caption | Confidence |
| --- | --- |
| a boy lying on the ground | 65.5% |
| a young man lying on the ground | 65.4% |
| a person lying on the ground | 65.3% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-11

a man lies on the ground with his head on his hands.

Salesforce

Created by general-english-image-caption-blip on 2025-05-22

a photograph of a man laying on the ground with a cigarette in his mouth

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-12

The image depicts a man lying on the ground, dressed in a dark suit, white shirt, and black shoes. His legs are fully extended, and his posture suggests he is either resting or incapacitated. Another individual, wearing a patterned jacket, is kneeling nearby, seemingly attending to the person lying down. Surrounding them, the surface appears to be rough pavement, with scattered debris visible in the background. The scene suggests an urban environment and may capture a moment of concern or assistance.

Created by gpt-4o-2024-08-06 on 2025-06-12

The image shows a person lying on the ground, wearing a dark suit and black socks. Their shoes are visible, and they appear to be holding or inspecting something in their hands. Another person, dressed in a plaid-patterned coat, is crouching next to them. The scene takes place on a rough, textured surface, possibly a sidewalk or pavement, with some scattered debris around.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-16

The image depicts a person lying on the ground, seemingly unconscious or injured. The person is wearing a suit and appears to be a man. Another person, likely a bystander or authority figure, is standing over the person on the ground. The image has a somber, dramatic tone, suggesting some kind of incident or altercation has occurred.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-16

This is a black and white photograph showing a person lying on what appears to be a street or concrete surface. They are wearing a dark suit and white shirt, and appear to be smoking while lying down. Another person is partially visible in the frame, sitting next to the reclining figure. The image has a candid, documentary-style quality typical of street photography. The composition is striking, with the full length of the reclining figure captured in the frame.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-01

The image is a black-and-white photograph of a man lying on the ground, with another person kneeling beside him. The man on the ground is dressed in dark pants and a dark jacket over a white shirt, with his head tilted back and his eyes closed. He appears to be holding a cigarette in his right hand, which is raised to his mouth.

The person kneeling beside him is wearing light-colored pants and a plaid shirt, with their back to the camera. The background of the image shows a concrete floor littered with trash and debris, suggesting that the scene may be taking place in an urban or industrial setting.

Overall, the image conveys a sense of relaxation and casualness, despite the somewhat gritty surroundings. The man's pose and expression suggest that he is enjoying his cigarette and may be taking a break from some activity. The presence of the other person kneeling beside him adds a sense of companionship and social interaction to the scene.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-01

The image depicts a black-and-white photograph of a man lying on the ground, with his head resting on his left arm. He is dressed in a dark suit jacket, white shirt, and dark pants, with his legs extended and feet pointing towards the right side of the image. His right hand is raised to his mouth, holding a cigarette or cigar.

To the right of the man, another person is crouched down, with their back to the camera. They are wearing a light-colored plaid jacket and dark pants. The background of the image appears to be a concrete or asphalt surface, with some debris scattered around the man's head.

Overall, the image suggests that the man may have been involved in some kind of accident or altercation, and the person crouched down beside him may be trying to help or comfort him. The image is presented in a classic black-and-white style, which adds to its nostalgic and timeless feel.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-04

The black and white photograph depicts a scene on a street. A man is lying on the ground, seemingly unconscious or asleep, wearing a black suit and leather shoes. His head is tilted to the side, and he has a cigarette in his hand, which he is smoking. Another person is kneeling beside him, possibly attending to him or checking on his condition. The photograph captures a moment of stillness and quiet, with the focus on the two individuals and the cigarette smoke in the air.

Created by amazon.nova-pro-v1:0 on 2025-06-04

The black-and-white image shows a man lying on the ground with his legs crossed and his eyes closed, probably resting. He is wearing a suit and holding a cigarette in his hand. Next to him, another person is sitting on the ground, wearing a checkered shirt and pants. The ground is dirty, with some litter scattered around. The image has a white border.

Google Gemini

Created by gemini-2.0-flash on 2025-05-16

Here is a description of the image:

The black and white photograph shows a man lying on his back on what appears to be a concrete or asphalt surface. He is wearing a dark suit jacket, a white shirt that is partially unbuttoned, and dark pants that are slightly cropped above his black, socked ankles and worn, dark shoes. His eyes are closed, and he is holding something in his hands near his chest. Debris litters the ground around him.

Behind him, another person is kneeling, partially out of frame, with a plaid patterned jacket visible. The overall scene has a gritty, urban feel.

Created by gemini-2.0-flash-lite on 2025-05-16

Here's a description of the image:

Overall Impression: The image is a black and white photograph depicting a man lying on the ground. There's a sense of stillness and a bit of melancholy in the scene.

Details:

- The Man: The main subject is a man, dressed in a dark suit jacket, a white shirt, dark trousers and boots. He is lying on his back or side, with his legs stretched out. He appears to be holding something in his hands near his face, possibly lighting a cigarette or in the act of smoking. He has a mustache.

- The Setting: The ground appears to be concrete. There are some small pieces of trash scattered around. Another person in a checkered shirt and pants can be seen in the background.

- Style: The photograph has a documentary feel, perhaps taken on the street. The monochrome palette adds to the overall mood, emphasizing the shapes and textures.

- Light and Composition: The lighting is natural, and the composition is relatively simple, focusing on the man's posture and the details of the scene.

Possible Interpretations: The photo could depict someone who is sick, homeless, or simply taking a moment. It evokes a sense of isolation or a quiet moment in a bustling environment.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-05-31

The black-and-white photograph depicts a scene with two men. One man is lying on the ground, dressed in dark clothing including a jacket and pants. He appears to be injured or unconscious, as he is not moving and his eyes are closed. The other man, wearing a light-colored, checkered suit, is leaning over him, seemingly trying to assist or check on him. The setting appears to be outdoors, possibly on a street or sidewalk, as indicated by the pavement and scattered debris. The overall atmosphere of the image suggests a moment of distress or emergency.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-09

The image is a black-and-white photograph that appears to be taken in an urban environment, possibly a street. It shows a man lying on the ground, seemingly unconscious or asleep, with his head resting on the pavement. He is dressed in formal attire, including a jacket and trousers, which contrasts with his slumped posture. Next to him, there is another person, possibly a bystander or a companion, who is crouched down and appears to be holding a small object, possibly a phone or a small device. The ground is littered with debris, including what looks like an empty bottle. The monochrome tone of the photograph adds to the somber and mysterious atmosphere of the scene.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-09

This black-and-white photograph depicts a man lying on the ground, appearing to be unconscious or asleep. He is dressed in a dark suit, white shirt, and dark shoes, and his legs are splayed out. Another person, dressed in a plaid shirt and dark pants, is kneeling beside him, holding a bottle near the man's face, possibly attempting to revive him. The scene takes place on a rough, textured surface, possibly a street or sidewalk, with some debris scattered around. The overall mood of the image suggests a moment of desperation or care in an urban setting.