Machine-Generated Data

Tags

Color Analysis

Face analysis

AWS Rekognition

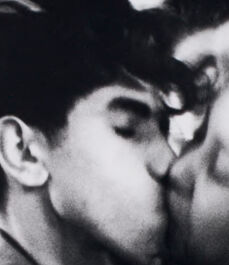

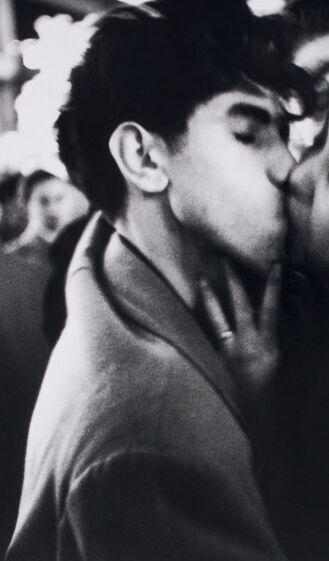

| Attribute | Estimate |
| --- | --- |
| Age | 18-24 |
| Gender | Male, 88.6% |
| Sad | 45% |
| Calm | 20% |
| Disgusted | 16.5% |
| Happy | 6.4% |
| Angry | 4% |
| Confused | 3.8% |
| Fear | 2.7% |
| Surprised | 1.6% |

Feature analysis

AWS Rekognition

| Feature | Confidence |
| --- | --- |
| Person | 99.4% |

Categories

Imagga

Created on 2022-01-08

| Category | Score |
| --- | --- |
| pets animals | 37.9% |
| people portraits | 27.9% |
| paintings art | 22.2% |
| interior objects | 4.7% |
| text visuals | 3% |
| food drinks | 2.5% |

Captions

Microsoft

Created by unknown on 2022-01-08

| Caption | Confidence |
| --- | --- |
| a man standing in front of a mirror posing for the camera | 35.8% |
| a man looking at the camera | 35.7% |
| a man and a woman looking at the camera | 35.6% |

Salesforce

Created by general-english-image-caption-blip on 2025-05-22

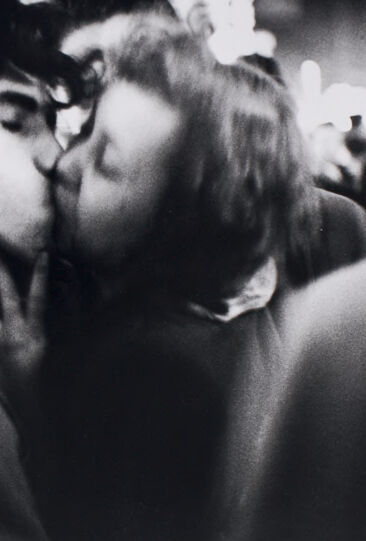

a photograph of a man and woman kissing in a crowded area

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-15

The image is a black-and-white scene depicting a crowded urban environment, likely a busy night in the city. People are gathered and moving in various directions under bright illuminated signs and blurred lights, suggesting a vibrant nightlife atmosphere. The composition focuses on a close perspective, capturing interactions and movement amid the larger group. The overall mood conveys intimacy and a bustling, dynamic urban experience.

Created by gpt-4o-2024-08-06 on 2025-06-15

The image is a black and white photograph depicting a crowded street scene. It is nighttime, and the background is filled with soft-focus lights and signs, suggesting an urban environment, possibly Times Square or a similarly busy area. There are several people visible, mostly adults dressed in coats or suits, indicative of a cooler season or evening time. The crowd appears to be bustling, with multiple individuals walking or standing closely together. The lighting is dim, relying on the ambient illumination from city lights and signage.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-18

The image shows two people, a man and a woman, kissing in an intimate embrace. They appear to be in a crowded public setting, with other people visible in the background. The image is in black and white, giving it a nostalgic, vintage feel. The focus is on the two individuals and their passionate embrace, capturing a moment of intimacy and connection between them.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-18

This is a romantic black and white photograph showing a couple sharing a kiss in what appears to be a crowded public space. The image has a candid, documentary-style quality typical of street photography. The couple is surrounded by other people in the background, creating a sense of intimacy within a busy environment. The lighting and grain of the photograph suggest it was taken in an earlier era, possibly mid-20th century. The composition draws the viewer's focus to the tender moment between the two people while the background figures and urban setting provide context and atmosphere.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-05-27

The image depicts a black-and-white photograph of two individuals sharing a kiss, with the man on the left and the woman on the right. The man has short dark hair and is wearing a coat, while the woman has shoulder-length blonde hair and is also wearing a coat. The background of the image is blurred, but it appears to be a crowded area with people standing around.

The overall atmosphere of the image suggests a romantic moment between the two individuals, possibly in a public setting. The use of black and white photography adds a sense of nostalgia and timelessness to the image, making it feel like a moment frozen in time.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-05-27

The image depicts a black-and-white photograph of two individuals sharing a kiss in a crowded setting. The couple is positioned centrally, with the man on the left and the woman on the right. The man has short dark hair and wears a light-colored jacket, while the woman has shoulder-length light hair and is dressed in a dark top with a white collar.

In the background, several people are visible, although they are out of focus. The atmosphere appears to be lively, with the couple's intimate moment taking place amidst a bustling environment. The overall mood of the image is one of romance and affection, capturing a tender moment between the two individuals.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-05-27

A black-and-white photograph of a couple kissing in the middle of a crowd. The couple is facing each other, and their lips are touching. The man is wearing a suit, while the woman is wearing a dress. The crowd is blurry, and some people are standing and looking at them. The image has a white border on the top and bottom. The image was probably taken in the 1950s.

Created by amazon.nova-pro-v1:0 on 2025-05-27

The black-and-white photo depicts a man and a woman kissing each other passionately. The man is wearing a suit, while the woman is wearing a dress. Behind them, there are several people, some of whom are also wearing suits. The photo was taken in a crowded area, possibly in a street or a public place. The couple is standing close to each other, and their faces are slightly blurred, possibly due to the camera's movement or the subject's movement.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-16

Certainly! Here's a description of the image:

Overall Impression:

The image is a black and white photograph depicting a couple passionately kissing in a crowded public space. It has a vintage, classic feel.

Key Details:

- Focal Point: The central focus is the couple. The man is on the left, and the woman is on the right. They are in the middle of a kiss, with their faces close together, capturing a moment of intimacy.

- Setting: The background appears to be a busy street or public area. There are blurred figures and what appears to be storefronts or buildings in the background. The crowd suggests that this is happening in a public setting.

- Mood/Atmosphere: The image conveys a sense of emotion, likely joy, and perhaps a bit of rebellion. The intimate kiss, combined with the bustling background, creates an interesting juxtaposition.

- Composition: The photograph is a candid shot, suggesting it was taken spontaneously. The framing is close-up, highlighting the couple and allowing the viewer to be part of that moment.

Additional Observations:

- The quality of the photograph suggests it could be from a previous era (perhaps the mid-20th century).

- The photograph is likely a print, indicated by the white border.

- The sharply focused kiss contrasts with the blurred background.

Let me know if you would like me to elaborate on anything!

Created by gemini-2.0-flash on 2025-05-16

The black and white photograph depicts a couple kissing passionately amidst a crowd. The man on the left has dark, swept-back hair and a jacket, his hand subtly touching the woman's face. The woman, with her hair styled back, is locked in the kiss with the man. Their faces are the focal point, blurred slightly by the movement.

The background is filled with people, creating a sense of hustle and bustle. Some faces are more distinct than others, with one man in a suit standing out with a faint smile. The background also includes building facades and blurred lights, suggesting an urban setting. The overall mood is romantic and timeless, capturing an intimate moment within a public space.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-01

The image is a black-and-white photograph capturing a candid moment of two people kissing in a public setting. The couple is surrounded by a crowd of people, suggesting they are in a busy, possibly urban environment. The background is slightly blurred, indicating the focus is on the couple in the foreground. The man has short, dark hair and is wearing a suit jacket, while the woman has medium-length, light-colored hair and is wearing a coat. The overall atmosphere of the image conveys a sense of intimacy and affection amidst a bustling scene. The lighting suggests it might be evening or nighttime, adding to the romantic ambiance.