Machine Generated Data

Face analysis

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 26-36 |
| Gender | Female, 100% |
| Sad | 81.1% |
| Calm | 15.7% |
| Confused | 1.3% |
| Fear | 0.6% |
| Surprised | 0.6% |
| Disgusted | 0.3% |
| Angry | 0.3% |
| Happy | 0.1% |

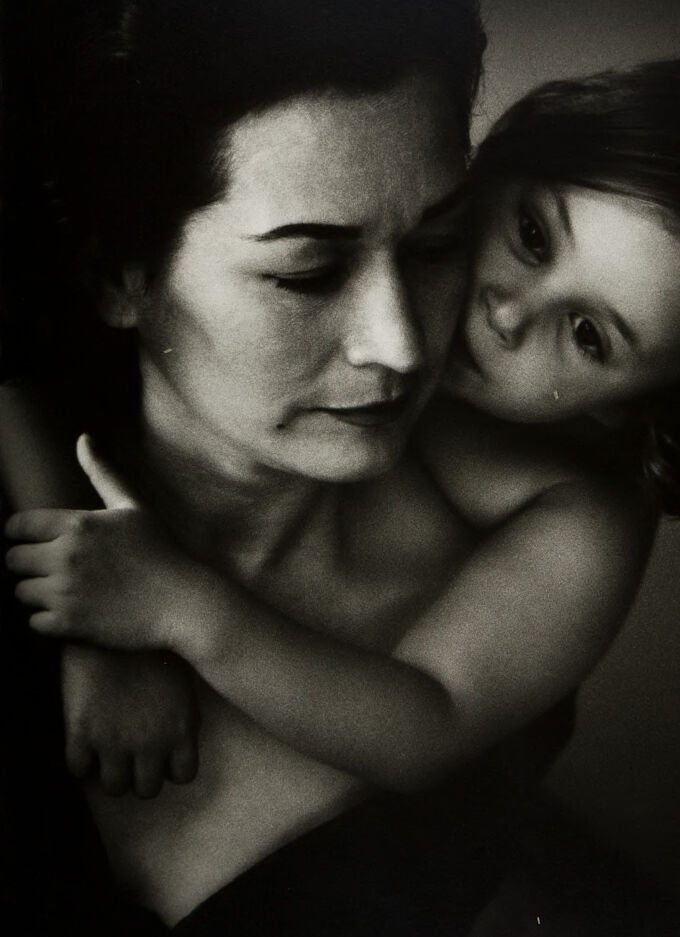

Feature analysis

AWS Rekognition

| Feature | Confidence |
|---|---|
| Person | 91.8% |

Categories

Imagga

Created on 2022-01-09

| Category | Confidence |
|---|---|
| people portraits | 99.6% |

Captions

Microsoft

Created by unknown on 2022-01-09

| Caption | Confidence |
|---|---|
| a man and a woman taking a selfie | 38.9% |
| a man and woman taking a selfie | 30.8% |
| a close up of a man and a woman taking a selfie | 30.7% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-11

person, a mother and daughter by person.

Salesforce

Created by general-english-image-caption-blip on 2025-05-21

a photograph of a woman holding a baby in her arms

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-10

The image is a black-and-white photograph featuring an intimate scene of two figures. One figure is partially unclothed, with the upper torso visible, and the other figure is embracing them with arms wrapped around their shoulders. The composition highlights the textures of skin and hair in a soft, artistic manner, emphasizing emotional closeness and warmth. The photo is signed in the lower-right corner with "Christophe, St. Myers, CA."

Created by gpt-4o-2024-08-06 on 2025-06-10

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-15

The image depicts a close, intimate moment between two individuals. It appears to be a black and white photograph showing the profile of a woman's face as she embraces a child, likely her own. The woman's expression is pensive, with her eyes closed, while the child's face is visible and looking directly at the camera. The lighting and composition create a sense of tenderness and vulnerability in the scene.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-15

This is a striking black and white portrait photograph showing an intimate moment between what appears to be a mother and child. The image has a soft, artistic quality with dramatic lighting that creates deep shadows and highlights. The adult figure has their eyes closed in a serene expression while the child embraces them from behind, looking directly at the camera. The composition is close-up and personal, capturing the tender bond between the two subjects. The photograph has a timeless quality and appears to be professionally shot, with careful attention to lighting and emotional depth. There's a signature visible in the bottom right corner of the image.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-09

The image is a black-and-white photograph of a woman holding a child.

- The woman has dark hair and is wearing a dark top.

- She is looking down at the child with a gentle expression.

- Her right arm is wrapped around the child's back, holding them close.

- The child has dark hair and is wearing a light-colored shirt.

- They are looking up at the woman with a curious expression.

- Their arms are wrapped around the woman's neck, holding onto her tightly.

- The background of the image is a solid gray color.

- There are no other objects or people visible in the image.

The overall atmosphere of the image is one of warmth and intimacy, capturing a tender moment between the woman and the child.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-09

The image is a black-and-white photograph of a woman holding a child. The woman has dark hair and is wearing a dark top, with her face blurred out. She is holding the child in her arms, with the child's arms wrapped around her neck. The child also has dark hair and is looking at the camera with a neutral expression.

In the bottom-right corner of the image, there is a signature in white cursive text that reads "Christopher" followed by some illegible text. The background of the image is dark, with a vignette effect that draws attention to the woman and child. Overall, the image appears to be a tender moment between a mother and child, captured in a simple yet powerful way.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-07

The image is a black-and-white photograph of a mother and daughter hugging each other. The mother is looking down, and the daughter is looking up. The mother's hand is on the daughter's shoulder, and the daughter's hand is on the mother's chest. The daughter's hair is short and straight, and the mother's hair is long and wavy. The image has a watermark in the bottom right corner.

Created by amazon.nova-pro-v1:0 on 2025-06-07

The image is a black-and-white photograph featuring a woman and a child. The woman is holding the child close to her chest, with the child's arms wrapped around her neck. The woman's face is partially obscured by the child's head, but her expression appears serene and content. The child's face is fully visible, and they seem to be looking at something off-camera with a curious expression. The background is plain and dark, which makes the subjects stand out prominently.

Google Gemini

Created by gemini-2.0-flash on 2025-05-17

The image is a black and white photograph featuring a woman and a young child, likely a mother and daughter, in a tender embrace. The woman appears to be in her thirties or forties, with dark hair styled up away from her face. Her gaze is directed downwards with a thoughtful or perhaps slightly sad expression. The child, whose face is pressed against the woman's cheek, looks upwards towards the viewer with large, expressive eyes. The child's arms are wrapped around the woman's shoulders in a protective gesture.

The lighting in the photograph is soft and diffused, with subtle shadows that add depth and dimension to the subjects' faces. The focus is sharp on their facial expressions, emphasizing the emotional connection between them. The background is out of focus, creating a sense of intimacy and drawing the viewer's attention to the interaction between the mother and daughter. The photograph captures a moment of love, comfort, and perhaps a hint of melancholy.

Created by gemini-2.0-flash-lite on 2025-05-17

The image is a black and white portrait of a mother and child embracing. The mother, on the left, has a thoughtful expression. She appears to be looking down, with her eyes slightly closed. The child, on the right, is nestled close to the mother, gazing at the viewer with a gentle, innocent look. The child's arms are wrapped around the mother. The lighting is soft and highlights the bond between the two. The composition and tone create a sense of warmth, intimacy, and protection. The photograph has a grainy texture, which adds to its vintage feel. There is a signature in the bottom right corner.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-02

The image is a black-and-white photograph depicting two women embracing each other closely. The woman on the left appears to be older, possibly a mother, and the woman on the right seems younger, possibly a daughter. The older woman has her eyes closed, suggesting a moment of deep emotion or affection, while the younger woman is looking upward with an intense gaze. The lighting is soft and dramatic, casting shadows that highlight the emotional intimacy between the two subjects. The overall tone of the image conveys a sense of love, protection, and connection. The photograph has a vintage or artistic quality, indicated by the grainy texture and the signature in the bottom right corner.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-04

The image is a black-and-white photograph featuring a tender moment between an adult and a child. The adult, positioned on the left, has a calm and composed expression, with their eyes closed. They are holding the child close, with the child resting their head on the adult's shoulder in a relaxed manner. The child appears to be a young girl, with her arms wrapped around the adult's neck. The lighting in the photograph highlights the contours of their faces and the intimate connection between them, creating a sense of warmth and affection. The photograph has a timeless quality, evoking feelings of love, care, and familial bonds. In the bottom right corner, there is a signature and a mark that appears to be a logo or watermark.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-04

This is a black-and-white photograph depicting a tender moment between a woman and a young child. The woman, with a serene expression, is holding the child close, with the child resting their head on her shoulder and wrapping their arms around the woman's neck. The lighting is soft, creating a peaceful and intimate atmosphere. The photograph is signed in the bottom right corner.