Machine Generated Data

Tags

Amazon

created on 2022-01-08

Clarifai

created on 2023-10-25

Imagga

created on 2022-01-08

| Tag | Confidence |
|---|---|
| portrait | 31.7 |
| world | 31 |
| person | 29.9 |
| people | 27.9 |
| adult | 27.8 |
| man | 26.2 |
| male | 24 |
| looking | 20.8 |
| attractive | 20.3 |
| face | 19.2 |
| wind instrument | 17.6 |
| happy | 17.5 |
| pretty | 17.5 |
| hair | 16.6 |
| fashion | 16.6 |
| handsome | 16 |
| smiling | 15.9 |
| couple | 15.7 |
| sexy | 15.3 |
| musical instrument | 15.1 |
| smile | 15 |
| cute | 14.3 |
| love | 14.2 |
| black | 14.1 |
| lady | 13.8 |
| eyes | 12.9 |
| women | 12.7 |
| head | 12.6 |
| lifestyle | 12.3 |
| outdoor | 12.2 |
| brunette | 12.2 |
| human | 12 |
| one | 11.9 |
| outdoors | 11.9 |
| model | 11.7 |
| child | 11.4 |
| hand | 11.4 |
| sitting | 11.2 |
| phone | 11.1 |
| casual | 11 |
| happiness | 11 |
| look | 10.5 |
| serious | 10.5 |
| boy | 10.4 |
| weapon | 10.4 |
| expression | 10.2 |
| youth | 10.2 |
| holding | 9.9 |
| romance | 9.8 |
| talk | 9.6 |
| glasses | 9.3 |
| bow and arrow | 9 |
| style | 8.9 |
| microphone | 8.8 |
| pipe | 8.7 |
| business | 8.5 |
| instrument | 8.4 |
| guy | 8.4 |
| summer | 8.4 |
| studio | 8.4 |
| teen | 8.3 |
| device | 8.3 |
| telephone | 8.2 |
| family | 8 |
| businessman | 7.9 |
| together | 7.9 |
| life | 7.8 |
| men | 7.7 |
| relationship | 7.5 |
| fun | 7.5 |
| teenager | 7.3 |
| blond | 7.2 |

Google

created on 2022-01-08

| Tag | Confidence |
|---|---|
| Microphone | 90.4 |
| Beard | 88.1 |
| Black-and-white | 84.7 |
| Style | 84.1 |
| Music | 79.8 |
| Street fashion | 79.7 |
| Musician | 79.3 |
| Audio equipment | 76.8 |
| Monochrome | 76.4 |
| Eyewear | 75.1 |
| Facial hair | 72.9 |
| Smoking | 72 |
| Monochrome photography | 71.9 |
| Plucked string instruments | 69.5 |
| Happy | 68.7 |
| Moustache | 67.9 |
| Street | 66.1 |
| Musical instrument | 66 |
| Sitting | 62.3 |
| Fur | 61.3 |

Microsoft

created on 2022-01-08

| Tag | Confidence |
|---|---|
| outdoor | 98 |
| music | 94.6 |
| human face | 92.5 |
| black and white | 86.7 |
| person | 73.5 |

Color Analysis

Face analysis


AWS Rekognition

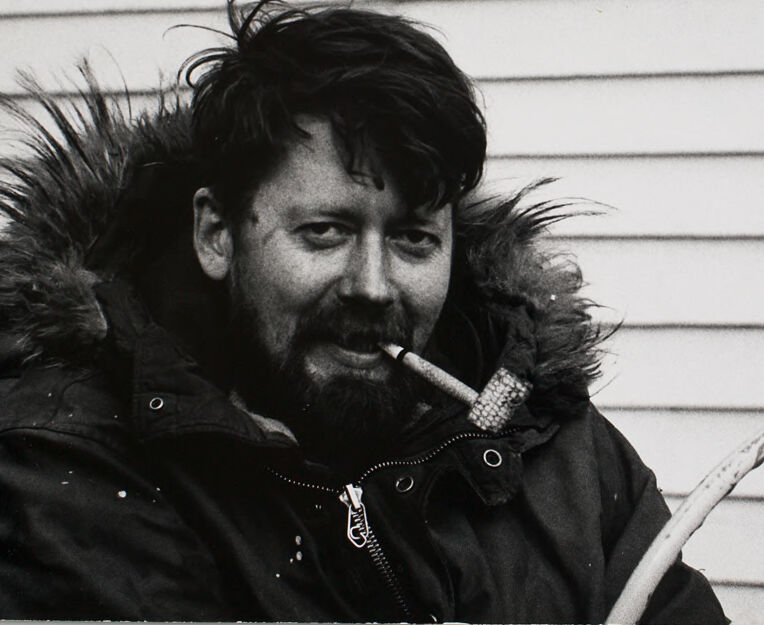

| Attribute | Value |
|---|---|
| Age | 34-42 |
| Gender | Male, 99.9% |
| Sad | 48.5% |
| Calm | 27.3% |
| Happy | 10.9% |
| Fear | 5.7% |
| Surprised | 2.3% |
| Disgusted | 2.1% |
| Angry | 1.8% |
| Confused | 1.5% |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 50 |
| Gender | Male |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Likely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 75.8% |
| people portraits | 22% |