Machine-Generated Data

Tags

Color Analysis

Face Analysis

Amazon

AWS Rekognition

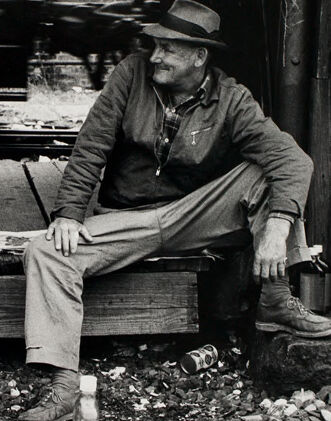

| Attribute | Value |
|---|---|
| Age | 57-65 |
| Gender | Male, 97.6% |
| Happy | 98.1% |
| Calm | 0.8% |
| Sad | 0.4% |
| Surprised | 0.2% |
| Confused | 0.2% |
| Angry | 0.1% |
| Fear | 0.1% |
| Disgusted | 0.1% |

Feature Analysis

Amazon

Clarifai

AWS Rekognition

| Feature | Confidence |
|---|---|
| Person | 99.8% |

Categories

Imagga

Created on 2022-01-22

| Category | Confidence |
|---|---|
| paintings art | 68% |
| interior objects | 13.4% |
| people portraits | 13.2% |
| food drinks | 2.6% |
| cars vehicles | 1.6% |

Captions

Microsoft

Created by unknown on 2022-01-22

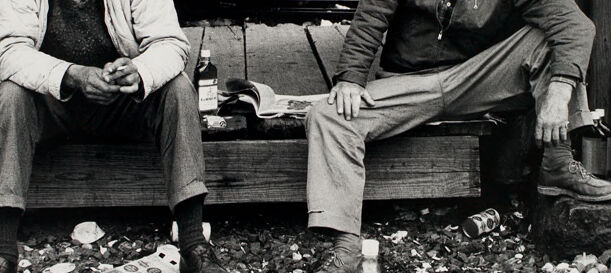

| Caption | Confidence |
|---|---|
| a man sitting on a bench | 93% |
| a man that is sitting on a bench | 91.2% |
| a man is sitting on a bench | 90.1% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-14

men sitting on a bench.

Salesforce

Created by general-english-image-caption-blip on 2025-05-16

a photograph of a group of men sitting on a train track

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-15

The image depicts two men seated on a wooden beam near what appears to be a railway track. The scene has a gritty, urban atmosphere, with discarded items such as bottles and litter scattered on the ground. In the background, a train is in motion, and a worker is visible standing on the platform of the train, providing a sense of hustle and activity. The contrast between the stillness of the seated men and the dynamic movement of the train adds depth to the composition. The setting seems industrial and rough, emphasizing themes of labor or hardship.

Created by gpt-4o-2024-08-06 on 2025-06-15

The image depicts two individuals sitting on a wooden platform next to a railway track. They appear to be casually seated, with one person leaning slightly forward and the other resting their hand on their knee. Various items are scattered around them, including a whiskey bottle and a can. Above them, the blurred outline of a train is visible, with another figure standing on the train steps in the background. The setting appears to be a gritty, industrial area, suggested by the presence of the railway, discarded items, and the spare, utilitarian structure. The scene conveys a sense of urban realism and captures a moment of life at the edge of society.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-20

The image depicts two older men sitting on a bench in what appears to be a dilapidated or industrial setting. One man is wearing a hat and the other is clean-shaven. They seem to be resting or conversing, with the background showing a person working on something in the distance. The overall scene has a gritty, black-and-white aesthetic that conveys a sense of hardship or struggle.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-20

This is a black and white photograph showing two men sitting on what appears to be a loading dock or platform near railroad tracks. In the background, there's a silhouette of what looks like a railroad worker on a train car. The two men in the foreground are dressed in work clothes - one wearing a light-colored shirt and the other in a jacket with a hat. They appear to be taking a break, with one looking to the left and the other to the right. The scene has an industrial, working-class atmosphere typical of railroad yards. There's scattered debris or gravel on the ground near the tracks, and the composition captures a candid moment in what seems to be a day's work at the railway.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-10

The image is a black-and-white photograph of two men sitting on a wooden bench, with a train in the background.

- The man on the left is wearing a light-colored jacket, dark pants, and dark shoes. He has his hands clasped together in front of him and is looking to the right.

- The man on the right is wearing a dark jacket, light pants, and dark shoes. He is wearing a hat and has his legs crossed. He is also looking to the right.

- The bench they are sitting on is made of wood and appears to be weathered.

- In the background, there is a train with a door open. A man is standing in the doorway, holding onto the railing.

- The ground around the men is covered in dirt and debris, including rocks and leaves.

- The overall atmosphere of the image suggests that the men are taking a break or waiting for something, possibly the train.

The image captures a moment in time, with the two men sitting together, looking out at the train and the surrounding environment. The contrast between the weathered bench and the modern train in the background adds to the sense of nostalgia and timelessness in the image.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-10

The image depicts a black-and-white photograph of two men sitting on a wooden platform, with a train in the background. The man on the left is wearing a light-colored jacket, dark sweater, and pants, while the man on the right is dressed in a dark shirt, light-colored pants, and a hat. Both men are seated with their legs crossed, facing each other.

In the background, a train is visible, with a man standing on the steps of one of the cars. The ground beneath the men is littered with trash, including paper and other debris. The overall atmosphere of the image suggests a casual, relaxed setting, possibly during a break or downtime for the men.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-05

A black-and-white photo shows two men sitting on a bench in front of a train. They are wearing hats and shoes. The man on the left is holding a cigarette in his hand. The man on the right is looking at something. Behind them, there is a man standing on the platform of the train. He is holding a rope and seems to be pulling something. There are some bottles and papers on the ground.

Created by amazon.nova-pro-v1:0 on 2025-06-05

The black-and-white photo shows two men sitting on a wooden bench in an outdoor area, probably at a train station. They are both wearing jackets, pants, and shoes. One of them is wearing a hat. A man standing on the train is looking at them. There are several items on the ground, including bottles, a piece of paper, and a can. There are some houses in the distance.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-17

Certainly! Here's a description of the image:

Overall Impression:

The image captures a candid, somewhat somber moment in what appears to be a railway yard or industrial setting. It's a black and white photograph, which adds a sense of timelessness and emphasizes the textures and tones.

Main Elements:

- Two Men: The primary focus of the image is on two older men seated on a wooden structure.
  - The man on the left is looking off to the side, with a contemplative or perhaps weary expression.
  - The man on the right is looking towards the side with a small smile or grin on his face.
- Railway Setting: The background and foreground provide context.
  - A railway car/train is visible, suggesting a working environment associated with trains. A blurry figure can be seen appearing to be controlling the mechanics of the train.
  - Rail tracks are present in the foreground.
  - Debris such as rocks and trash appears to surround the wooden platform.

Atmosphere & Style:

The lighting appears to be natural, contributing to the grainy quality of the image, typical of older photography. The overall mood is one of quiet observation, with an emphasis on the men and their possible connection to the industrial setting.

Created by gemini-2.0-flash on 2025-05-17

This black and white photo shows two men sitting on what looks like a small, wooden platform or step adjacent to railroad tracks. Both men appear to be of similar age, somewhere in their middle to later years. The man on the left is wearing dark socks, worn leather dress shoes, jeans, and a dark sweater under a light-colored jacket. He has his hands clasped together in his lap. The man on the right is wearing a hat, work boots, rolled-up tan pants, and a similar jacket over a plaid shirt.

A bottle of alcohol, an open book, and trash are scattered around their feet, as well as a couple of cans. In the background, we see a train car and what appears to be another man working on the train. The overall atmosphere is one of a working-class setting, perhaps during a break or lull in activity.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-01

The image is a black and white photograph depicting two older men sitting on a bench in what appears to be an industrial or railway setting. The man on the left is wearing a light-colored shirt, a vest, and dark pants. He is holding something in his hands and appears to be in conversation with the man on the right. The man on the right is wearing a dark jacket, a hat, and light-colored pants. He is holding a newspaper and seems to be engaged in the conversation.

In the background, there is a large piece of machinery or equipment, possibly related to a train or industrial operation. Another person is visible in the background, standing on a platform or elevated area, possibly operating or inspecting the machinery. The ground around the bench is littered with leaves, suggesting it might be autumn.

The overall atmosphere of the image conveys a sense of camaraderie and relaxation amidst an industrial environment. The men seem to be taking a break, possibly discussing the day's events or sharing stories.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-09

This is a black-and-white photograph depicting two individuals sitting on a wooden boxcar bench next to a railway track. The individual on the left appears to be wearing a light jacket, a dark shirt, and boots, and is sitting with one hand resting on their knee while the other hand is near their face. The individual on the right is wearing a jacket, a hat, a shirt, and pants, and has one hand resting on their knee. There are various items around them, including bottles, a bottle opener, and some scattered papers on the ground. Behind them, another person is standing on the boxcar, seemingly working on a piece of equipment or machinery. The overall setting suggests a railway station or a railway yard, and the scene has a rustic and somewhat nostalgic feel.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-09

This black-and-white photograph captures a moment of rest and camaraderie among three men in an industrial setting, likely a train yard. In the foreground, two men are seated on a wooden bench, appearing to be engaged in a casual conversation. The man on the left is wearing a light-colored jacket and a dark apron, while the man on the right is dressed in a fedora hat, a jacket, and light-colored pants. Both men have work boots on, and there are various items scattered on the ground around them, including a bottle and some papers.

In the background, a third man is standing on the steps of a train car, seemingly working or inspecting something on the train. The train car is large and has a dark exterior, with a window that reflects the light. The overall scene suggests a break in the workday, with the men taking a moment to relax and socialize. The photograph has a candid and authentic feel, capturing a slice of everyday life in a bygone era.