Machine Generated Data

Tags

Amazon

created on 2022-01-22

Clarifai

created on 2023-10-26

Imagga

created on 2022-01-22

Google

created on 2022-01-22

| Rectangle | 84.5 |
| Font | 82.3 |
| Motor vehicle | 79.4 |
| Advertising | 63.8 |
| Art | 61 |
| Book cover | 59.6 |
| Book | 58.7 |
| Publication | 57.6 |
| Metal | 57.3 |
| Monochrome | 52.6 |
| Visual arts | 52.1 |
| Monochrome photography | 50 |

Microsoft

created on 2022-01-22

| text | 99.9 |
| drawing | 94.6 |
| black and white | 90 |
| handwriting | 86.6 |
| poster | 83.3 |
| cartoon | 69.8 |
| street | 59.3 |
| monochrome | 59.3 |
| old | 48.2 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Age | 24-34 |
| Gender | Male, 96.5% |
| Calm | 98.2% |
| Angry | 0.5% |
| Sad | 0.4% |
| Surprised | 0.3% |
| Fear | 0.2% |
| Confused | 0.2% |
| Disgusted | 0.1% |
| Happy | 0.1% |

Feature analysis

Categories

Imagga

| streetview architecture | 54% |
| paintings art | 34.5% |
| interior objects | 5.4% |
| food drinks | 4.7% |

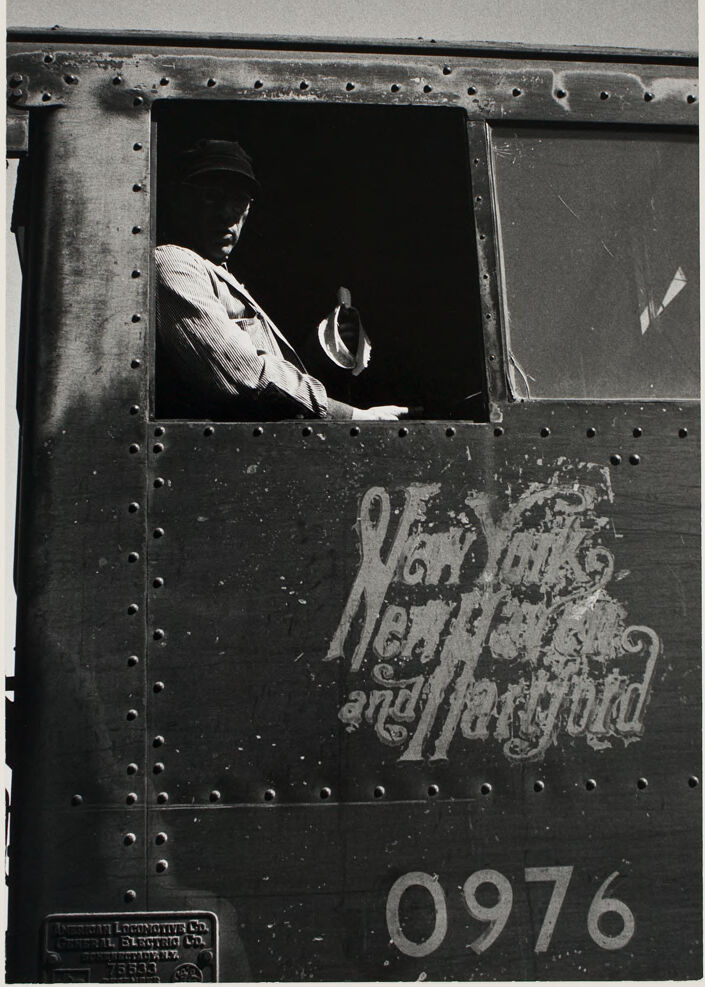

Captions

Microsoft

created on 2022-01-22

| a close up of a sign | 74.5% |
| graffiti on a wall | 42.9% |
| an old photo of a person | 40.8% |

Text analysis

Amazon

0976

L.D.

GENER 14 ELECTRIC L.D.

LOCANOTIVE

75.333

ELECTRIC

ANTRICAN LOCANOTIVE LTD,

GENER 14

vellarged

LTD,

ANTRICAN

adidas

0976

JONENEETANY

75533

0976

JONENEETANY

75533