Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 31-41 |
| Gender | Male, 100% |
| Calm | 98.3% |
| Sad | 1.2% |
| Surprised | 0.1% |
| Angry | 0.1% |
| Happy | 0.1% |
| Confused | 0.1% |
| Disgusted | 0% |
| Fear | 0% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 37-45 |
| Gender | Male, 99.5% |
| Sad | 55.6% |
| Calm | 41.3% |
| Angry | 1.5% |
| Surprised | 0.5% |
| Confused | 0.3% |
| Fear | 0.3% |
| Disgusted | 0.3% |
| Happy | 0.3% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 22-30 |
| Gender | Female, 57.2% |
| Fear | 30.3% |
| Sad | 28.7% |
| Calm | 20.2% |
| Happy | 9.8% |
| Disgusted | 4.6% |
| Surprised | 2.7% |
| Angry | 2.7% |
| Confused | 0.9% |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Possible |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Possible |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Likely |

Feature analysis

Categories

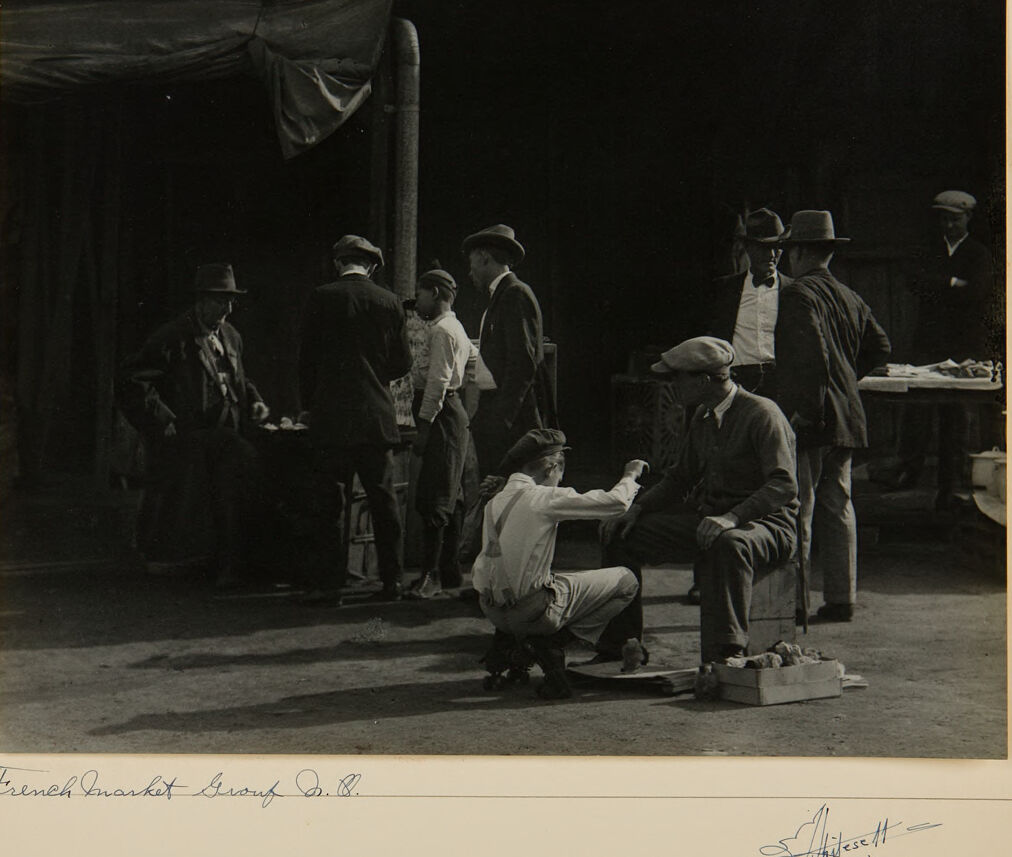

Imagga

| Category | Confidence |
|---|---|
| interior objects | 43.2% |
| events parties | 18.1% |
| streetview architecture | 11.9% |
| beaches seaside | 11.5% |
| food drinks | 5.8% |
| people portraits | 2.6% |
| paintings art | 2.4% |
| nature landscape | 1.9% |

Captions

Microsoft

created on 2022-01-14

| Caption | Confidence |
|---|---|
| a vintage photo of a group of people standing in front of a crowd | 82.4% |
| a vintage photo of a group of people around each other | 81.8% |
| a vintage photo of a group of people in front of a crowd | 81.7% |