Machine Generated Data

Face analysis

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 27-37 |
| Gender | Female, 100% |
| Surprised | 79.2% |
| Calm | 9.1% |
| Sad | 3.7% |
| Fear | 3.3% |
| Confused | 2% |
| Angry | 1.5% |
| Happy | 0.8% |
| Disgusted | 0.4% |

Feature analysis

AWS Rekognition

| Person | 98.6% |

Categories

Imagga

Created on 2022-01-09

| pets animals | 99.9% |

Captions

Microsoft

Created by unknown on 2022-01-09

| graphical user interface | 35.9% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-14

actor in a promotional portrait.

Salesforce

Created by general-english-image-caption-blip on 2025-05-20

a photograph of a woman is brushing her teeth with a toothbrush

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-10

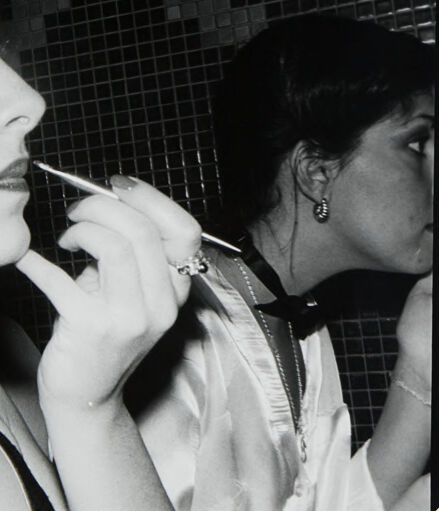

The image is a black-and-white photograph displaying women in a restroom setting, with tiled walls visible in the background. One woman is holding an applicator or brush, wearing a necklace and ring, while another woman is partially visible wearing earrings and a satin-like blouse. The handwritten text at the bottom reads "The Ladies Room," accompanied by the year "1975."

Created by gpt-4o-2024-08-06 on 2025-06-10

The image is a black and white photograph featuring two women styled with 1980s fashion and accessories. One woman is holding a makeup brush or similar tool, and appears to be applying cosmetics. She is wearing a necklace and a sleeveless top. The second woman, who is partially turned away, is wearing a satin material ranging from top or jacket and earrings. The background shows a tiled wall typical of a bathroom setting. The photograph is framed with a white border, on which is handwritten "The Ladies Room" along the bottom edge.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-15

The image shows a woman with dark, curly hair applying makeup, likely lipstick, in what appears to be a dressing room or backstage area. She has a pensive expression on her face as she focuses on her task. The background includes a grid-like pattern, suggesting this may be a theater or performance setting. The image has a vintage, black-and-white aesthetic, adding to the sense of time and place.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-15

This is a black and white photograph titled "The Ladies Room" showing a profile view of a woman smoking a cigarette. She has dark, styled hair in a 1950s-era fashion and appears to be wearing a light-colored top with a necklace. The photo has a candid, intimate quality typical of mid-century social photography. In the background, there appears to be a tiled or gridded wall pattern, and another person is partially visible. The composition and lighting create a dramatic, film noir-like atmosphere.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-10

The image is a black-and-white photograph of a woman applying lipstick in a bathroom, with the title "The Ladies Room" handwritten at the bottom.

- The woman has dark, curly hair and is wearing a sleeveless dress or top.

- She is holding a tube of lipstick in her right hand and applying it to her lips.

- Her left hand is not visible in the frame.

- In the background, there are other women standing around the bathroom, but they are not clearly visible.

- One woman is partially visible behind the main subject, with only her head and shoulders showing.

- Another woman is partially visible on the right side of the frame, with only her head and part of her arm showing.

- The bathroom has tiled walls and a tiled floor.

- The tiles are small and square, and they are arranged in a grid pattern.

- The walls and floor appear to be made of ceramic or porcelain tiles.

- The overall atmosphere of the image suggests that it was taken in the 1950s or 1960s.

- The style of clothing and hairstyles worn by the women in the image is consistent with fashion trends from that era.

- The use of black-and-white film and the handwritten title at the bottom of the image also suggest that it was taken during this time period.

In summary, the image is a black-and-white photograph of a woman applying lipstick in a bathroom, with other women in the background. The image appears to have been taken in the 1950s or 1960s, based on the style of clothing and hairstyles worn by the women in the image, as well as the use of black-and-white film and the handwritten title at the bottom of the image.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-10

The image is a black-and-white photograph of a woman applying lipstick in front of a mirror, with another woman visible in the background. The woman in the foreground has dark hair and is wearing a sleeveless top, a necklace, and a ring on her left hand. She is holding a lipstick applicator to her mouth.

In the background, there is a tiled wall with small square tiles. Another woman can be seen behind the main subject, also facing the mirror. The overall atmosphere suggests that the women are getting ready for a night out or a special occasion.

At the bottom of the image, handwritten text reads "The Ladies Room" in blue ink. This caption provides context to the scene, indicating that the photograph was taken in a ladies' restroom.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-07

The black-and-white photo features a woman with curly hair applying makeup to her face. She is holding a brush and is looking at herself in a mirror. She is wearing a necklace and a ring. Another woman is standing behind her, also looking at the mirror. The wall behind them has a tile design. The photo has a white border and the words "The Ladies Room" written in the bottom right corner.

Created by amazon.nova-pro-v1:0 on 2025-06-07

The image is a black-and-white photograph featuring a woman with curly hair applying lipstick. She is holding a lipstick tube in her right hand and is applying it to her lips. The woman is wearing a necklace and a sleeveless top. Behind her, another woman is visible, wearing a bow tie and a necklace. The background appears to be a tiled wall, suggesting that the setting might be a bathroom or a dressing room. The photograph has a vintage aesthetic, with a slightly blurred background and a focus on the woman applying makeup. The image is titled "The Ladies Room," which likely refers to the setting of the photograph.

Google Gemini

Created by gemini-2.0-flash on 2025-05-17

Certainly! Here's a description of the image:

Image Description

The image is a black and white photograph, likely taken in the late 1970s or early 1980s, titled "The Ladies Room." It depicts a scene in a bathroom or powder room, featuring three women.

- Main Subject: A woman in the foreground is shown in profile, applying lipstick with a brush. She has dark, curly hair, a necklace, and a ring on her finger.

- Secondary Subject: Another woman is partially visible next to her, also seemingly applying makeup and looking toward a mirror or vanity. She has dark hair and is wearing earrings and a white garment with a black bow.

- Background: A third woman is partially visible. The background is a tiled wall, adding to the bathroom atmosphere.

The composition and style suggest a candid or documentary approach, capturing a moment of women getting ready in a public or semi-public space. The overall tone is somewhat intimate and casual.

The inscription "The Ladies Room" is written in script at the bottom of the image, along with the artist's signature, and the year "1979".

Created by gemini-2.0-flash-lite on 2025-05-17

Certainly! Here's a description of the image:

Overall Impression:

The photograph is a black and white print. It appears to be a candid shot of women in a "ladies' room" setting. The atmosphere seems somewhat intimate and perhaps suggestive of a nightlife scene.

Key Elements:

- Subject: The central figure is a woman with dark, curly hair. She is in the process of applying lipstick. Her face is the focus of the image.

- Composition: The composition is a tight shot, focusing on the faces and upper bodies of the women. This enhances the intimate feel.

- Setting: The background features a tiled wall, possibly in a restroom or dressing area. This hints at a location like a club, bar, or other social space.

- Additional Figures: There are other women visible in the background, partially obscured. The presence of these additional women adds depth and creates a sense of place.

- Details: The woman applying lipstick is wearing a pendant necklace and a ring. A second woman wears earrings and has a black ribbon tied around her neck.

- Inscription: Written at the bottom "The Ladies Room" and some handwriting that looks like a date.

Overall Tone:

The photograph has a sense of immediacy and realism, enhanced by the black and white medium. It evokes a sense of the time period (based on the fashion and styling of the women). The setting suggests a glimpse into a moment in women's private space.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-05

The image is a black and white photograph titled "The Ladies Room." It depicts a woman with curly hair having her makeup applied by another individual. The woman in the foreground is wearing a sleeveless top and a necklace. The person applying makeup is holding a brush and appears to be focused on their task. In the background, another woman is partially visible, observing the scene. The setting appears to be a restroom, as suggested by the tiled wall in the background. The photograph captures a candid moment, likely taken in a social or preparatory setting.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-04

The image is a black-and-white photograph featuring a woman in the foreground, who appears to be applying makeup, possibly lipstick, using a brush to her lips. She is wearing a necklace and has her hair styled in curls. Her gaze is directed away from the camera, and she seems focused on the task at hand. In the background, another individual is partially visible, and there is a patterned tiled wall behind them. The photograph has a vintage or candid feel, suggesting it might have been taken in a setting like a dressing room or backstage area. The text "The Ladies Room" is handwritten at the bottom of the image.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-04

This black-and-white photograph captures a candid moment in what appears to be a ladies' room, as indicated by the handwritten caption at the bottom. The central figure is a woman with voluminous, styled hair, who is looking to the side with a contemplative expression. She is smoking a cigarette, holding it delicately with her fingers. Behind her, another woman is partially visible, also smoking a cigarette and looking in the same direction. The background features a patterned wall, possibly made of small tiles or a similar material, which adds texture to the scene. The overall atmosphere suggests a relaxed, social setting, possibly during a party or event. The photograph has a vintage feel, likely from the mid-20th century, given the style of clothing and hair. The image is framed with a thin black border, enhancing its classic appearance.