Machine Generated Data

Face analysis

Amazon

AWS Rekognition

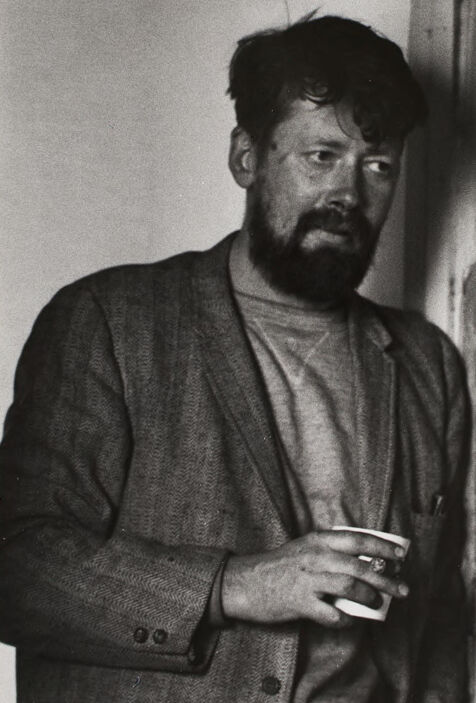

| Attribute | Value |
| --- | --- |
| Age | 35-43 |
| Gender | Male, 100% |
| Fear | 64% |
| Sad | 30.6% |
| Calm | 2.1% |
| Confused | 1.2% |
| Disgusted | 0.8% |
| Angry | 0.7% |
| Happy | 0.5% |
| Surprised | 0.2% |

Feature analysis

Amazon

AWS Rekognition

| Label | Confidence |
| --- | --- |
| Person | 99.4% |

Categories

Imagga

Created on 2022-01-08

| Category | Confidence |
| --- | --- |
| pets animals | 98.4% |

Captions

Microsoft

Created by unknown on 2022-01-08

| Caption | Confidence |
| --- | --- |
| a man standing in front of a mirror posing for the camera | 73.8% |
| a man standing in front of a mirror | 73.7% |
| a man sitting in front of a mirror posing for the camera | 60.6% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-13

photograph of a man with a beard.

Salesforce

Created by general-english-image-caption-blip on 2025-05-02

a photograph of a man with a beard and a beard

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-15

The image depicts a person standing indoors near a doorway or a corner of a room. They are wearing a casual blazer with a textured pattern over a plain shirt, and holding a small cup in one hand. A strap or cord is seen hanging on the wall to the right of the figure. The setting features a somewhat dilapidated appearance, with visible wear on the doorframe and surrounding structures. The overall mood appears candid and monochromatic.

Created by gpt-4o-2024-08-06 on 2025-06-15

The image depicts a person standing in what appears to be an indoor space with a visible door frame. The individual is holding a small cup in one hand. They are dressed in a casual attire consisting of a jacket over a t-shirt, which is slightly loose-fitting. The lighting suggests the photograph might be taken in natural lighting and gives a slightly shadowed effect to the space, indicating a lack of direct light. The overall atmosphere of the image conveys a casual and intimate setting.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-18

The image shows a man with a beard, wearing a jacket, standing in a doorway or corner of a room. He has a serious expression on his face and is holding something, possibly a cup or glass, in his hands. The image has a black and white, vintage aesthetic, suggesting it is an older photograph.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-18

This is a black and white photograph showing a bearded person standing in what appears to be a doorway or hallway. They are wearing a casual outfit consisting of what looks like a tweed or textured jacket over a t-shirt or sweater. They are holding what appears to be a drink or cup in their hand. The image has a somewhat candid, informal quality typical of mid-20th century documentary-style photography. The lighting creates strong contrasts, and the composition has the subject positioned against a light background with darker elements framing the scene.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-05

The image is a black-and-white photograph of a man holding a cup, standing in front of a wall.

The man has dark hair and a beard, and he is wearing a dark jacket over a light-colored shirt. He is holding a white cup in his right hand, which is positioned in front of his chest. The background of the image shows a wall with a doorway or window frame on the left side. The wall appears to be made of concrete or stone, and there are some marks or stains on it. The overall atmosphere of the image suggests that the man is in a casual setting, possibly at home or in a workplace.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-05

This image is a black-and-white photograph of a man standing in a doorway, holding a cup. The man has dark hair and is wearing a light-colored shirt under a dark jacket. He is looking to the right of the camera with a serious expression. His left hand holds a white cup, and his right hand is not visible.

The background of the image shows a doorway with a white wall on the left side and a white door frame on the right side. A black cord or wire is attached to the wall on the right side of the doorway. The overall atmosphere of the image suggests that it was taken indoors, possibly in a home or office setting.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-03

This black and white image shows a man standing in front of a door. He is wearing a gray jacket and a gray shirt. He is holding a cup in his hand. The door has a wooden frame and a white door panel. There is a black strap hanging on the wall on the right side of the door. The image has a vintage look.

Created by amazon.nova-pro-v1:0 on 2025-06-03

The black-and-white photo shows a man standing in front of a door. He is wearing a suit with a shirt underneath. He is holding a cup in his hand, and he is looking at something. He has a beard and short hair. The door is open, and it has a rope hanging on the side. The wall behind him is white, and it has some stains on it.

Google Gemini

Created by gemini-2.0-flash on 2025-05-16

The black-and-white photograph depicts a middle-aged man standing in a doorway. He is wearing a tweed jacket over a t-shirt. His hair is thick and slightly disheveled, and he has a full beard. He is holding a white cup in his hand. He is looking off to the right of the frame, with a thoughtful or contemplative expression on his face.

The doorway frames the man, with the wall to the right of the frame showing a vertical cable hanging. The background is plain, with a simple wall visible through the doorway. The lighting is soft, creating subtle shadows and highlighting the texture of the man's jacket and the rough surface of the wall. The overall composition feels intimate and slightly informal.

Created by gemini-2.0-flash-lite on 2025-05-16

Here's a description of the image:

This is a black and white photograph of a man standing inside what appears to be a doorway.

Subject:

- The man has a rugged appearance. He has a dark, full beard and dark hair.

- He is wearing a jacket over a t-shirt.

- He's holding a disposable cup in his right hand.

Setting and Composition:

- The setting appears to be a rather plain interior, possibly a home or a studio.

- The composition is simple, focusing on the man and the doorway.

- The lighting is natural, creating soft shadows and enhancing the contrast of the image.

Overall Impression:

The photo has a candid and intimate feel. The man looks thoughtful and slightly weary, the shot seems authentic and a glimpse into a real moment in his life.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-01

The image is a black-and-white photograph of a man standing in a doorway. He is holding a cup in his left hand. The man has a beard and mustache, and he is wearing a tweed jacket over a light-colored shirt. The background of the image is relatively plain, with a simple wall and door frame visible. The lighting in the photograph is somewhat dim, creating a moody atmosphere. The man's expression is neutral, and he appears to be looking directly at the camera. The overall tone of the image suggests a candid, everyday moment.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-10

The image is a black-and-white photograph of a man standing indoors. He has a beard and is looking to the side with a thoughtful or contemplative expression. He is wearing a suit jacket over a collared shirt and is holding a small cup or mug in his right hand, which suggests he might be drinking something. The background shows a simple, possibly aged, interior with visible walls and a framed mirror, adding a sense of depth to the image. The lighting is soft and natural, casting gentle shadows and contributing to the overall mood of the photograph.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-10

This black-and-white photograph depicts a man standing indoors, leaning slightly against a wall. He has a beard and is wearing a jacket over a collared shirt. In his hand, he holds a small cup. The setting appears to be a simple room with plain walls, and there is a visible electrical cord running along the wall. The lighting is soft, creating a somewhat moody atmosphere.