Machine Generated Data

Face analysis

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 36-44 |
| Gender | Male, 99.9% |
| Calm | 92.6% |
| Surprised | 6.5% |
| Fear | 6.4% |
| Confused | 3.5% |
| Sad | 2.3% |
| Angry | 0.7% |
| Disgusted | 0.7% |
| Happy | 0.3% |

Feature analysis

AWS Rekognition

| Label | Confidence |
| --- | --- |
| Person | 99.1% |

Categories

Imagga

Created on 2018-08-20

| Category | Confidence |
| --- | --- |
| paintings art | 39.1% |
| food drinks | 21.7% |
| text visuals | 13.5% |
| streetview architecture | 10.1% |
| events parties | 9.4% |
| interior objects | 5.7% |
| people portraits | 0.1% |
| pets animals | 0.1% |
| nature landscape | 0.1% |

Captions

Microsoft

Created by unknown on 2018-08-20

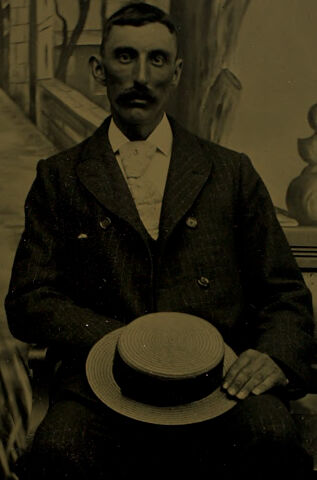

| Caption | Confidence |
| --- | --- |
| an old photo of a man | 89.1% |
| old photo of a man | 87.4% |
| a man posing for a photo | 83.1% |

Salesforce

Created by general-english-image-caption-blip on 2025-05-05

a photograph of a man in a suit and hat sitting on a bench

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-11

This is a vintage photograph depicting a seated individual dressed in formal attire, including a buttoned coat and collar. The person is holding a straw boater hat in their lap. The background features an artistic illustration resembling a park or garden scene, with trees, an architectural structure, and a curved path leading into the distance. The ambiance reflects a classic, historical style often found in portrait photography from the late 19th to early 20th century.

Created by gpt-4o-2024-08-06 on 2025-06-11

The image is an old-style photograph depicting a person seated. The individual is wearing a formal double-breasted suit with a high collared shirt underneath. They are holding a round straw boater hat with a dark band resting on their lap. The background appears to be a painted backdrop, typical of portrait photography from earlier eras, featuring an outdoor scene with a tree and what seems to be a pathway leading through an archway. The setting gives the appearance of a studio portrait commonly found in the 19th century.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-16

The image appears to be a black and white photograph of a man sitting in an ornate chair or armchair. He is wearing a suit and hat and has a serious expression on his face. The background behind him appears to be a painted or decorated wall, with what looks like a vase or other decorative object visible. The overall atmosphere of the image suggests it may be a portrait or studio photograph from an earlier era.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-16

This is a vintage tintype or similar early photographic portrait, likely from the late 1800s or early 1900s. The subject is seated and wearing a dark formal suit with a white collar and tie. They are holding a straw boater hat in their lap. The photograph was taken in a studio setting with a painted backdrop featuring trees and architectural elements like arches. The backdrop creates a romantic, park-like scene. The photograph has the sepia tones and slight imperfections characteristic of early photographic processes. The subject is formally posed in the style typical of portrait photography from that era.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-09

The image is a black-and-white photograph of a man sitting on a bench, holding a hat in his lap. The man is dressed in a dark suit and tie, with a white shirt and a dark jacket. He has a mustache and is looking directly at the camera.

The background of the image features a painted backdrop with trees, a stone wall, and a path leading to a building. The overall atmosphere of the image suggests that it was taken in the late 19th or early 20th century, possibly in a studio setting.

The man's formal attire and the style of the backdrop suggest that the photograph was taken for a special occasion or as a portrait. The image provides a glimpse into the past, capturing a moment in time that is both nostalgic and intriguing.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-09

The image is a black-and-white photograph of a man sitting on a bench, with a hat in his lap. The man is wearing a dark suit and a white shirt, and he has a mustache. He is sitting on a bench that appears to be made of wood, and there is a small table or shelf behind him with a decorative object on it. The background of the image is a painted backdrop, which depicts a scene of trees and buildings. The overall atmosphere of the image suggests that it was taken in a studio, possibly for a formal portrait.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-05-31

The image is a vintage photograph of a man sitting on a bench with his hat on his lap. He is wearing a suit and tie. The background is a painted scene with a tree, a pathway, and a building. The painting has a slightly blurry effect, giving it an old-fashioned look. The man's expression is serious, and he is looking directly at the camera. The image has a monochromatic filter, giving it a black-and-white appearance.

Created by amazon.nova-pro-v1:0 on 2025-05-31

The image is a black-and-white photograph of a man sitting on a bench. He is wearing a suit with a bow tie and holding a hat in his left hand. He is looking to the right. Behind him, there is a garden with a stone pathway, a stone archway, and a vase on a table. There are also trees and a house in the background.

Google Gemini

Created by gemini-2.0-flash on 2025-05-05

Here is a description of the image:

This is a vintage portrait photograph of a man sitting on a bench, likely taken in a studio setting. The man has a serious expression, with a prominent mustache, and is wearing a dark double-breasted suit over a light-colored shirt and tie. He holds a boater hat in his left hand, resting it on his knee. He is wearing dark shoes.

The backdrop is a painted scene of an outdoor landscape, featuring trees, a walkway with an archway, and what appears to be a building. To the right of the man, there's a decorative element on the bench that looks like a series of stacked, round shapes. The overall tone of the photograph is dark and has the appearance of an older photograph.

Created by gemini-2.0-flash-lite on 2025-05-05

Here's a description of the image:

Overall:

The image is a sepia-toned portrait of a man in a studio setting. It has an antique feel, likely from the late 19th or early 20th century.

Subject:

- The Man: The man is the central focus. He's sitting on a bench, dressed in a dark suit with a collared shirt and what appears to be a bow tie. He has a prominent mustache. His hat rests in his lap, held in his hand.

- Pose & Expression: The man is looking directly at the camera. His expression is serious.

Background:

- Painted Backdrop: The background is a painted studio scene. It features a pathway with arches and a building, with trees along the side. A decorative element sits on top of the bench to the man's right. The background is meant to give the impression of an outdoor scene.

Technical Aspects & Condition:

- Sepia Tone: The sepia tone gives the image an aged, vintage quality.

- Lighting: The lighting is relatively simple, with the man well-lit and the background softer.

- Condition: The image appears to be a photograph, perhaps a glass negative or a print from that era. Some of the details are slightly blurred or lost, which is typical of older photographs.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-01

The image is a vintage photograph featuring a man posing in formal attire. Here are the detailed observations:

Subject: The man has a mustache and is wearing a dark, double-breasted suit jacket with a white shirt and a bow tie. He is holding a light-colored hat in his left hand.

Background: The backdrop appears to be a painted studio background, depicting an outdoor scene with trees and a building, possibly a house or a small structure. The setting suggests it is a studio portrait rather than an outdoor photograph.

Pose: The man is seated, leaning slightly forward with his left elbow resting on a table or a prop. His posture is upright and formal, typical of posed portraits from earlier times.

Lighting and Tone: The photograph has a sepia tone, which is characteristic of older photographs. The lighting is soft and even, highlighting the man's face and attire clearly.

Additional Elements: There is a decorative vase or urn on the table beside the man, adding to the formal and staged nature of the portrait.

Overall, the image captures a formal portrait of a man from what appears to be the late 19th or early 20th century, showcasing the fashion and photographic styles of that era.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-01

The image is a sepia-toned photograph of a man seated on a staircase. The man is dressed in formal attire, including a suit, a white shirt, and a tie. He is holding a straw hat in his right hand. The background features a painted scene that appears to depict a street with an archway and trees, giving the impression of a vintage or staged backdrop commonly used in photography studios. The overall tone of the photograph is aged, suggesting it may be from the late 19th or early 20th century.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-01

This is a sepia-toned vintage photograph of a man seated on a bench. He is dressed formally in a dark suit with a white shirt and a bow tie. The man has short dark hair, a mustache, and is looking directly at the camera with a serious expression. He is holding a straw hat with a dark band on his lap. The background features a painted scene with trees, a stone archway, and a decorative urn on a ledge. The overall style of the photograph suggests it was taken in the late 19th or early 20th century.