Machine Generated Data

Face analysis

Amazon

AWS Rekognition

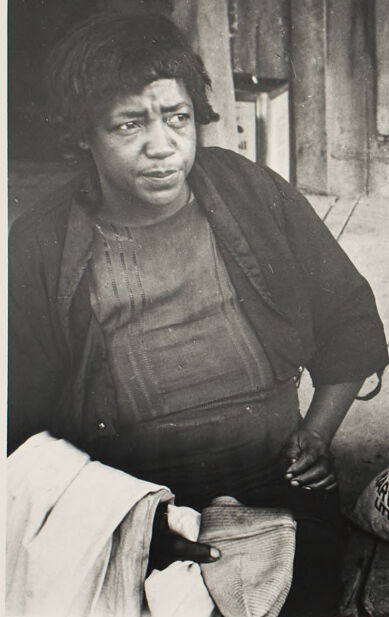

| Attribute | Value |
| --- | --- |
| Age | 43-61 |
| Gender | Female, 98.7% |
| Confused | 59.3% |
| Sad | 16.9% |
| Calm | 8.4% |
| Angry | 5.8% |
| Surprised | 3.1% |
| Fear | 2.7% |
| Disgusted | 2.2% |
| Happy | 1.6% |

Feature analysis

Amazon

| Feature | Confidence |
| --- | --- |
| Hat | 99.9% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 91% |
| people portraits | 2.9% |
| pets animals | 2.7% |
| nature landscape | 2.7% |

Captions

Microsoft

Created by unknown on 2021-12-15

| Caption | Confidence |
| --- | --- |
| a group of people in an old photo of a person | 81.4% |
| a group of people posing for a photo | 81.1% |
| a couple of people that are posing for a photo | 76.5% |

Clarifai

Created by general-english-image-caption-blip on 2025-05-02

| Caption | Confidence |
| --- | --- |
| a photograph of a woman in a straw hat sitting on a bench | -100% |

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-16

Here's a description of the image:

Overall Impression:

The photograph is a black-and-white image of two African-American women seated on a wooden porch or platform. The lighting is soft, and the image has a slightly vintage feel.

People:

- Woman 1 (Left): She is seated, looking towards the left with a serious expression. She has dark hair, is wearing a simple, dark outfit, and holds a bundle of clothing in her hands.

- Woman 2 (Right): She is wearing a wide-brimmed straw hat, dark clothing, and a white skirt. Her expression is focused, and she is looking to her left.

Setting:

- The backdrop is weathered wooden planks, suggesting a simple or rural setting, possibly a cabin or a porch.

- There is a bag leaning against the platform.

Possible Context:

The image may capture a moment of rest or work. The women's white skirts and bundles of clothing evoke an earlier era and suggest that they may be at work.

Created by gemini-2.0-flash on 2025-05-16

Here's a description of the image:

This is a black-and-white photograph showing two women seated outside on what appears to be a wooden porch.

On the left, a woman with short, dark hair is seated and looking to the side. She is wearing a dark-colored top over what might be a textured or patterned garment, and she is holding cloth in her hands.

To her right, another woman is wearing a wide-brimmed straw hat and a dark sweater or jacket. She is also looking to the side, away from the camera. There is a bag with writing on it next to her.

The wooden porch or platform they are sitting on is visible, along with a wooden wall or door behind them. In the background, the lower half of a person can be seen standing in what might be a doorway, and clothing hangs on the wall. The image appears to be a vintage or historical photograph.