Machine Generated Data

Face analysis

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 37-45 |
| Gender | Male, 95.8% |
| Angry | 71.3% |
| Confused | 16.4% |
| Calm | 11% |
| Disgusted | 0.5% |
| Surprised | 0.3% |
| Sad | 0.2% |
| Fear | 0.2% |
| Happy | 0.1% |

Feature analysis

AWS Rekognition

| Feature | Confidence |
|---|---|
| Person | 99.4% |

Categories

Imagga

Created on 2022-01-09

| Category | Confidence |
|---|---|
| paintings art | 99.9% |

Captions

Microsoft

Created by unknown on 2022-01-09

| Caption | Confidence |
|---|---|
| a vintage photo of a man | 88.4% |
| a vintage photo of a man holding a book | 62.8% |
| a vintage photo of a man holding a book posing for the camera | 57.5% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-12

a portrait of a man.

Salesforce

Created by general-english-image-caption-blip on 2025-05-17

a photograph of a man in a native american indian headdress

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-16

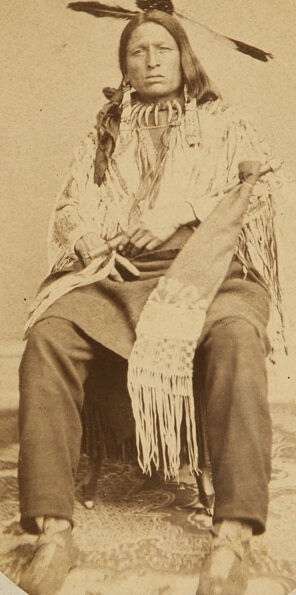

The image shows an individual seated on a chair, dressed in traditional Indigenous attire. The clothing features fringed and embroidered elements, including a shawl or robe draped over the individual’s shoulders. A prominent feathered headdress adorns the individual’s head. The seated figure rests on a patterned carpet, which adds texture and detail to the setting. The photograph is sepia-toned and framed within an oval border, giving it a vintage appearance.

Created by gpt-4o-2024-08-06 on 2025-06-16

The image depicts a sepia-toned photograph of a person seated in a studio setting. The individual wears traditional Native American attire, including a fringed garment and moccasins. There is a headdress featuring two feathers extending from the top, and the figure holds a long fringed object draped over their lap. The background includes a patterned floor covering. The image is framed within an oval border on a textured paper.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-19

The image depicts a Native American man wearing traditional clothing and accessories. He is sitting in an outdoor setting, with a rocky landscape visible in the background. The man has long hair and is wearing a feathered headdress, a fringed jacket, and leggings. He appears to be holding a pipe or other object in his hands. The image has an oval frame and appears to be an old photographic print.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-19

This is a historical sepia-toned photograph, likely from the 19th century, showing a Native American individual in traditional dress. The subject is wearing a fringed buckskin shirt, dark pants, and has a single feather in their hair. They are seated in a formal portrait pose against a plain backdrop. The photograph is presented in an oval format, which was a common style for portrait photography of that era. The image shows remarkable detail in the traditional clothing, particularly the intricate fringe work and what appears to be beaded or decorative elements. The photograph has some aging and slight discoloration around the edges, which is typical of photographs from this time period.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-09

The image is an antique photograph of a Native American man, likely from the late 19th or early 20th century. The photograph is sepia-toned and features the man sitting on a rug or blanket, with his legs crossed and his hands resting on his lap. He is wearing traditional Native American clothing, including a fringed shirt, pants, and moccasins. A feathered headdress adorns his head, and a long, fringed sash hangs from his shoulder.

The background of the photograph is a plain wall, and the overall atmosphere suggests a formal portrait. The man's serious expression and traditional attire convey a sense of dignity and cultural pride. The photograph appears to be a studio portrait, given the plain background and the man's formal pose.

The image provides a glimpse into the history and culture of Native American communities during the late 19th and early 20th centuries. It highlights the importance of preserving cultural heritage and the need to respect and appreciate the traditions of indigenous peoples.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-09

This image is a vintage photograph of a Native American man, likely from the 19th century. The man is seated on a chair, facing the camera directly. He has long hair and is adorned with traditional attire, including a feathered headdress, a fringed shawl or blanket, and moccasins. His clothing appears to be made of animal hides or woven fibers.

The background of the photograph is a plain wall, with a patterned rug or mat beneath the man's feet. The overall atmosphere of the image suggests a formal portrait, possibly taken by a photographer who traveled to Native American communities to document their culture and way of life.

The photograph is presented in an oval frame, which adds to its vintage charm. The frame is surrounded by a white border, with some discoloration and wear visible around the edges. This suggests that the photograph has been handled and displayed over time, and may have been part of a larger collection or exhibition.

Overall, this image provides a fascinating glimpse into the lives and traditions of Native American communities during the 19th century. It is a valuable historical artifact that can help us better understand the cultural heritage of these communities and the ways in which they were represented and documented during this period.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-08

The image appears to be an old photograph of a Native American man. He is sitting on a chair with his legs crossed, wearing a traditional Native American outfit. He has a feathered headdress on his head and is holding a long object, possibly a spear or a staff. The photograph has a vintage look, with a sepia tone and a slightly blurry effect. The background is a plain, light-colored surface.

Created by amazon.nova-pro-v1:0 on 2025-06-08

The old photo shows a man sitting on a carpet, wearing a traditional outfit. He is wearing a white shawl with fringes and a white shirt underneath. He is also wearing a long-sleeved shirt with fringes and a pair of pants. He is holding a long piece of cloth with fringes, which is probably a blanket. He is wearing a feather headdress, which is a traditional headdress of Native Americans.

Google Gemini

Created by gemini-2.0-flash on 2025-05-17

Here is a description of the image:

The image is an old, sepia-toned photograph of a Native American man. The photo is presented in an oval format, set against a rectangular paper background that shows signs of aging, with yellowing and some creasing around the edges.

The Native American man is seated and faces the camera directly. He has a stoic expression. He is wearing traditional clothing that includes a fringed shawl or wrap over a plain shirt or jacket. Around his neck, he has a large necklace made of what appears to be bones or beads. In his hair, he wears two feathers. He is seated on what looks like a patterned blanket or rug, and his feet are clad in moccasins.

The overall quality of the photograph suggests it is a historical portrait, possibly taken in the late 19th or early 20th century. The aged paper and sepia tone contribute to the vintage aesthetic.

Created by gemini-2.0-flash-lite on 2025-05-17

Certainly! Here's a description of the image:

Overall Impression

The image is a sepia-toned portrait of a Native American man. The photograph has an oval frame within a larger rectangular border. It appears to be an older photograph, possibly from the late 19th or early 20th century.

Description

- Subject: The central focus is a Native American man. He is seated, wearing traditional attire.

- Clothing and Accessories:

  - He is wearing a fringed shawl or blanket draped over his shoulders.

  - He has a neckpiece consisting of several beads.

  - He has a headpiece with feathers.

  - He wears dark trousers and moccasins.

- Pose: The man is seated, with his legs slightly apart. He is looking directly at the camera, creating a sense of directness and connection with the viewer.

- Background: The background is a simple, light backdrop. The man is seated on what appears to be a patterned surface, possibly a rug or mat.

- Additional Elements: The man holds a long pipe and possibly another object in his hands.

Overall, the image is a dignified portrait capturing the essence of a Native American individual.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-02

The image is an old sepia-toned photograph of a Native American individual, likely taken in the late 19th or early 20th century. The person is seated outdoors, with a landscape that includes some hills or mountains in the background.

The individual is wearing traditional Native American attire, which includes:

- A fringed and patterned garment, possibly a shirt or tunic.

- A blanket or shawl draped over the shoulders.

- Leggings or pants that are also fringed.

- Moccasins on the feet.

The person's hair is long and braided, and they are adorned with feathers in their hair, which is a common element in Native American headdresses. The individual is also wearing a necklace, which appears to be made of beads or similar materials.

The photograph is framed within an oval shape, and the edges of the paper show signs of aging, with some discoloration and wear. The overall tone of the image is quite solemn and dignified, reflecting the cultural heritage and traditions of Native American people.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-04

This image is a sepia-toned portrait of a seated individual dressed in traditional attire, likely from an indigenous North American tribe. The person is wearing a headdress adorned with feathers, a patterned shirt, and a long, fringed scarf or wrap draped over the shoulders. The individual is seated in a relaxed posture, with hands resting on the lap or holding an object, possibly a ceremonial item. The background appears to be an ornate, oval-shaped frame, which enhances the historical or cultural significance of the portrait. The overall style suggests it is a vintage photograph, possibly from the 19th or early 20th century, capturing a moment of cultural representation.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-04

This is a sepia-toned photograph of a Native American man sitting in a formal pose. The image is framed within an oval mat. The man is dressed in traditional attire, including a beaded and fringed garment draped over his shoulders and chest. He is wearing dark pants and moccasins. His long hair is parted in the middle and adorned with feathers. The background appears to be a studio setting with a patterned floor. The photograph has an aged appearance, with some discoloration and wear around the edges of the mat.