Machine Generated Data

Face analysis

AWS Rekognition

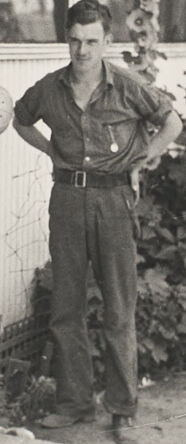

| Attribute | Value |
| --- | --- |
| Age | 26-42 |
| Gender | Male, 92.6% |
| Calm | 86.2% |
| Angry | 6.1% |
| Happy | 3.8% |
| Sad | 1.6% |
| Disgusted | 1.3% |
| Surprised | 0.3% |
| Confused | 0.3% |
| Fear | 0.3% |

Feature analysis

AWS Rekognition

| Label | Confidence |
| --- | --- |
| Person | 99.9% |

Categories

Imagga

Created on 2021-12-15

| Category | Confidence |
| --- | --- |
| paintings art | 63.2% |
| people portraits | 18.2% |
| pets animals | 8.5% |
| streetview architecture | 6.6% |
| nature landscape | 2.7% |

Captions

Microsoft

Created by unknown on 2021-12-15

| Caption | Confidence |
| --- | --- |
| a group of people posing for a photo | 95.6% |
| a man standing in front of a group of people posing for a photo | 90.8% |
| a group of people standing in the snow | 90.7% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-11

photograph of a man standing in front of a house.

Salesforce

Created by general-english-image-caption-blip on 2025-05-22

a photograph of a man in uniform is taking a picture of a man in uniform

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-17

The image shows a group of three men standing near a building with corrugated metal siding and large windows. One man, wearing a denim shirt and pants, has his back to the camera and is holding what appears to be a hard hat. The other two men, one wearing work overalls and the other in a button-up shirt, stand facing him near the entrance to the building. There is vegetation surrounding the structure, including vines and plants visible on the right side. The scene appears casual, suggesting interaction among workers or individuals in a rural or industrial setting.

Created by gpt-4o-2024-08-06 on 2025-06-17

The image depicts a scene with three individuals standing near a building. The person in the foreground is wearing a short-sleeved shirt and jeans and appears to be holding a hat, facing the other two individuals. The individual on the left is wearing a buttoned shirt and pants, standing with one hand resting on their hip. The person on the right is dressed in overalls with rolled-up sleeves and has both hands on their hips. The setting includes plants growing along the side of the building, which is constructed with horizontal panels and features a series of windows on the side facing the viewer. There is a short step leading up to a doorway on the left side of the image. The overall atmosphere suggests a casual outdoor meeting or conversation among the individuals.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-18

The image shows three men standing outside a building. One man is facing the camera and appears to be painting or working on the exterior of the building. The other two men are standing nearby, observing or conversing. The scene appears to be from an earlier time period, based on the clothing and style of the building. The image has a black and white, vintage aesthetic.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-18

This is a black and white photograph that appears to be from an earlier era, possibly the 1930s or 1940s, based on the clothing and setting. Three men are shown outside what appears to be a screened porch or enclosed veranda of a building. They are all wearing work clothes - denim overalls and casual shirts. One man has his back to the camera and appears to be working on or examining a window, while two others are standing and observing. The building has white wooden siding and a row of windows. There are some shrubs or plants visible along the building's foundation. The image has the documentary-style quality typical of Depression-era photography.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-09

The image depicts a black-and-white photograph of three men standing outside a building, with one man holding a bucket and another man leaning against the wall. The man on the left is wearing a denim shirt and jeans, while the man in the middle is wearing a dark-colored shirt and pants. The man on the right is wearing overalls and a light-colored shirt.

The background of the image shows a building with windows and a door, surrounded by plants and trees. The overall atmosphere of the image suggests a casual, everyday scene, possibly from the 1940s or 1950s.

Answer: A black-and-white photograph of three men standing outside a building, with one man holding a bucket and another man leaning against the wall.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-09

The image is a black-and-white photograph of three men standing outside a building. The man on the left is wearing a denim shirt and jeans, with his back to the camera. He appears to be holding a hat in his right hand, which is raised above his head. The man in the middle is wearing a dark-colored shirt and pants, with his hands on his hips. The man on the right is wearing overalls and a light-colored shirt, with his hands on his hips as well.

In the background, there is a white building with windows and a door. There are also some plants and bushes visible behind the men. The overall atmosphere of the image suggests that the men are taking a break or posing for a photo outside the building.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-08

The image is a black-and-white photograph featuring three men standing outside a building. The man on the left is holding a hat and appears to be fixing something on the wall. The man in the middle is standing with his hands on his hips, looking at the man on the left. The man on the right is leaning against the wall with his hands in his pockets. The building has glass windows and a door, and there is a plant with flowers on the left side.

Created by amazon.nova-pro-v1:0 on 2025-06-08

The black-and-white image features three men standing outside a house. The man on the left is standing sideways and seems to be fixing the window. He is wearing a hat in his right hand. The man in the middle is standing with his hands in his pockets. The man on the right is wearing an apron and looking at the man on the left. There are plants and bushes in front of the house.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-16

Here is a description of the image:

This black and white photograph captures a scene of three men outside a building. The man in the foreground has his back to the camera, wearing a denim shirt and jeans. He is holding a hat and gesturing towards a doorway. The man in the middle is standing with his hands on his hips, dressed in a button-down shirt and pants. The man on the right is also standing with his hands on his hips, wearing overalls. The building behind them has several windows. There is shrubbery around the men. The photo is taken in a way that suggests casual candidness.

Created by gemini-2.0-flash on 2025-05-16

Here is a description of the image:

This black and white photograph captures three men standing outside a simple building with a row of windows. The man on the left is facing away from the camera and seems to be gesturing with a hat in his hand, perhaps talking to someone inside the building. He is dressed in a denim shirt and jeans, with a belt cinching his waist.

The man in the middle is standing with his hands on his hips, wearing a collared shirt, matching pants, and a belt. He is looking at the man on the left, possibly engaged in the conversation.

The man on the right is older, wearing overalls and a collared shirt, also with his hands on his hips. He is positioned further to the right and also looking at the man on the left.

Behind the men is a building with a corrugated metal exterior. The windows are covered with screens. A small bush or leafy plant is growing near the building. The overall impression is a casual outdoor setting, possibly depicting farm or rural workers. The lighting suggests it is daytime, and the scene is captured in a straightforward, documentary style.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-01

This black-and-white photograph depicts three men standing outside a building. The building appears to be constructed with a combination of wood and metal, with large windows that allow a view into the interior.

Man on the Left: This man is facing away from the camera, towards the building. He is wearing a long-sleeved shirt and jeans. He appears to be adjusting or hanging something on the exterior wall of the building, possibly a hat.

Man in the Middle: This man is standing with his hands on his hips, facing the camera. He is wearing a short-sleeved shirt and pants with a belt. He has a relaxed stance and is positioned in front of a window.

Man on the Right: This man is also facing the camera. He is wearing a short-sleeved shirt and overalls, with his hands in his pockets. He is standing next to some foliage, possibly shrubs or small trees, which are growing along the side of the building.

The scene suggests a casual, possibly rural setting. The men's attire and the appearance of the building hint at a mid-20th-century time period. The photograph captures a moment of everyday life, with the men engaged in what appears to be a routine or leisurely activity.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-05

The image is a black-and-white photograph showing three men outdoors near a building. One man, positioned on the left, appears to be taking a photograph with a vintage camera, holding his hat in his right hand. The other two men, standing to the right, are dressed in work attire – one in a button-up shirt and suspenders, and the other in a short-sleeved shirt and pants. They both have their hands on their hips, seemingly posing for the photo. The setting appears to be a rural or agricultural area, with some plants and a fence visible in the background. The building has large windows, and the overall atmosphere suggests a casual, everyday moment captured in a historical context.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-05

This black-and-white image shows three men standing in front of a building with horizontal siding and a row of windows. The man on the left is facing away from the camera, holding a hat in his hands, and appears to be in the process of either putting it on or taking it off. The other two men are standing side by side, facing the camera, with their hands resting on their hips. The man on the right is wearing overalls and glasses. There are some plants and a fence visible in the background. The overall scene suggests a casual, possibly rural or working-class setting.