Machine Generated Data

Face analysis

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 7-17 |
| Gender | Female, 96.1% |
| Calm | 85.6% |
| Happy | 10.9% |
| Sad | 1.5% |
| Angry | 0.9% |
| Disgusted | 0.5% |
| Confused | 0.2% |
| Fear | 0.1% |
| Surprised | 0.1% |

Feature analysis

AWS Rekognition

| Label | Confidence |
| --- | --- |
| Person | 99.9% |

Categories

Imagga

Created on 2021-12-15

| Category | Confidence |
| --- | --- |
| people portraits | 97.4% |
| paintings art | 1.8% |

Captions

Microsoft

Created by unknown on 2021-12-15

| Caption | Confidence |
| --- | --- |
| a group of people posing for a photo | 97.4% |
| a group of people posing for a picture | 97.3% |
| a group of people posing for the camera | 97.2% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-14

a family of immigrants poses for a photograph.

Salesforce

Created by general-english-image-caption-blip on 2025-05-22

a photograph of a group of people standing in front of a house

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-13

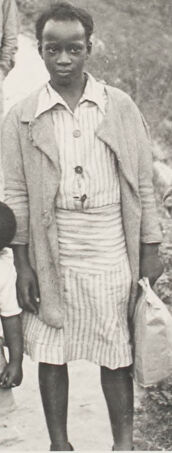

This black and white image depicts a group of six individuals, including adults and children, standing together on a dirt path in a rural area. They are dressed in modest, casual clothing, with some holding items such as bags or books. The background features a row of simple houses on a hillside, with visible porches and stairs, surrounded by grass and vegetation. The scene conveys a glimpse of life in a small, rural community during the mid-20th century.

Created by gpt-4o-2024-08-06 on 2025-06-13

The image depicts a group of people standing on a dirt path in what appears to be a rural setting. Behind them, there are several small houses on a hillside. The individuals in the photo are dressed casually, with some holding items such as bags or books. The landscape is grassy and the houses have simple architectural designs typical of rural or small-town settings. The path they are standing on curves around the houses, suggesting it is a well-traveled route within this community. The overall tone of the image suggests it might be historical, potentially from the mid-20th century.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-18

The image shows a group of children standing in a rural setting. There are five children in the photograph - three girls and two boys. They appear to be of African descent and are dressed in simple, casual clothing. The background includes some buildings and a grassy field. The image has a black and white, vintage aesthetic, suggesting it was taken some time in the past.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-18

This appears to be a vintage black and white photograph, likely from the mid-20th century. It shows a group of children standing on a dirt path or road, with several white houses visible on a hillside in the background. The children are dressed in casual clothing typical of the era - skirts, shorts, and button-up shirts. The setting appears to be a residential area, possibly a mining town or hillside community. The composition captures what seems to be an everyday moment in what was likely a working-class neighborhood of that time period.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-09

The image depicts a black-and-white photograph of a group of children standing on a dirt road in front of houses.

In the foreground, six children are visible, with the youngest child, a boy, holding hands with the girl to his left. The girl to his right is holding a bag in her left hand. The boy to her right is holding a bag in his right hand. The girl to his left is wearing a dress and holding a bag in her right hand. The boy in the back is wearing a long-sleeved shirt and pants.

In the middle ground, the dirt road stretches out behind the children, with a few houses visible in the background. The houses appear to be small and simple, with porches and windows.

In the background, the sky is not visible, but the atmosphere suggests a rural or suburban setting, possibly during the early 20th century. The overall mood of the image is one of innocence and simplicity, capturing a moment in time of childhood and community.

The image appears to be a historical photograph, possibly taken by a professional photographer or as part of a family album. The quality of the image is good, with clear details and a well-composed scene.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-09

The image is a black-and-white photograph of six children standing on a dirt road in front of a row of houses. The children are dressed in casual clothing, with the girls wearing dresses and the boys wearing shirts and pants or overalls. They appear to be from a low-income community, possibly during the Great Depression era.

In the background, there are several houses with porches, suggesting a rural or small-town setting. The overall atmosphere of the image is one of simplicity and hardship, with the children's clothing and the condition of the houses indicating a lack of resources. However, the children's faces suggest a sense of resilience and determination, despite the challenges they may be facing.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-08

A black-and-white photo shows a group of children standing on a dirt road. They are all dressed in simple, old-fashioned clothing. The children are standing in a line, with a small boy in the middle. The boy is holding a bag. Behind them, there is a house with a porch and a fence. The house has a slanted roof and is surrounded by grass and plants.

Created by amazon.nova-pro-v1:0 on 2025-06-08

The black-and-white photo shows a group of children standing on a pathway. They are all wearing different outfits. A boy is standing behind them, and the child in the middle is holding a baby. Some of them are holding a bag in their hands. Behind them, there is a grass field with some plants. In the distance, there are houses with windows.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-16

Here's a description of the image:

The photograph captures a group of six African-American children posing on a dirt road. The image is in black and white, which contributes to a sense of nostalgia and historical context. They appear to be in a rural or small-town setting, with simple houses lining the background on a slight hill.

Here's a breakdown of the composition and details:

- Subjects: There are four girls and two boys. They are all neatly dressed in simple clothes, which may suggest a time when resources were more limited. Some of the children are carrying paper bags, possibly containing their lunches or other belongings.

- Setting: The scene takes place outdoors. The background features several houses, suggesting a residential area. The presence of the dirt road and the grassy hill hints at a less developed environment.

- Overall Impression: The photograph evokes a sense of community, simplicity, and perhaps the daily lives of children in a specific historical period. It might capture a moment in time from the mid-20th century, given the style of clothing and the visual aesthetic.

Created by gemini-2.0-flash on 2025-06-16

Certainly! Here's a description of the image:

General Impression:

The image is a black and white photograph of six children standing on a dirt road in what appears to be a rural or lower-income neighborhood. The composition is somewhat formal, with the children arranged in a row and looking towards the camera. The setting includes houses on a hill in the background.

Details:

- Children: There are six children in the photo. Two girls on the left and a boy at the center are taller. A little boy stands in the middle front, next to the boy at the center. Two boys are on the right side.

- Clothing: The children are dressed in everyday clothing that is likely indicative of the time period. Some are wearing dresses, shirts and pants, and overalls. Some carry bags or items.

- Setting: The background includes modest houses on a hill. The road appears to be unpaved.

- Mood: The expressions on the children's faces are neutral to slightly serious, giving the image a somber yet dignified tone.

I hope this description is helpful! Let me know if you have any other requests.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-01

The image is a black-and-white photograph depicting a group of six children standing outdoors on a dirt road. The setting appears to be a rural or small-town area, with several modest houses in the background. The children are dressed in simple, modest clothing typical of an earlier era.

From left to right, the children are:

- A young girl wearing a checkered dress and holding a small bag or object.

- A slightly older girl in a light-colored blouse and dark skirt, with her hands clasped in front of her.

- A small child, possibly a toddler, in light-colored clothing, standing in front of the third child.

- A woman or older girl wearing a striped dress and holding the hand of the small child.

- A young boy in overalls and a light-colored shirt, standing slightly behind the others.

- Another young boy in the background, standing on the dirt road, wearing a collared shirt and pants.

The background shows a row of houses along the dirt road, with some greenery and hills visible further back. The overall atmosphere suggests a simple, possibly impoverished, community setting from a bygone era.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-10

This black-and-white photograph depicts a group of children standing on a dirt road in what appears to be a rural setting. The children are dressed in casual attire, with some holding small bags or items in their hands. The background shows a row of modest houses with porches, situated on a hillside, suggesting a community living in close proximity. The overall atmosphere of the image conveys a sense of simplicity and rustic living. The children's expressions are neutral, and the photo captures a candid moment in time.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-10

This black-and-white photograph shows a group of six children standing on a dirt path in a modest neighborhood. The children are dressed in a mix of formal and casual clothing, with some wearing collared shirts and dresses, while others are in overalls and simpler attire. They are holding bags, possibly containing food or other items. In the background, there are several simple, single-story houses with porches, situated on a hillside. The overall scene suggests a rural or small-town setting, likely from an earlier time period, possibly the mid-20th century. The children appear to be looking directly at the camera, and their expressions range from neutral to slightly smiling.