Machine Generated Data

Face analysis

AWS Rekognition

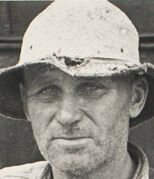

| Attribute | Estimate |
|---|---|
| Age | 39-57 years |
| Gender | Male, 99.8% |
| Calm | 99.7% |
| Sad | 0.1% |
| Angry | 0.1% |
| Surprised | 0% |
| Happy | 0% |
| Confused | 0% |
| Disgusted | 0% |
| Fear | 0% |

Feature analysis

AWS Rekognition

| Feature | Confidence |
|---|---|
| Hat | 99.7% |

Categories

Imagga

Created on 2021-12-15

| Category | Confidence |
|---|---|
| paintings art | 98.6% |

Captions

Microsoft

Created by unknown on 2021-12-15

| Caption | Confidence |
|---|---|
| a man posing for a photo | 95.8% |
| a person standing posing for the camera | 95.7% |
| a person posing for the camera | 95.6% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-13

person, a farmer, with his tractor.

Salesforce

Created by general-english-image-caption-blip on 2025-05-02

a photograph of a man in overalls and a hat on a farm

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-12

The image shows a person standing outdoors, wearing overalls and a long-sleeve shirt. A wide-brimmed hat is visible, and the person is positioned in front of what appears to be farm machinery, with large wheels and belts characteristic of agricultural equipment. The background features a grassy field and trees, suggesting a rural setting. The overall feel of the photo appears to document work or farm life.

Created by gpt-4o-2024-08-06 on 2025-06-12

The image is a black and white photograph depicting a person wearing a brimmed hat and work attire, specifically overalls with a long-sleeve shirt underneath. The individual is standing outdoors in a field with some farm equipment that appears to be vintage or historical, partly visible behind them. The background shows a landscape with fields and trees, suggesting a rural setting. The person has a relaxed posture with one hand resting near their waist and the other by their side. The overall atmosphere conveys an impression of agricultural work.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-18

The image shows a middle-aged man wearing a white hat, overalls, and a work shirt, standing in front of a wooden structure and farm equipment. The man has a serious expression on his face and appears to be a farmer or agricultural worker. The background shows a rural, pastoral scene with trees and fields in the distance.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-18

This is a black and white photograph that appears to be from the early-to-mid 20th century. It shows a farmer or agricultural worker standing next to what appears to be farm equipment or machinery. The person is wearing typical farming attire of that era - denim overalls, a light-colored long-sleeve shirt, and a wide-brimmed hat that shows signs of wear. The setting appears to be rural, with fields visible in the background. The photograph has a documentary-style quality typical of Depression-era photography. Behind the subject, you can see parts of what might be a tractor or other farming implement with visible wheel components.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-05

The image depicts a black-and-white photograph of a man standing in front of a piece of farm equipment. The man is dressed in a long-sleeved shirt, overalls, and a hat, with his hands resting on his hips. He appears to be engaged in some form of agricultural work.

In the background, there is a field with trees visible in the distance. The overall atmosphere of the image suggests that it was taken during the daytime, likely in a rural setting. The man's attire and the presence of farm equipment indicate that he is involved in farming or agricultural activities.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-05

The image is a black-and-white photograph of a man standing in front of a piece of farm equipment. The man is wearing a light-colored hat, a long-sleeved shirt, and overalls. He has his hands in his pockets and appears to be looking at the camera with a serious expression.

The background of the image shows a field with trees in the distance. The overall atmosphere of the image suggests that it was taken during the Great Depression, as the man's clothing and the state of the equipment suggest a time of economic hardship.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-04

The image is a black-and-white photograph of a man standing in a field. He is wearing a hat and overalls. He is standing next to a large wheel, possibly from a tractor or other farm equipment. His hands are in his pockets, and he is looking to the side. Behind him is a fence and a large field. The image has a vintage feel, and the man's clothing and equipment suggest that it was taken in the past.

Created by amazon.nova-pro-v1:0 on 2025-06-04

The image is in black and white. It depicts a man standing in front of a large machine, possibly a vintage farming equipment. The man is wearing a hat, a long-sleeved shirt, and denim overalls. He is holding a tool in his hands. The man has a serious expression on his face. Behind him, there is a field with tall grass and trees. The sky is clear, and the sun is shining brightly.

Google Gemini

Created by gemini-2.0-flash on 2025-05-07

The black and white photo depicts a farmer standing in front of some kind of farm machinery.

The farmer is a middle-aged man with a weathered face and a serious expression. He is wearing a straw hat, overalls, and a long-sleeved shirt. His hands are visible, one in his pocket and the other holding a small object.

The farm machinery behind him appears to be a large, industrial piece of equipment, possibly a tractor or a steam engine. It has large wheels and various mechanical components.

The background consists of a field and some trees. The overall mood of the photo is one of hard work, resilience, and a connection to the land.

Created by gemini-2.0-flash-lite on 2025-05-07

The image is a black and white photograph of a man standing in front of a piece of farm machinery. The man is dressed in overalls, a long-sleeved shirt, and a straw hat. His expression is serious, and he is looking directly at the camera. The background shows a field and some trees. The photograph appears to be taken in the early to mid-20th century. The image captures the essence of the American rural working class.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-01

The image is a black and white photograph of a man standing next to a piece of farm equipment. The man is wearing overalls and a light-colored shirt, with a brimmed hat on his head. He has a serious expression on his face and is leaning against the equipment with his hands resting on it. The background shows an open field with some trees in the distance, suggesting a rural setting. The farm equipment appears to be an older model, possibly a tractor or some type of agricultural machinery. The overall scene conveys a sense of hard work and the agricultural lifestyle.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-10

This is a black-and-white photograph featuring a man standing in a rural setting, likely on a farm. He is wearing a white hat, a long-sleeved shirt, and overalls, which are typical garments for manual labor. The man appears to be leaning against a piece of farm machinery, which includes large wheels and metal components, possibly part of a tractor or a similar agricultural device. The background shows a field with some fencing and a blurred landscape, suggesting a calm, countryside environment. The overall tone of the image gives a sense of the hard work and lifestyle associated with farming in the past.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-10

This is a black-and-white photograph of a man standing in an outdoor setting, likely a farm. He is wearing a wide-brimmed hat, a long-sleeved shirt, and overalls. The man has a serious expression on his face and is positioned in front of an old-fashioned farm machine with large wheels. In the background, there is a wooden fence and an open field with some trees and hills in the distance. The overall scene suggests a rural, agricultural environment.