Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 20-28 |
| Gender | Male, 99.9% |
| Calm | 99.9% |
| Angry | 0% |
| Confused | 0% |
| Surprised | 0% |
| Sad | 0% |
| Disgusted | 0% |
| Fear | 0% |
| Happy | 0% |

Feature analysis

Amazon

AWS Rekognition

| Label | Confidence |
| --- | --- |
| Person | 99.7% |

Categories

Imagga

Created on 2022-01-08

| Category | Confidence |
| --- | --- |
| cars vehicles | 49.6% |
| paintings art | 44.6% |
| interior objects | 2.1% |
| streetview architecture | 1.1% |

Captions

Microsoft

Created by unknown on 2022-01-08

| Caption | Confidence |
| --- | --- |
| a group of people riding on the back of a truck | 81.2% |
| a group of people in an old photo of a truck | 81.1% |
| a vintage photo of a group of people posing for the camera | 81% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-12

a group of men and women in a car.

Salesforce

Created by general-english-image-caption-blip on 2025-05-04

a photograph of a group of people standing around a car

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-09

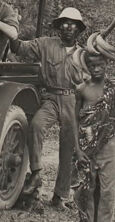

The image depicts a group of individuals gathered around an early 20th-century automobile in a forested area. The vintage vehicle has an open roof and appears to be stationary on a dirt road or trail surrounded by dense vegetation, including tall trees and tropical plants. Some of the individuals are seated or leaning against the car, while others stand nearby, with one person holding a large bundle, possibly bananas. The setting suggests an exploration or travel scene in a rural or jungle environment. The winding path in the background adds depth to the composition.

Created by gpt-4o-2024-08-06 on 2025-06-09

The image depicts a black and white photograph of a group of people in a jungle or forest setting, standing around an early 20th-century automobile. The car appears to be an open-top vehicle typical of that era. The scene is set on an unpaved, narrow dirt road that winds through a dense area filled with tall trees and foliage. Some individuals are sitting on the car, while others stand nearby. The environment suggests a remote or rural location, and the individuals' attire appears to be from a historical period, likely the early 1900s. The composition of the image, including the vehicle and the road, conveys a sense of exploration or travel in a natural setting.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-15

The image shows a group of people posing next to an old automobile in a forested, rural setting. The vehicle appears to be an early 20th century model, with a distinctive body style and large wheels. The people in the image are dressed in clothing typical of that era, with the men wearing suits and hats, and the women wearing dresses. They seem to be enjoying an outing or excursion in the woods, with the lush vegetation and dirt path visible in the background. The overall scene has a vintage, historical feel to it.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-15

This is a black and white historical photograph showing a group of people gathered around what appears to be a Model T Ford on a dirt road or path in a wooded area. The setting looks to be from the early 1900s, with tall trees visible in the background. Some of the individuals are wearing work clothes while others are dressed in white shirts. The vintage automobile appears to be parked on what looks like a rural or forest road. The image has the grainy, slightly faded quality typical of photographs from this era.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-09

The image is a black-and-white photograph of a group of people posing around an old car in a wooded area. The car, positioned centrally, has a convertible top and appears to be from the early 20th century. It is parked on a dirt road or path, surrounded by trees and bushes.

There are eight individuals in the image, all dressed in casual attire suitable for outdoor activities during that era. Some are seated on the car, while others stand beside it. The atmosphere suggests a leisurely outing or excursion, possibly a picnic or a day trip into the countryside.

The overall setting and the style of clothing worn by the individuals indicate that the photograph was taken sometime in the early 20th century, likely in a rural or natural environment. The image captures a moment of relaxation and camaraderie among friends or family members enjoying time together in the great outdoors.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-09

The image is a black-and-white photograph of a group of people posing with a car in a wooded area.

The car is an open-top vehicle, likely from the early 20th century, with a large front seat and a smaller rear seat. The front seat has a canopy covering it, while the rear seat does not. There are six people in the image: two men sitting on the front seat, one man sitting on the back of the car, one woman standing beside the car, and two men standing to the right of the car. The people are dressed in casual clothing, with some wearing hats and others not.

The background of the image shows a wooded area with trees and bushes. The ground is covered in dirt and grass, and there is a dirt path leading into the woods behind the car. The overall atmosphere of the image suggests a leisurely outing or a picnic in a scenic location.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-05

The image is a monochromatic photograph that depicts a group of people posing for a picture in a forested area. The photograph is old, evident from the grainy texture and faded tones. The people in the image are dressed in early 20th-century attire, suggesting the photograph was taken sometime in the early 1900s. The group consists of six men, with one seated on the front fender of an old car and the others standing around the vehicle.

The car is a vintage model, possibly from the 1920s or 1930s, with a distinctive design and large tires. The car is parked on a dirt road that cuts through the forest, and the surrounding trees and foliage are lush and green. The people in the photograph appear to be smiling and in good spirits, suggesting that they are on a leisurely outing or a trip.

The image captures a moment in time, a glimpse into the past, and offers a glimpse into the lives of people who lived in a different era. The photograph is a valuable historical artifact, providing insight into the social and cultural norms of the time.

Created by amazon.nova-pro-v1:0 on 2025-06-05

The black-and-white photo shows a group of people standing and sitting on a vintage car in the middle of a forest. The car has an open roof. The man on the right is wearing a hat, while the man on the left is wearing a bow tie. The man standing on the right is wearing a tie. The man standing on the left is wearing a hat and shoes.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-02

Here is a description of the image:

This is a vintage black and white photograph showing a group of men posed with a car in a forested area. The car appears to be an early model automobile with an open-air design and a canvas top. Several men are sitting or standing around the car, and there are also several other people of different ethnicities standing next to it. The background is a dense forest with tall trees and a dirt road winding through it. The photograph is likely from the early 20th century, and the style and clothing of the people suggest a colonial or exploration context. The overall tone of the image evokes a sense of adventure, exploration, and perhaps the social dynamics of the time.

Created by gemini-2.0-flash on 2025-05-02

This vintage, black-and-white photograph presents a group of people with a vintage car in a tropical or forested environment.

On the left, a man is sitting on the front bumper of the car. He's wearing a light-colored, short-sleeved shirt and dark trousers, with his socks and boots visible. To his right, another man is casually leaning against the car's front tire. He is wearing a light shirt, dark trousers, and a bowtie.

The car, a vintage automobile with an open top, has several other people inside or around it. One man is seated in the driver's seat, while another is standing beside the car, leaning on the door. Two people of color stand to the right of the car, seemingly indigenous to the area. One is holding what looks like a spear or a long stick.

Further to the right, a man in a safari hat stands with his arms crossed, dressed in a light shirt and khaki pants. The background is a dense forest with tall trees, suggesting a tropical location. A dirt road winds its way into the forest behind the group, indicating the path they may have traveled or intend to follow.

The photograph captures a scene that may depict an expedition or adventure in a foreign land, with the car serving as the main mode of transportation. The overall composition suggests a sense of exploration and adventure.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-01

The image is a vintage black-and-white photograph depicting a group of people posing with an early 20th-century automobile on a dirt road in a forested area. The car appears to be an open-top model, possibly from the 1910s or 1920s.

Here are the details:

Setting: The scene is set in a lush, forested environment with tall trees and dense vegetation surrounding the dirt road. The road appears to be somewhat rugged and uneven.

People:

- There are seven individuals in the photograph.

- Four men are sitting in or on the car. One man is sitting on the running board on the left side, one is seated in the driver's seat, and two are seated in the back.

- One man is standing to the left of the car, leaning against it.

- Two individuals, possibly local to the area based on their attire, are standing to the right of the car. One is holding a rifle or a long object.

Attire:

- The men in and around the car are dressed in early 20th-century Western clothing, including shirts, trousers, and hats.

- The two individuals standing to the right are dressed in traditional attire, which may indicate they are from a local indigenous community.

Car:

- The automobile is an early model, likely from the 1910s or 1920s. It has an open-top design with a basic, utilitarian appearance.

- The car is parked on a dirt road, indicating it might be on an expedition or journey through a rural or wilderness area.

Context:

- The photograph suggests a sense of adventure or exploration, possibly indicating a journey or expedition into a remote or less-developed area.

- The presence of the rifle and the traditional attire of the two individuals on the right hint at a possible interaction between Western explorers or travelers and local inhabitants.

Overall, the image captures a moment in time, reflecting the early days of automobile travel and exploration in a natural, forested setting.

Qwen

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-01

This is a black-and-white photograph that appears to have been taken in a forested or jungle-like environment. The image shows a group of men gathered around an old-fashioned automobile, which looks to be from the early 20th century. The car has a soft top and a spare tire mounted on the back.

The men are dressed in a mix of attire. Some are wearing suits and ties, while others are in more casual clothing, including one man wearing a pith helmet, which suggests a colonial or expeditionary context. The setting is rugged, with a dirt road and dense vegetation surrounding the group. The overall composition suggests a moment of pause during a journey or expedition.

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-01

This image is a black-and-white photograph depicting a group of people gathered around an old-fashioned vehicle, likely a Model T Ford, parked on a dirt road amidst a dense forest setting. The car has an open-top design with a canvas roof. The individuals appear to be a mix of Europeans and local individuals, possibly from the early 20th century.

- The Europeans are dressed in formal attire, with some wearing bow ties, suggesting a formal or staged event. Their clothing styles and the car indicate a period from the early 1900s.

- One European is seated on the hood of the car, while others are seated inside and on the ground around the vehicle.

- The local individuals are standing to the side, dressed in what might be traditional or work attire, possibly indicating their roles as workers or guides.

- The background is a thick forest with tall trees and undergrowth, suggesting the location could be a remote or rural area. The road appears unpaved and narrow, further emphasizing the remote setting.

Overall, the image captures a moment of interaction between Europeans and locals in a forested, possibly colonial context.