Machine Generated Data

Tags

Amazon

created on 2022-01-08

Clarifai

created on 2023-10-25

| people | 99.6 |
| street | 97.3 |
| home | 94.9 |
| adult | 94.5 |
| group | 93.5 |
| two | 92.9 |
| no person | 92.5 |
| town | 92.3 |
| architecture | 92 |
| man | 92 |
| vehicle | 91.9 |
| group together | 90.6 |
| carriage | 89.6 |
| building | 88.8 |
| three | 86.2 |
| transportation system | 85.6 |
| one | 84.1 |
| retro | 81.8 |
| administration | 80.9 |
| vintage | 80 |

Imagga

created on 2022-01-08

| architecture | 70.2 |
| balcony | 64.2 |
| building | 56.2 |
| facade | 46.5 |
| city | 40.8 |
| structure | 40 |
| old | 36.3 |
| town | 33.5 |
| window | 33.4 |
| house | 31.4 |
| urban | 27.1 |
| street | 25.8 |
| brick | 24.5 |
| travel | 23.3 |
| home | 23.2 |
| stone | 22.8 |
| ancient | 20.8 |
| tourism | 20.7 |
| exterior | 19.4 |
| historic | 18.4 |
| wall | 18.1 |
| roof | 17.2 |
| tourist | 16.3 |
| history | 16.1 |
| historical | 16 |
| windows | 15.4 |
| door | 15.2 |
| sky | 14.7 |
| residential | 14.4 |
| public house | 14.4 |
| buildings | 14.2 |
| houses | 13.6 |
| architectural | 13.5 |
| arch | 12.6 |
| medieval | 12.5 |
| village | 11.6 |
| residence | 11.3 |
| center | 11.3 |
| construction | 11.1 |
| property | 10.7 |
| apartment | 10.6 |
| estate | 10.5 |
| shop | 10.3 |
| barbershop | 10 |
| road | 10 |
| palace | 9.9 |
| prison | 9.9 |
| cityscape | 9.5 |
| dwelling | 9.1 |
| landmark | 9 |
| vacation | 9 |
| antique | 8.7 |
| lamp | 8.6 |
| glass | 8.6 |
| culture | 8.6 |
| real | 8.6 |
| destination | 8.4 |
| traditional | 8.3 |
| housing | 8.3 |
| new | 8.1 |
| alley | 8 |
| holiday | 7.9 |
| shutter | 7.9 |
| scene | 7.8 |
| modern | 7.7 |
| downtown | 7.7 |
| living | 7.6 |
| monument | 7.5 |
| place | 7.5 |
| warehouse | 7.4 |
| square | 7.3 |
| tower | 7.2 |
| wooden | 7 |

Google

created on 2022-01-08

| Wheel | 95.6 |
| Window | 93.5 |
| Tire | 92.6 |
| Building | 90.8 |
| Vehicle | 88.4 |
| Motor vehicle | 82.5 |
| Door | 76.1 |
| Facade | 75.4 |
| History | 68.1 |
| Street | 65.6 |
| Classic | 60.9 |
| Monochrome | 59.8 |
| Classic car | 56.4 |
| Antique car | 55.5 |
| Monochrome photography | 54.4 |
| Family car | 53.2 |
| House | 52.8 |
| Sash window | 52.7 |
| Vintage clothing | 50.5 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Age | 31-41 |
| Gender | Male, 99.8% |
| Disgusted | 34.7% |
| Angry | 31% |
| Calm | 12% |
| Sad | 9.9% |
| Confused | 4.8% |
| Fear | 4.2% |
| Surprised | 2.2% |
| Happy | 1.2% |

AWS Rekognition

| Age | 20-28 |
| Gender | Male, 99.4% |
| Calm | 97.9% |
| Sad | 1% |
| Disgusted | 0.8% |
| Angry | 0.1% |
| Confused | 0.1% |
| Surprised | 0% |
| Happy | 0% |
| Fear | 0% |

AWS Rekognition

| Age | 23-31 |
| Gender | Male, 99.8% |
| Disgusted | 63.5% |
| Sad | 16% |
| Happy | 7% |
| Calm | 4.9% |
| Fear | 2.9% |
| Surprised | 2.3% |
| Confused | 1.8% |
| Angry | 1.6% |

AWS Rekognition

| Age | 37-45 |
| Gender | Male, 99.9% |
| Calm | 96.6% |
| Confused | 1.1% |
| Disgusted | 0.9% |
| Sad | 0.4% |
| Surprised | 0.3% |
| Angry | 0.3% |
| Fear | 0.2% |
| Happy | 0.2% |

AWS Rekognition

| Age | 24-34 |
| Gender | Female, 65.2% |
| Calm | 71.6% |
| Sad | 11.3% |
| Confused | 3.8% |
| Fear | 3.6% |
| Angry | 3.1% |
| Disgusted | 2.5% |
| Surprised | 2.1% |
| Happy | 2% |

AWS Rekognition

| Age | 27-37 |
| Gender | Male, 99.5% |
| Happy | 97.6% |
| Calm | 1.1% |
| Fear | 0.4% |
| Disgusted | 0.3% |
| Sad | 0.3% |
| Confused | 0.2% |
| Surprised | 0.1% |
| Angry | 0.1% |

AWS Rekognition

| Age | 47-53 |
| Gender | Male, 99.5% |
| Calm | 91.8% |
| Confused | 2.9% |
| Sad | 2.4% |
| Disgusted | 1.1% |
| Surprised | 0.9% |
| Angry | 0.6% |
| Fear | 0.2% |
| Happy | 0.2% |

Google Vision

| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very likely |

Feature analysis

Categories

Imagga

| streetview architecture | 100% |

Captions

Microsoft

created on 2022-01-08

| a vintage photo of an old building | 94.8% |
| a vintage photo of a building | 93.7% |
| a vintage photo of an old building in the background | 93.5% |

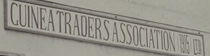

Text analysis

Amazon

ASSOCIATION

TRADERS

CUINEA

CUINEA TRADERS ASSOCIATION 195 IT

195

DE

IT

CUINEATRADERS ASSOCIATION B

CUINEATRADERS

ASSOCIATION

B