Machine Generated Data

Face analysis

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 2-8 years |
| Gender | Female, 80.7% |
| Calm | 85.8% |
| Sad | 13.7% |
| Angry | 0.2% |
| Confused | 0.1% |
| Happy | 0.1% |
| Fear | 0.1% |
| Disgusted | 0.1% |
| Surprised | 0.1% |

Feature analysis

AWS Rekognition

| Feature | Confidence |
|---|---|
| Person | 99% |

Categories

Imagga

Created on 2021-12-15

| Category | Confidence |
|---|---|
| paintings art | 79.7% |
| nature landscape | 11.6% |
| pets animals | 4.3% |
| people portraits | 2.4% |
| streetview architecture | 1.2% |

Captions

Microsoft

Created by unknown on 2021-12-15

| Caption | Confidence |
|---|---|
| a little girl posing for a photo | 71% |
| a little girl posing for a picture | 70.9% |
| a child posing for a photo | 66.3% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-15

a family photo of people.

Salesforce

Created by general-english-image-caption-blip on 2025-05-03

a photograph of a group of children standing next to each other

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-08

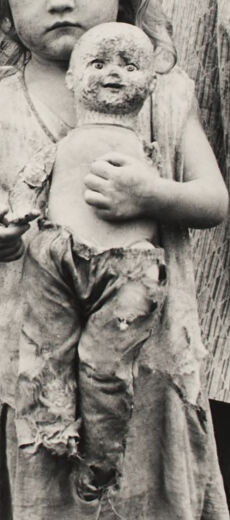

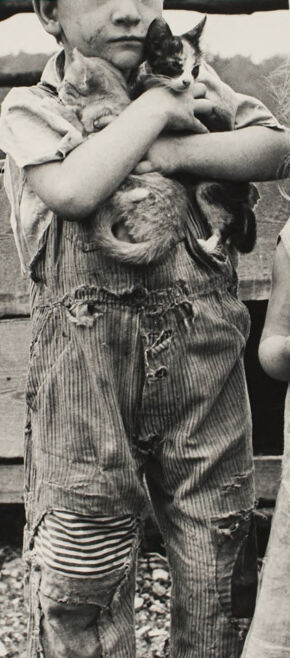

The image depicts two children standing outdoors near a wooden structure. Their clothing is heavily worn and tattered, reflecting signs of poverty or hardship. One child is holding a small animal, likely a cat, while the other clutches an object in their hands. The setting includes rustic, natural elements such as a wooden fence or building and rough ground with scattered debris.

Created by gpt-4o-2024-08-06 on 2025-06-08

The image portrays two children standing side by side, dressed in tattered and worn clothing. The child on the left is holding a kitten close to their chest, while the child on the right holds a piece of bread. The background features wooden logs and planks, suggesting a rustic or rural setting. The overall tone is black and white, giving a historical or vintage feel to the photograph.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-13

The image shows a man holding a cat, with a young girl standing next to him. The man and girl appear to be in a rural or outdoor setting, with a wooden structure or fence visible in the background. The girl is holding a doll or stuffed animal. The image has a black and white, vintage aesthetic.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-13

This is a black and white historical photograph that appears to be from the early-to-mid 20th century. It shows two children in tattered, worn clothing standing outside what appears to be a rustic wooden building. One child is wearing striped overalls with patches and holes, holding a cat. The other child is wearing a dress and holding what appears to be a doll or toy. The image seems to capture rural poverty during what might be the Great Depression era, given the children's worn clothing and the rustic setting. The photograph has a documentary-style quality typical of social documentary photography from that period.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-05-25

The image is a black-and-white photograph of two children standing in front of a wooden structure, with the boy holding a cat and the girl holding a doll. The boy is wearing tattered overalls, while the girl is dressed in a torn dress. The background features a wooden structure, possibly a barn or shed, with a pile of logs or firewood to the left. The overall atmosphere of the image suggests a rural or impoverished setting, with the children's worn clothing and the rustic surroundings evoking a sense of hardship and simplicity.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-05-25

The image is a black-and-white photograph of two children standing in front of a wooden structure, likely a barn or shed. The boy on the left is wearing tattered overalls and holding a cat in his arms. He has a serious expression on his face. The girl on the right is wearing a tattered dress and holding a doll in her arms. She also has a serious expression on her face.

In the background, there is a wooden structure with a door that appears to be made of wood planks. The ground is covered in dirt and rocks. The overall atmosphere of the image suggests that the children are living in a rural or impoverished area, possibly during a time of economic hardship. The photograph may have been taken during the Great Depression, given the clothing and the setting.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-05-25

The image is a black-and-white photograph of two children standing in front of a wooden structure, possibly a barn or a shed. The boy is holding a cat in his arms, while the girl is holding a doll with a sad expression. Both children are wearing worn-out clothes, and the girl's dress is torn and dirty. The boy's shirt is torn and dirty as well, and he has a serious expression on his face. Behind them is a wooden structure with a wheel and a wooden post.

Created by amazon.nova-pro-v1:0 on 2025-05-25

A black-and-white photo of two children standing next to each other, one boy and one girl, in front of a wooden structure. The boy is holding a cat, while the girl is holding a doll. Both the boy and the girl are wearing clothes that have holes. Behind them is a wooden structure that looks like a water wheel. On the left side of the structure, there is a tree stump.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-04-29

Here's a description of the image:

This black and white photograph depicts two children, likely from a time of hardship or poverty.

- The Boy: The boy is standing on the left, holding two kittens. He wears worn overalls, with a striped section on one leg and several patches and tears visible. His face appears serious.

- The Girl: The girl stands to the right. She also wears worn clothing and has a dirty face. She holds a doll, which is also in a dilapidated state, with missing limbs or tattered clothes.

- The Background: The setting appears to be outdoors, with a wooden structure or possibly a fence visible in the background. The ground is rocky and sparse.

The image has a strong emotional impact, conveying a sense of hardship, simplicity, and resilience. The children's clothing, the doll, and the overall environment suggest a difficult economic situation. It’s a powerful image that likely captures a moment in history.

Created by gemini-2.0-flash on 2025-04-29

The image is a black and white photograph of two children, a boy and a girl. The boy is on the left and is holding two kittens. He is wearing overalls that are torn and patched. The girl is on the right and is holding a doll. The doll is also torn and dirty. The children are standing in front of a wooden structure. The image has a gritty, documentary feel to it.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-05-27

This black-and-white photograph depicts two young children standing outdoors near a wooden structure that appears to be a fence or a wall. The children are dressed in worn and tattered clothing, suggesting a setting of poverty or hardship.

The child on the left, who appears to be a boy, is holding a small kitten in one arm and a puppy in the other. The child on the right, who appears to be a girl, is holding a doll that looks quite worn and dirty. Both children have serious expressions on their faces, reflecting a somber mood.

The background shows a rustic environment with a wooden fence or wall made of logs and some scattered debris on the ground, indicating a rural or impoverished setting. The overall tone of the image conveys a sense of hardship and struggle, possibly during a historical period of economic difficulty such as the Great Depression.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-05

This black-and-white photograph captures two children standing in front of a rustic wooden structure, possibly a shed or a cabin. The child on the left is wearing overalls with torn patches and is holding a small, fluffy kitten in their arms, appearing to look off into the distance. The child on the right, dressed in a tattered dress, holds a broken doll with one arm while looking directly at the camera. The background features a log wall and some foliage, contributing to the rural and somewhat impoverished setting of the scene. The overall mood of the photograph conveys a sense of innocence and hardship.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-05

This is a black-and-white photograph showing two young children standing outdoors near a wooden structure that appears to be part of a rustic building or fence. The child on the left is wearing torn overalls and is holding a small kitten. The child on the right is dressed in a torn and dirty dress and is holding a worn-out doll. Both children have serious expressions on their faces. The background includes a wooden fence and some natural elements, suggesting a rural or farm-like setting. The overall mood of the photograph is one of simplicity and perhaps hardship.