Machine Generated Data

Face analysis

AWS Rekognition

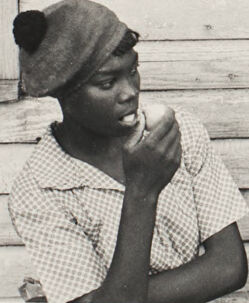

| Attribute | Value |
| --- | --- |
| Age | 5-15 |
| Gender | Female, 74.7% |
| Sad | 59.7% |
| Angry | 39% |
| Confused | 0.6% |
| Calm | 0.4% |
| Fear | 0.1% |
| Disgusted | 0.1% |
| Surprised | 0% |
| Happy | 0% |

Feature analysis

AWS Rekognition

| Label | Confidence |
| --- | --- |
| Person | 99.7% |

Categories

Imagga

Created on 2021-12-15

| Category | Confidence |
| --- | --- |
| paintings art | 97.7% |
| text visuals | 1.7% |

Captions

Microsoft

Created by unknown on 2021-12-15

| Caption | Confidence |
| --- | --- |
| a person sitting on a bench | 87.1% |
| a man and a woman sitting on a bench | 74.7% |
| a man and woman sitting on a bench | 69.7% |

Salesforce

Created by general-english-image-caption-blip on 2025-05-16

a photograph of a couple of people sitting on a bench

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-15

The image depicts two individuals standing in front of a wooden wall with horizontal planks. To the left, part of a window is visible, with glass showing smudged or painted markings. The person on the left is wearing a patterned shirt and a hat, while the person on the right is dressed in layered clothing, including a jacket or textured cardigan. Both individuals have their arms crossed or positioned in thoughtful stances. The composition conveys a casual and candid moment against an aged, textured background.

Created by gpt-4o-2024-08-06 on 2025-06-15

The image is a black and white photograph featuring two individuals standing in front of a wooden wall. The individuals are positioned casually, with one person leaning against the wall with their arms crossed and the other standing with a hand raised to the chin. They are dressed in mid-20th-century attire, with one wearing a patterned dress and a hat, and the other wearing a patterned jacket over a white shirt. To the left, a window with a wooden frame is visible, adding to the rustic appearance of the setting. The wooden siding in the background suggests a modest, perhaps rural, environment.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-18

The image shows two young African American women standing in front of a wooden building. They appear to be in a rural or small-town setting, with the wooden walls and window visible behind them. The women have serious expressions on their faces, and one has her arms crossed while the other has her hand on her chin, suggesting they may be engaged in conversation or contemplation. The image has a black and white, documentary-style aesthetic, capturing a moment in time and highlighting the subjects' pensive demeanor.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-18

This is a black and white photograph that appears to be from a historical period, showing two women standing against a wooden siding wall of a building. One woman is wearing a checkered dress and a head covering or scarf, with her hand near her face in what appears to be a thoughtful or amused gesture. The other woman is wearing a patterned cardigan over a light-colored top and has her arms crossed, looking off to the side with a more serious expression. The wooden wall behind them has horizontal planks and there's a window visible on the left side of the frame. The composition and style suggests this may be a documentary or journalistic photograph, possibly from the mid-20th century.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-04

The image is a black-and-white photograph of two women standing in front of a wooden wall.

The woman on the left is wearing a checkered shirt and a hat. She is holding an apple in her right hand and appears to be taking a bite. The woman on the right is wearing a dark jacket over a white shirt. Her arms are crossed, and she is looking to her left.

The background of the image is a wooden wall with horizontal planks. There is a window on the left side of the wall, and the glass is covered with a piece of paper or fabric. The overall atmosphere of the image suggests that it was taken in a rural or outdoor setting, possibly during the 1950s or 1960s.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-04

The image is a black-and-white photograph of two young Black girls standing in front of a wooden wall. The girl on the left wears a checkered shirt and a hat with a pom-pom, while the girl on the right has her hair styled in an updo and wears a dark jacket over a white shirt. Both girls have their arms crossed, with the girl on the left holding an apple in her right hand.

In the background, there is a window on the left side of the image, which appears to be covered with a piece of glass or plastic that has been scratched or etched. The overall atmosphere of the image suggests a casual, everyday moment captured in time.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-05-27

The image is a black-and-white photograph of two women sitting on a wooden wall. The woman on the left is wearing a hat and is holding a piece of food in her hand. The woman on the right is wearing a jacket and has her arms crossed. Behind them is a window with a wooden frame. The wall is made of wood and has a worn-out look. The image has a vintage feel to it.

Created by amazon.nova-pro-v1:0 on 2025-05-27

The black-and-white image features two women standing against a wooden wall. The woman on the left is wearing a checkered shirt and a hat. She is holding something in her hand and appears to be eating. The woman on the right is wearing a jacket and a white inner shirt. She is looking to the left and has her arms crossed. Behind them is a window with a wooden frame.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-16

Here's a description of the image:

Overall:

The image is a black and white photograph showing two young Black women posing outdoors against a wooden wall. It has a vintage feel, suggesting it was taken some time ago.

Details:

- Setting: The background consists of a wall built with horizontal wooden planks. To the left of the frame is a window, its panes filled with what appears to be a white substance (likely paint or grime).

- People:

  - On the left, a young woman wears a cap with a pom-pom and a checkered shirt. She is holding a piece of fruit (likely an apple) and appears to be taking a bite.

  - On the right, another young woman is wearing a patterned jacket over a white shirt. She is holding a book, and her arms are crossed.

- Mood/Style: The photo has a candid and somewhat contemplative mood. The composition is simple but effective, with the figures contrasted against the plain wooden background. The black and white tones add to its timeless quality.

Created by gemini-2.0-flash on 2025-06-16

The black and white photograph shows two young women. To the left, one girl wears a hat with a pompom and is eating an apple. The girl to the right has her hair neatly curled to the side and wears a patterned tweed jacket over a white shirt. She holds a book against her chest with her arms crossed and looks off to the right. Both girls appear to be teenagers. They stand in front of a wooden wall with horizontal planks, next to a grimy, partially obscured window. The overall tone of the image is somber and contemplative.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-05-29

The image is a black-and-white photograph depicting two individuals standing against a wooden wall. The wall appears to be part of a rustic building, with horizontal wooden planks and a window to the left.

The individual on the left is wearing a checkered shirt and a headscarf, and seems to be eating something. The individual on the right is dressed in a patterned dress and has their arms crossed, looking off to the side with a somewhat serious expression. The setting and attire suggest a historical or rural context, possibly from the mid-20th century. The overall mood of the photograph is somber and reflective.