Machine Generated Data

Face analysis

AWS Rekognition

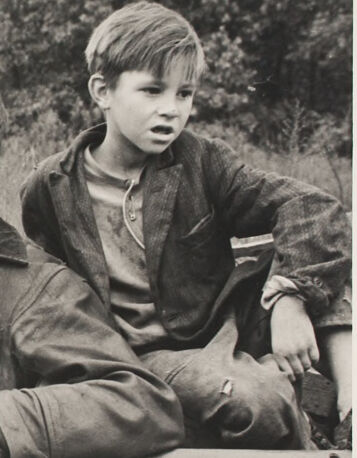

| Attribute | Value |
| --- | --- |
| Age | 2-8 |
| Gender | Female, 51.6% |
| Sad | 72.6% |
| Calm | 13.4% |
| Angry | 4.4% |
| Fear | 4% |
| Confused | 3.9% |
| Surprised | 0.7% |
| Happy | 0.6% |
| Disgusted | 0.4% |

Feature analysis

AWS Rekognition

| Label | Confidence |
| --- | --- |
| Person | 99.2% |

Categories

Imagga

created on 2021-12-15

| Category | Confidence |
| --- | --- |
| paintings art | 98.5% |
| people portraits | 1.2% |

Captions

Microsoft

created by unknown on 2021-12-15

| Caption | Confidence |
| --- | --- |
| a man sitting on a bench | 76.2% |
| an old photo of a man | 76.1% |
| a man sitting on a boat | 61.7% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-12

photograph of a family in a car.

Salesforce

Created by general-english-image-caption-blip on 2025-05-22

a photograph of a group of people sitting in a truck

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-16

The image depicts a group of people seated in an open-top vehicle, possibly a vintage car. The vehicle shows simplicity in design, with a visible steering wheel and dashboard. The individuals are dressed in rugged, worn clothing, suggesting a rural or working-class environment. The background features a natural landscape with trees and vegetation, creating an outdoor, rustic atmosphere. The image conveys a historical or documentary-like quality.

Created by gpt-4o-2024-08-06 on 2025-06-16

The image depicts a vintage open-top vehicle with four individuals seated inside. The driver is wearing a heavy jacket and gloves, gripping the steering wheel with one hand while resting the other on the side of the vehicle. The passenger seat is occupied, and another person is seated in the back, dressed in casual, somewhat rugged attire. The backdrop shows a natural setting with trees and tall grass, suggestive of a countryside or rural environment. The overall appearance gives a sense of early to mid-20th century, both from the style of the vehicle and the clothing of the individuals.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-18

The image depicts a family sitting in an old, worn-down vehicle. The man in the center appears to be the father, with a gruff, weathered expression on his face. Next to him is a woman, likely the mother, and a young child, possibly a baby, being held by the father. There is also another young child, a boy, sitting in the vehicle with the family. The setting appears to be a rural, wooded area, with trees and foliage visible in the background. The overall scene conveys a sense of hardship and struggle, with the family's worn clothing and the dilapidated state of the vehicle suggesting difficult living conditions.

Created by us.anthropic.claude-3-opus-20240229-v1:0 on 2025-06-18

The black and white image shows a man sitting in an old truck or tractor with two young boys. The man has a beard and is wearing a hat, jacket and gloves, suggesting he may be a farmer or laborer. One boy is sitting in the man's lap while the other stands next to them in the vehicle. They appear to be in a rural, wooded area based on the trees visible in the background. The photograph has an aged, vintage quality to it, capturing a slice of life from an earlier era.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-18

This is a black and white photograph that appears to be from an earlier era, possibly the 1930s or 1940s. It shows four people sitting in what appears to be an old automobile or truck. The vehicle has a simple, utilitarian design with a basic steering wheel visible. The occupants are dressed in work clothes, including what appears to be denim or canvas work wear, and the adult in the middle is wearing a cap typical of working-class attire from that period. There's also someone wearing a knit hat, and a child is visible on the right side. The photograph has a documentary or journalistic quality to it, possibly capturing rural or working-class American life during that period. The background shows some hills or elevated terrain with vegetation.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-09

The image depicts a black-and-white photograph of a family of four, consisting of two adults and two children, seated in the back of an open-top vehicle. The man, wearing a cap and a jacket, sits in the front, with his left arm resting on the edge of the vehicle. The woman, dressed in a hat and a jacket, sits behind him, holding a baby wrapped in a blanket. A young boy, wearing a jacket, sits on the right side of the vehicle.

The vehicle appears to be an old-fashioned car or truck, with a steering wheel visible on the left side. The background of the image features a field with trees in the distance, suggesting a rural setting. The overall atmosphere of the image is one of simplicity and rustic charm, capturing a moment in time of a family's daily life.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-09

The image is a black-and-white photograph of a family of five in an open-top vehicle, possibly a truck or car, with a steering wheel on the left side. The family members are dressed in casual attire, with the father wearing a cap and the mother wearing a hat. There are three children, two boys and a baby, who appear to be between the ages of 1 and 10 years old.

The background of the image features trees and grass, suggesting that the family is outdoors, possibly on a farm or in a rural area. The overall atmosphere of the image is one of simplicity and rustic charm, capturing a moment in time for this family.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-08

The image is a black-and-white photograph depicting a family of four sitting in an old car. The car is parked in a grassy field, with trees and hills in the background. The family consists of two adults and two children. The man in the front seat is wearing a hat and gloves, and he is holding the steering wheel. The woman next to him is wearing a bonnet and holding a baby. The boy next to her is looking at the camera.

Created by amazon.nova-pro-v1:0 on 2025-06-08

The black-and-white photo shows a family sitting inside a car. The man is sitting in front of the steering wheel, wearing a hat and gloves, and looking at the camera. He is wearing a long-sleeve shirt with a pocket on the left side. Behind him is a woman wearing a hat, holding a baby in her arms. The boy is sitting next to the woman, wearing a jacket. Behind them are trees.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-16

Here is a description of the image:

The image is a black and white photograph depicting a family in a car, likely during the Great Depression era. The car appears to be an older model, with a visible steering wheel and windshield frame.

The family consists of four people:

- A man in the driver's seat, wearing a cap, work clothes, and gloves. He has a weathered face, suggesting hard labor.

- A woman in the back, wearing a hat, holding a baby. She looks concerned.

- A baby, wrapped in a hat and scarf, asleep in the mother's arms.

- A young boy sitting next to the driver, with a serious expression.

The background shows a forested area, and the overall mood of the image conveys a sense of hardship and concern. The photographer has captured a moment in time with a strong sense of realism and emotion.

Created by gemini-2.0-flash on 2025-06-16

Certainly! Here's a description of the image:

Overall Impression:

The image is a black and white photograph featuring a family in what appears to be an old, open-top car, possibly from the early to mid-20th century. The setting seems to be rural, given the background of trees and fields. The tone of the image is somber, and the expressions on the faces of the family members suggest a sense of hardship or worry.

Detailed Description:

The Family: The photograph shows a father, mother, a young boy, and a baby.

- The father is at the wheel. He appears rugged, with a weathered face and a cap on his head. He has a worn jacket and work gloves. His gaze is directed forward with a serious expression.

- The mother is behind him. She wears a knitted cap and has a concerned look on her face.

- The young boy is beside the father. He is in a jacket and appears to be looking off to the side with a somewhat worried expression.

- The baby is nestled in the mother's arms, appearing to be asleep or at least very quiet.

The Car: The car is an older model with a simple design, possibly a Ford Model T. The front section of the car is visible, including the steering wheel, windshield frame, and side panels.

Background: The background consists of a landscape with fields or meadows and trees. The sky is overcast.

Possible Interpretations:

The photograph may be depicting a family who is on the move due to economic hardship, like those who migrated during the Dust Bowl era in the United States. The worn appearance of the people, the simple vehicle, and the rural setting all suggest a life of difficulty. The image captures a sense of resilience in the face of adversity.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-01

The image is a black-and-white photograph depicting a family of four seated in an old, open-top vehicle, possibly a truck or a farm vehicle. The setting appears to be rural, with a field and trees visible in the background.

In the foreground, an older man with a weathered face and wearing a cap is seated in the driver's position, with his hands resting on the vehicle's side. To his right, a young boy, who appears to be around 8-10 years old, is seated and looking directly at the camera. Behind the older man, a woman wearing a headscarf or hat is holding a baby, who is also looking at the camera. The expressions on their faces are serious, possibly reflecting the hardships or the solemnity of their circumstances.

The clothing worn by the individuals suggests a time period from the early to mid-20th century, and their attire is practical and typical of rural or farming communities. The overall mood of the photograph conveys a sense of resilience and perhaps a glimpse into the daily life of a farming family during that era.

Qwen

No captions written