Machine Generated Data

Tags

Amazon

created on 2019-04-07

| Tag | Confidence |
|---|---|
| Person | 99.8 |
| Human | 99.8 |
| Person | 99.8 |
| Person | 99.6 |
| Person | 99.5 |
| Apparel | 99 |
| Clothing | 99 |
| Person | 98.9 |
| Person | 96.2 |
| People | 89.2 |
| Shoe | 87.9 |
| Footwear | 87.9 |
| Shoe | 84.1 |
| Shoe | 83.8 |
| Shoe | 77.6 |
| Shoe | 74.7 |
| Shoe | 67.8 |
| Housing | 64.4 |
| Building | 64.4 |
| Family | 64 |
| Outdoors | 63.5 |
| Robe | 63.3 |
| Gown | 63.3 |
| Evening Dress | 63.3 |
| Fashion | 63.3 |
| Sleeve | 60 |
| Dress | 56.5 |
| Female | 56 |

Clarifai

created on 2018-09-18

Imagga

created on 2018-09-18

| Tag | Confidence |
|---|---|
| kin | 61.8 |
| statue | 20.7 |
| people | 20.1 |
| man | 16.2 |
| world | 14.6 |
| male | 14.4 |
| sculpture | 13.5 |
| ancient | 13 |
| portrait | 12.9 |
| old | 12.5 |
| adult | 12.4 |
| child | 12.4 |
| travel | 12 |
| culture | 12 |
| person | 11.8 |
| history | 11.6 |
| clothing | 11.6 |
| face | 11.4 |
| mother | 11 |
| traditional | 10.8 |
| soldier | 10.7 |
| art | 10.6 |
| uniform | 10.3 |
| dress | 9.9 |
| women | 9.5 |
| historical | 9.4 |
| architecture | 9.4 |
| monument | 9.3 |
| city | 9.1 |
| girls | 9.1 |
| tourism | 9.1 |
| parent | 8.9 |
| antique | 8.7 |
| stone | 8.5 |
| religion | 8.1 |
| army | 7.8 |
| marble | 7.7 |
| military | 7.7 |
| fashion | 7.5 |
| park | 7.4 |
| historic | 7.3 |
| lady | 7.3 |
| smile | 7.1 |

Google

created on 2018-09-18

| Tag | Confidence |
|---|---|
| photograph | 96.1 |
| black and white | 88.8 |
| standing | 86.3 |
| infrastructure | 86.1 |
| snapshot | 81.8 |
| vintage clothing | 81.2 |
| photography | 79.8 |
| dress | 78.7 |
| monochrome photography | 75.8 |
| family | 73.1 |
| house | 67.8 |
| girl | 65.4 |
| monochrome | 62.6 |
| street | 56.8 |

Color Analysis

Face analysis

Amazon

Microsoft

AWS Rekognition

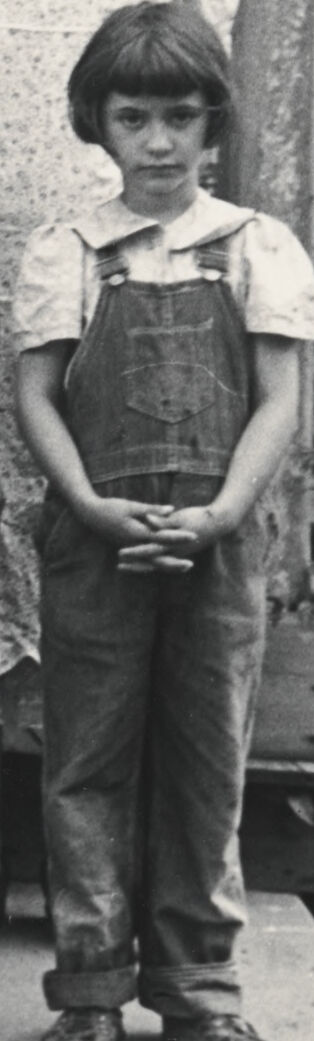

| Attribute | Value |
|---|---|
| Age | 10-15 |
| Gender | Female, 99.3% |
| Disgusted | 5.3% |
| Angry | 44.8% |
| Calm | 3.4% |
| Sad | 32.6% |
| Happy | 9.3% |
| Confused | 1.2% |
| Surprised | 3.5% |

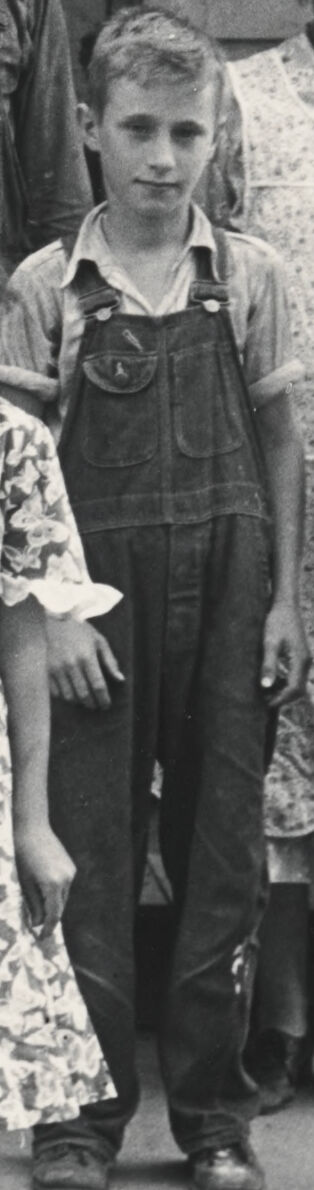

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 10-15 |
| Gender | Female, 99.7% |
| Confused | 7.8% |
| Calm | 32.1% |
| Angry | 32.1% |
| Sad | 23.6% |
| Surprised | 1% |
| Disgusted | 1.9% |
| Happy | 1.4% |

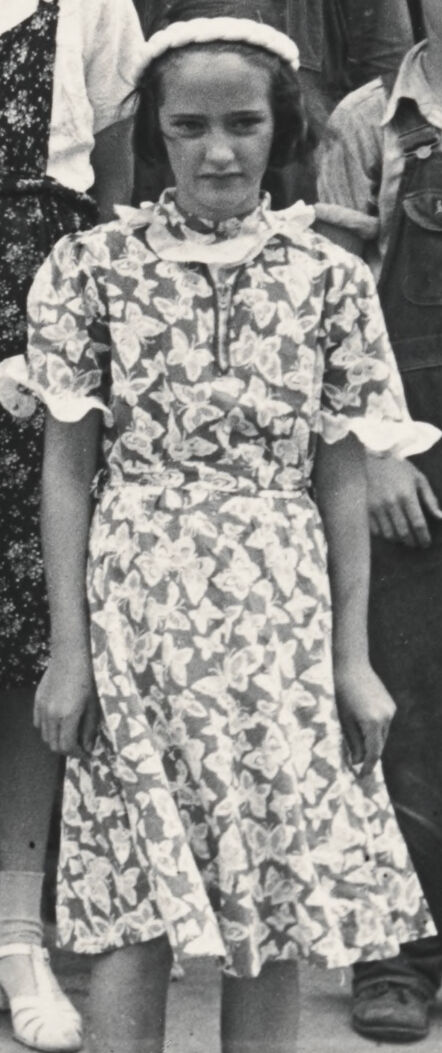

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 10-15 |
| Gender | Female, 98.1% |
| Calm | 8.7% |
| Confused | 4.3% |
| Surprised | 1.5% |
| Disgusted | 3% |
| Happy | 1.9% |
| Angry | 8.3% |
| Sad | 72.5% |

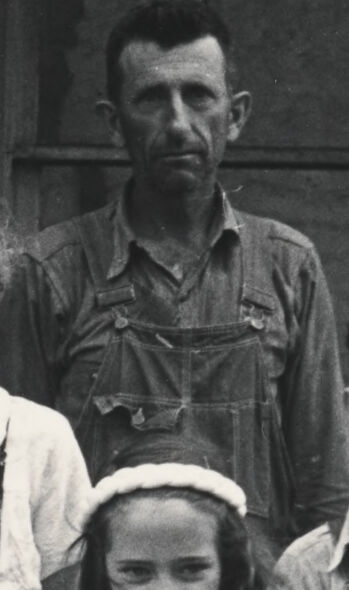

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 20-38 |
| Gender | Male, 91.7% |
| Sad | 86.8% |
| Angry | 3.2% |
| Disgusted | 0.9% |
| Surprised | 0.4% |
| Calm | 7.6% |
| Happy | 0.3% |
| Confused | 0.8% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 48-68 |
| Gender | Female, 94.8% |
| Angry | 12.1% |
| Disgusted | 5.8% |
| Happy | 6.8% |
| Sad | 30.2% |
| Calm | 38.3% |
| Confused | 2.8% |
| Surprised | 4% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 49-69 |
| Gender | Male, 94.1% |
| Happy | 0.6% |
| Surprised | 1% |
| Disgusted | 3.5% |
| Confused | 2.3% |
| Angry | 14.8% |
| Calm | 61.1% |
| Sad | 16.8% |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 15 |
| Gender | Male |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 50 |
| Gender | Male |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 25 |
| Gender | Female |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 48 |
| Gender | Female |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 30 |
| Gender | Female |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 10 |
| Gender | Female |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very likely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very likely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| people portraits | 49.5% |
| streetview architecture | 37.2% |
| paintings art | 12% |
| events parties | 0.7% |
| nature landscape | 0.3% |
| pets animals | 0.2% |
| interior objects | 0.1% |

Captions

Microsoft

created on 2018-09-18

| Caption | Confidence |
|---|---|
| a group of people posing for a photo in front of a building | 97.5% |
| a group of people posing for a photo in front of a brick building | 95.7% |
| a group of people standing in front of a building | 95.6% |

Anthropic Claude

Created by claude-3-haiku-20240307 on 2024-12-31

The image shows a family standing outside of a wooden building. The family consists of an older man, a middle-aged woman, a young woman, two young boys, and a young girl. They are dressed in clothing typical of the era, with the adults wearing simple work clothes and the children wearing dresses and overalls. The background shows a wooden structure with a flower planter outside. The overall scene has a rustic, rural feel to it.

Created by claude-3-opus-20240229 on 2024-12-31

The black and white image shows a family posing together on the porch of a rustic wooden house. There are six children of varying ages - four boys and two girls. The children are dressed in old-fashioned clothing styles typical of the early-to-mid 20th century, with the girls wearing dresses and the boys in overalls or dungarees. A man who appears to be the father is standing behind the children. Potted flowers can be seen in the foreground, adding some decoration to the simple wooden porch. Overall, the photograph captures a slice of rural American family life from a bygone era.

Created by claude-3-5-sonnet-20241022 on 2024-12-31

This is a vintage black and white photograph that appears to be from the 1930s or 1940s. It shows a family group standing on the porch of a wooden house. The people are dressed in typical rural American clothing of the era - the children in overalls and cotton dresses, while the adult male wears work overalls and the adult female wears a dress with an apron and head covering. The porch has wooden posts and there are flowers planted along the side, including what appears to be some small white blooms. The composition has a documentary-style quality typical of Depression-era photography. The wooden house has horizontal siding and there appears to be a hanging plant visible on the left side of the frame.