Machine Generated Data

Tags

Amazon

created on 2021-12-14

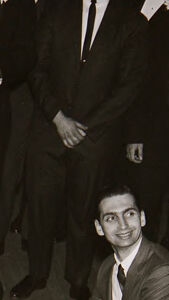

| Tag | Confidence |
|---|---|
| Person | 99.7 |
| Human | 99.7 |
| Person | 99 |
| Clothing | 98.3 |
| Apparel | 98.3 |
| Person | 97.9 |
| Person | 97.8 |
| Person | 96.3 |
| Chair | 94.6 |
| Furniture | 94.6 |
| Person | 89.7 |
| Dressing Room | 87.8 |
| Room | 87.8 |
| Indoors | 87.8 |
| Person | 85 |
| Overcoat | 82.8 |
| Coat | 82.8 |
| Person | 80.7 |
| Person | 78.4 |
| Shoe | 75.8 |
| Footwear | 75.8 |
| Person | 75.8 |
| Shoe | 75.6 |
| People | 59.2 |
| Shoe | 59.1 |
| Sitting | 56.7 |
| Suit | 55.4 |
| Shoe | 51.8 |

Clarifai

created on 2023-10-15

| Tag | Confidence |
|---|---|
| people | 99.7 |
| group | 98.8 |
| group together | 97.7 |
| many | 95.9 |
| man | 95.3 |
| woman | 94.5 |
| adult | 93.9 |
| monochrome | 93 |
| music | 91.9 |
| leader | 86.6 |
| recreation | 86.6 |
| several | 85.3 |
| administration | 84.4 |
| war | 83.6 |
| furniture | 82.6 |
| child | 82.1 |
| family | 81.6 |
| street | 81.2 |
| boy | 80.6 |
| party | 80.3 |

Imagga

created on 2021-12-14

| Tag | Confidence |
|---|---|
| shoe shop | 88.3 |
| shop | 69.7 |
| mercantile establishment | 54 |
| place of business | 35.9 |
| man | 27.7 |
| people | 25.1 |
| male | 24.1 |
| person | 21.2 |
| establishment | 17.9 |
| men | 16.3 |
| business | 15.8 |
| businessman | 13.2 |
| adult | 13.2 |
| room | 12.1 |
| black | 12 |
| education | 11.2 |
| hand | 10.6 |
| success | 10.5 |
| team | 9.8 |
| student | 9.8 |
| old | 9.7 |
| blackboard | 9.6 |
| women | 9.5 |
| happy | 9.4 |
| to | 8.8 |
| interior | 8.8 |
| boy | 8.7 |
| teacher | 8.4 |
| portrait | 8.4 |
| child | 8.3 |
| sign | 8.3 |
| human | 8.2 |
| indoor | 8.2 |
| cheerful | 8.1 |
| suit | 8.1 |
| group | 8.1 |
| light | 8 |
| home | 8 |
| smiling | 7.9 |
| couple | 7.8 |
| school | 7.8 |
| modern | 7.7 |
| senior | 7.5 |
| world | 7.5 |
| symbol | 7.4 |
| decoration | 7.4 |
| girls | 7.3 |
| family | 7.1 |
| love | 7.1 |

Google

created on 2021-12-14

| Tag | Confidence |
|---|---|
| Photograph | 94.2 |
| Coat | 92.9 |
| Black | 89.6 |
| Human | 89 |
| Black-and-white | 86.2 |
| Dress | 84.9 |
| Style | 84.1 |
| Suit | 81.8 |
| Monochrome photography | 79.8 |
| Adaptation | 79.5 |
| Monochrome | 77.7 |
| Vintage clothing | 76.2 |
| Plant | 75.7 |
| Formal wear | 74.6 |
| Event | 73.4 |
| Font | 69.5 |
| Room | 68.7 |
| History | 66.7 |
| Stock photography | 66.3 |
| Happy | 65.7 |


Face analysis


AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 22-34 |
| Gender | Male, 99.1% |
| Happy | 96.1% |
| Surprised | 2.6% |
| Confused | 0.3% |
| Calm | 0.3% |
| Angry | 0.2% |
| Fear | 0.1% |
| Disgusted | 0.1% |
| Sad | 0.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 28-44 |
| Gender | Female, 97.8% |
| Happy | 97% |
| Angry | 0.9% |
| Calm | 0.8% |
| Fear | 0.6% |
| Sad | 0.3% |
| Surprised | 0.2% |
| Disgusted | 0.1% |
| Confused | 0.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 12-22 |
| Gender | Male, 90.6% |
| Calm | 56.9% |
| Sad | 37.9% |
| Confused | 1.6% |
| Surprised | 1.3% |
| Angry | 1% |
| Fear | 0.6% |
| Happy | 0.5% |
| Disgusted | 0.2% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 27-43 |
| Gender | Female, 94.9% |
| Sad | 68.1% |
| Happy | 19.4% |
| Fear | 5.1% |
| Calm | 2.1% |
| Surprised | 1.7% |
| Confused | 1.5% |
| Angry | 1.4% |
| Disgusted | 0.7% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 32-48 |
| Gender | Female, 50.5% |
| Sad | 82.1% |
| Calm | 9.1% |
| Surprised | 3.1% |
| Fear | 1.7% |
| Confused | 1.6% |
| Disgusted | 1.4% |
| Happy | 0.6% |
| Angry | 0.5% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 33-49 |
| Gender | Male, 96.3% |
| Calm | 79.9% |
| Surprised | 6.9% |
| Sad | 4% |
| Confused | 3.8% |
| Happy | 2.3% |
| Fear | 1.7% |
| Disgusted | 0.8% |
| Angry | 0.6% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 39-57 |
| Gender | Male, 90.8% |
| Calm | 53.6% |
| Surprised | 45.1% |
| Fear | 0.5% |
| Angry | 0.2% |
| Confused | 0.2% |
| Sad | 0.2% |
| Happy | 0.1% |
| Disgusted | 0.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 5-15 |
| Gender | Male, 65% |
| Angry | 44% |
| Calm | 30% |
| Sad | 18.4% |
| Fear | 3.2% |
| Confused | 1.5% |
| Happy | 1.4% |
| Surprised | 0.7% |
| Disgusted | 0.7% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 28-44 |
| Gender | Male, 58.6% |
| Sad | 68.7% |
| Calm | 27.3% |
| Fear | 1.5% |
| Angry | 1% |
| Confused | 0.5% |
| Happy | 0.4% |
| Surprised | 0.4% |
| Disgusted | 0.3% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 27-43 |
| Gender | Female, 51.1% |
| Angry | 31.5% |
| Calm | 29.7% |
| Fear | 22.1% |
| Surprised | 12.9% |
| Sad | 1.3% |
| Happy | 1.3% |
| Confused | 0.7% |
| Disgusted | 0.5% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 29-45 |
| Gender | Male, 80% |
| Fear | 54.9% |
| Calm | 34.4% |
| Surprised | 4.7% |
| Angry | 3.2% |
| Happy | 2% |
| Sad | 0.5% |
| Disgusted | 0.2% |
| Confused | 0.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 15-27 |
| Gender | Male, 55.6% |
| Angry | 62.3% |
| Calm | 23.1% |
| Fear | 6% |
| Sad | 3% |
| Happy | 2.5% |
| Surprised | 1.4% |
| Disgusted | 1.1% |
| Confused | 0.7% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 11-21 |
| Gender | Female, 80.2% |
| Calm | 70.1% |
| Sad | 29% |
| Happy | 0.6% |
| Confused | 0.2% |
| Angry | 0% |
| Surprised | 0% |
| Fear | 0% |
| Disgusted | 0% |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 33 |
| Gender | Male |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very likely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Likely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very likely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |


Categories

Imagga

| Category | Confidence |
|---|---|
| people portraits | 50.6% |
| paintings art | 28.3% |
| food drinks | 16.6% |
| text visuals | 1.3% |
| events parties | 1.1% |

Captions

Microsoft

created on 2021-12-14

| Caption | Confidence |
|---|---|
| Mikhail Tal et al. standing in front of a crowd posing for the camera | 88.7% |
| Mikhail Tal et al. posing for a photo in front of a crowd | 88.6% |
| Mikhail Tal et al. standing in front of a crowd | 88.5% |

Text analysis

Amazon

121

55%

p133

P133

55%0

P133

55%0