Machine Generated Data

Tags

Amazon

created on 2021-12-14

| Tag | Confidence (%) |
|---|---|
| Person | 99.4 |
| Human | 99.4 |
| Person | 99.3 |
| Person | 98.2 |
| Person | 98 |
| Person | 97.7 |
| Monitor | 97.7 |
| Electronics | 97.7 |
| Screen | 97.7 |
| Display | 97.7 |
| Person | 97.4 |
| Person | 95.1 |
| Military | 93.9 |
| Military Uniform | 93.6 |
| Person | 91.8 |
| Army | 84.6 |
| Armored | 84.6 |
| People | 82.3 |
| Person | 81.8 |
| Person | 79.1 |
| Person | 71.5 |
| Person | 69.8 |
| Soldier | 68.1 |
| Officer | 67.6 |
| Person | 62.2 |

Clarifai

created on 2023-10-15

| Tag | Confidence (%) |
|---|---|
| people | 99.9 |
| group | 99.2 |
| adult | 98.3 |
| monochrome | 98.2 |
| woman | 96.9 |
| man | 96.7 |
| child | 95.4 |
| furniture | 93.9 |
| group together | 93.8 |
| war | 93.7 |
| wear | 93.5 |
| indoors | 93.4 |
| movie | 93.4 |
| portrait | 93.2 |
| family | 92.3 |
| uniform | 90.4 |
| medical practitioner | 90.2 |
| room | 88.9 |
| documentary | 88.7 |
| administration | 87.8 |

Imagga

created on 2021-12-14

Google

created on 2021-12-14

| Tag | Confidence (%) |
|---|---|
| Photograph | 94.2 |
| Picture frame | 92.6 |
| Rectangle | 82.7 |
| Suit | 80.5 |
| Font | 80.2 |
| Technology | 76.4 |
| Electronic device | 74.5 |
| Snapshot | 74.3 |
| Television set | 72.5 |
| Event | 71.1 |
| Display device | 68.7 |
| Vintage clothing | 67.7 |
| Monochrome photography | 67.7 |
| Monochrome | 67.1 |
| Stock photography | 66.6 |
| Room | 66.4 |
| Art | 64.7 |
| Crew | 61.3 |
| History | 61 |
| Team | 60.9 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 34-50 |
| Gender | Female, 84.7% |
| Surprised | 75.1% |
| Calm | 19.5% |
| Happy | 2.1% |
| Fear | 1.2% |
| Sad | 0.7% |
| Confused | 0.5% |
| Angry | 0.4% |
| Disgusted | 0.3% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 16-28 |
| Gender | Female, 86% |
| Calm | 97.7% |
| Sad | 1.9% |
| Angry | 0.2% |
| Happy | 0.1% |
| Disgusted | 0% |
| Fear | 0% |
| Confused | 0% |
| Surprised | 0% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 13-25 |
| Gender | Female, 91% |
| Calm | 53.3% |
| Sad | 33.9% |
| Confused | 4.2% |
| Fear | 2.9% |
| Surprised | 2.2% |
| Happy | 2.2% |
| Angry | 1% |
| Disgusted | 0.3% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 11-21 |
| Gender | Female, 88.7% |
| Calm | 87.6% |
| Sad | 9.6% |
| Angry | 1.4% |
| Confused | 0.7% |
| Surprised | 0.3% |
| Fear | 0.2% |
| Happy | 0.1% |
| Disgusted | 0.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 5-15 |
| Gender | Female, 81.7% |
| Sad | 64.3% |
| Calm | 33.9% |
| Angry | 0.7% |
| Fear | 0.5% |
| Happy | 0.2% |
| Confused | 0.2% |
| Surprised | 0.1% |
| Disgusted | 0.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 49-67 |
| Gender | Male, 80.2% |
| Calm | 71.8% |
| Surprised | 20.5% |
| Sad | 4.1% |
| Fear | 2.6% |
| Angry | 0.5% |
| Confused | 0.3% |
| Happy | 0.1% |
| Disgusted | 0.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 10-20 |
| Gender | Female, 99.7% |
| Calm | 89.6% |
| Sad | 8.5% |
| Disgusted | 0.6% |
| Angry | 0.5% |
| Happy | 0.3% |
| Confused | 0.2% |
| Fear | 0.1% |
| Surprised | 0.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 46-64 |
| Gender | Male, 60.2% |
| Calm | 90.9% |
| Sad | 7.1% |
| Fear | 0.9% |
| Surprised | 0.5% |
| Confused | 0.2% |
| Angry | 0.2% |
| Disgusted | 0.1% |
| Happy | 0.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 20-32 |
| Gender | Male, 91.6% |
| Calm | 87.3% |
| Sad | 6.6% |
| Happy | 3.2% |
| Angry | 0.9% |
| Confused | 0.6% |
| Surprised | 0.5% |
| Disgusted | 0.4% |
| Fear | 0.4% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 14-26 |
| Gender | Male, 62% |
| Calm | 92.5% |
| Fear | 3.6% |
| Happy | 1.3% |
| Surprised | 1.1% |
| Sad | 1% |
| Angry | 0.3% |
| Confused | 0.2% |
| Disgusted | 0.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 9-19 |
| Gender | Female, 64.9% |
| Calm | 80.9% |
| Sad | 11.7% |
| Angry | 2.2% |
| Disgusted | 1.7% |
| Surprised | 1% |
| Fear | 0.9% |
| Happy | 0.9% |
| Confused | 0.8% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 38-56 |
| Gender | Female, 81.8% |
| Surprised | 47.4% |
| Calm | 33.2% |
| Angry | 8.6% |
| Disgusted | 3.3% |
| Fear | 2.7% |
| Sad | 2.2% |
| Happy | 1.8% |
| Confused | 0.9% |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Likely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Likely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Likely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very likely |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 96.3% |
| text visuals | 2.5% |

Captions

Microsoft

created on 2021-12-14

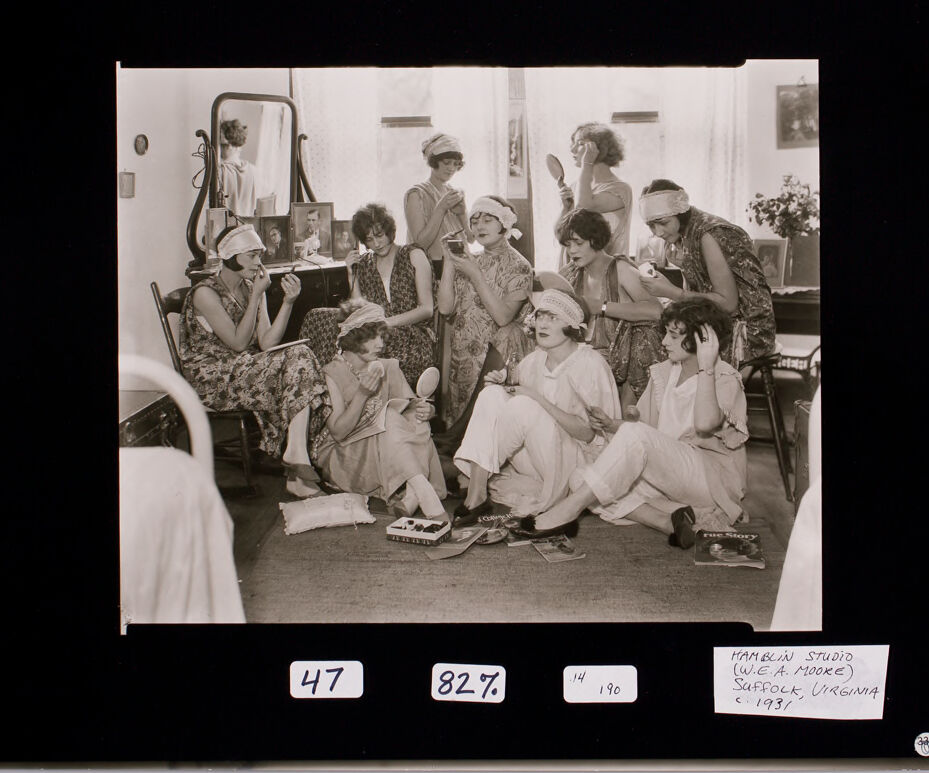

| Caption | Confidence |
|---|---|
| a group of people posing for a photo | 93.4% |
| a group of people posing for a photo in front of a window | 87.7% |
| a screen shot of a group of people posing for a photo | 87.6% |

Text analysis

Amazon

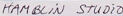

190

47

82%

VIRGINIA

MOOKE)

Suffock, VIRGINIA

(W.E.A. MOOKE)

HAMBLIN

HAMBLIN STUDIO

STUDIO

(W.E.A.

Suffock,

14

c.1931

age

THe Stor

82%

HAM BLIN STUDIO

(W.E.A. MOOK E)

SuffoLk, UIRGINIA

C.1931

47

14

190

THe

Stor

82%

HAM

BLIN

STUDIO

(W.E.A.

MOOK

E)

SuffoLk,

UIRGINIA

C.1931

47

14

190