Machine Generated Data

Tags

Amazon

created on 2019-11-10

Clarifai

created on 2019-11-10

Imagga

created on 2019-11-10

| Tag | Score |
|---|---|
| kin | 78.6 |
| statue | 33.9 |
| sculpture | 26.9 |
| religion | 22.4 |
| old | 21.6 |
| dress | 19.9 |
| monument | 19.6 |
| ancient | 19 |
| people | 19 |
| art | 18.3 |
| culture | 16.2 |
| history | 16.1 |
| bride | 15.9 |
| couple | 15.7 |
| person | 15.6 |
| portrait | 15.5 |
| marble | 15.5 |
| religious | 15 |
| antique | 13.9 |
| stone | 13.6 |
| historical | 13.2 |
| face | 12.8 |
| groom | 12.5 |
| adult | 12.4 |
| detail | 12.1 |
| fashion | 12.1 |
| wedding | 12 |
| catholic | 11.8 |
| happiness | 11.7 |
| architecture | 11.7 |
| man | 11.5 |
| celebration | 11.2 |
| church | 11.1 |
| love | 11 |
| traditional | 10.8 |
| tourism | 10.7 |
| travel | 10.6 |
| decoration | 10.5 |
| women | 10.3 |
| clothing | 10.3 |
| famous | 10.2 |
| mother | 10.2 |
| two | 10.2 |
| historic | 10.1 |
| figure | 10 |
| bouquet | 9.6 |
| god | 9.6 |
| closeup | 9.4 |
| happy | 9.4 |
| elegance | 9.2 |
| romantic | 8.9 |
| family | 8.9 |
| party | 8.6 |
| color | 8.3 |
| vintage | 8.3 |
| park | 8.2 |
| landmark | 8.1 |
| symbol | 8.1 |
| carving | 8 |
| male | 8 |
| sepia | 7.8 |
| holy | 7.7 |
| faith | 7.7 |
| head | 7.6 |
| cross | 7.5 |
| lady | 7.3 |
| smiling | 7.2 |
| memorial | 7.2 |
| posing | 7.1 |
| world | 7 |

Google

created on 2019-11-10

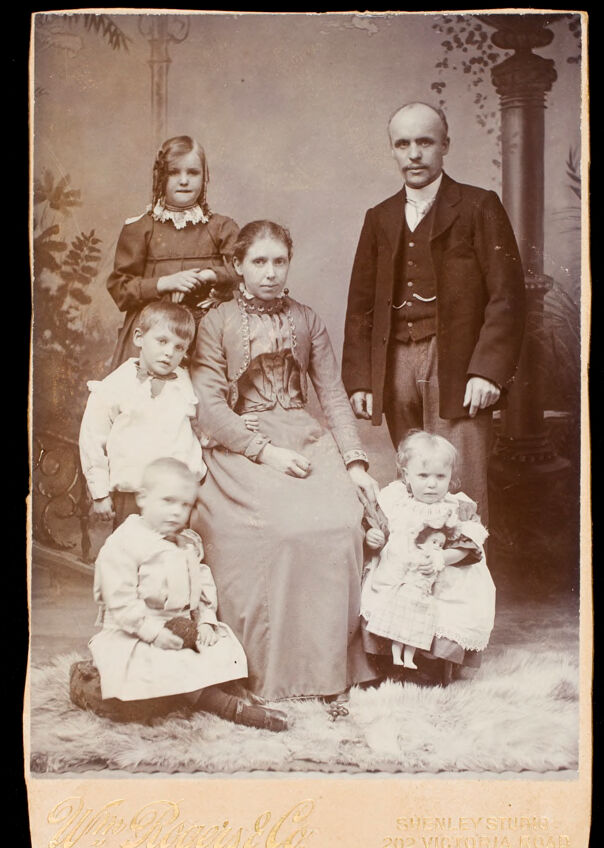

| Label | Score |
|---|---|
| Photograph | 97.4 |
| People | 95.5 |
| Snapshot | 86.7 |
| Photography | 70.6 |
| Family | 69.2 |
| Vintage clothing | 68.9 |
| Classic | 68.6 |
| Stock photography | 68.5 |
| Retro style | 53.4 |
| Gentleman | 51.3 |

Color Analysis

Face analysis

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 34-50 |
| Gender | Male, 95.8% |
| Sad | 0.2% |
| Surprised | 0.1% |
| Confused | 0.3% |
| Disgusted | 0.1% |
| Angry | 0.3% |
| Calm | 98.9% |
| Fear | 0% |
| Happy | 0% |

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 1-5 |
| Gender | Female, 51.5% |
| Sad | 54.2% |
| Confused | 45.1% |
| Disgusted | 45.1% |
| Calm | 45.5% |
| Happy | 45% |
| Angry | 45.1% |
| Fear | 45.1% |
| Surprised | 45% |

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 22-34 |
| Gender | Female, 54.1% |
| Surprised | 45% |
| Calm | 55% |
| Disgusted | 45% |
| Happy | 45% |
| Fear | 45% |
| Sad | 45% |
| Confused | 45% |
| Angry | 45% |

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 4-12 |
| Gender | Female, 53.1% |
| Disgusted | 45% |
| Confused | 45% |
| Angry | 45.4% |
| Fear | 45% |
| Calm | 51.2% |
| Happy | 45% |
| Sad | 48.2% |
| Surprised | 45% |

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 1-7 |
| Gender | Female, 54.5% |
| Angry | 46.2% |
| Sad | 46.7% |
| Calm | 51.5% |
| Confused | 45.1% |
| Surprised | 45.1% |
| Disgusted | 45.1% |
| Fear | 45.2% |
| Happy | 45.1% |

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 1-5 |
| Gender | Female, 53.3% |
| Confused | 45% |
| Disgusted | 45% |
| Happy | 45% |
| Angry | 45.1% |
| Calm | 47% |
| Surprised | 45% |
| Sad | 52.9% |
| Fear | 45% |

Microsoft Cognitive Services

| Attribute | Estimate |
|---|---|
| Age | 44 |
| Gender | Male |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 91.4% |
| people portraits | 8.4% |

Captions

Microsoft

created on 2019-11-10

| Caption | Confidence |
|---|---|
| a vintage photo of a group of people posing for the camera | 90.9% |
| a vintage photo of a group of people posing for a picture | 90.8% |
| a vintage photo of a group of people posing for a photo | 89.8% |

Text analysis

Amazon

202

SHENLEYSTAPE

VIGKORIA

Oais'

202 VIGKORIA KCA

ONE Oais' SHENLEYSTAPE

KCA

ONE

SHENLEY STuto

SHENLEY

STuto