Machine Generated Data

Face analysis

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 31-47 |
| Gender | Male, 54.9% |
| Disgusted | 45% |
| Surprised | 45.8% |
| Sad | 45.1% |
| Angry | 45.2% |
| Fear | 45.4% |
| Happy | 45% |
| Calm | 53.4% |
| Confused | 45% |

Feature analysis

AWS Rekognition

| Label | Confidence |
|---|---|
| Person | 99.7% |

Categories

Imagga

Created on 2019-11-10

| Category | Confidence |
|---|---|
| nature landscape | 67% |
| pets animals | 31.8% |

Captions

Microsoft

created by unknown on 2019-11-10

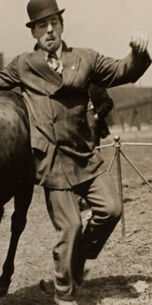

| a vintage photo of a person riding a horse | 94.2% | |

| a vintage photo of a person riding a horse in front of a building | 90.8% | |

| a vintage photo of a person on a horse | 90.7% | |

Clarifai

Created by general-english-image-caption-clip on 2025-07-13

a man with a horse.

Salesforce

Created by general-english-image-caption-blip on 2025-05-15

a photograph of a man riding a horse in a field

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-13

The image depicts a staged outdoor scene where a man is positioned near or interacting with a small donkey in an open field. The donkey is bridled with a rope around its mouth, while the man appears to be in motion, possibly trying to lead or control the animal. Several onlookers in formal and casual attire, including hats and suits, are gathered in the background observing the event. The setting includes an urban backdrop with industrial buildings faintly visible, suggesting that the photo was taken in a city or town environment during the early 20th century. A rope separates the participants from the spectators, indicating an organized event.

Created by gpt-4o-2024-08-06 on 2025-06-13

The image depicts a historical black-and-white scene in an outdoor setting. In the foreground is a donkey with a small, dark saddle and a muzzle on its snout. The donkey appears to be in motion as a man, dressed in a suit and hat, is stepping onto or off the saddle. A rope fence encircles the area where the donkey and the man are located, possibly signifying a performance or competition. In the background, several men dressed in early 20th-century attire, including suits, hats, and overcoats, are observing the scene. The skyline behind them shows industrial buildings, typical of an urban setting during that time period. The ground appears to be dirt, suggesting an outdoor field or fairground.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-20

The image appears to be a historical black-and-white photograph depicting a group of men standing around a donkey or small horse. The man in the center is holding the animal and seems to be demonstrating or presenting it to the other men, who are observing. The background suggests an urban setting, with buildings visible in the distance. The overall scene has a vintage, early-20th-century feel to it.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-20

This is a vintage black and white photograph showing what appears to be a donkey auction or livestock event in an urban setting. A man in a bowler hat and suit is attempting to mount or demonstrate something with a donkey, while several onlookers in period clothing (early 1900s by the style) watch from the sides. There's a rope or barrier line visible, and industrial buildings with smokestacks can be seen in the background, suggesting this is taking place in a city or industrial area. The photograph has some aging and wear around the edges, typical of photographs from this era.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-11

The image is a sepia-toned photograph of a man riding a donkey, with a group of people watching in the background.

The man is wearing a bowler hat and a suit, and he is holding the reins of the donkey with his left hand. The donkey is dark brown with a white patch on its nose, and it appears to be running or trotting. The man's right arm is raised, and his left leg is bent at the knee, as if he is guiding the donkey or preparing to jump off.

In the background, there are several people standing behind a rope barrier. They are all wearing hats and coats, and some of them are looking at the man and the donkey. There are also some buildings and a city skyline visible in the distance.

The overall atmosphere of the image suggests that it was taken at a fair or circus, where the man is performing a stunt or trick with the donkey. The sepia tone gives the image a vintage or nostalgic feel, and the worn edges of the photograph suggest that it may be an old or antique image.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-11

The image depicts a sepia-toned photograph of a man riding a donkey, with a group of men standing behind them. The man is dressed in a suit and bowler hat, while the donkey wears a bridle. A rope is attached to the donkey's bridle, and another rope is held by the men standing behind them.

In the background, a cityscape is visible, featuring buildings and a sign that reads "CUP." The overall atmosphere suggests a scene from the early 20th century, possibly during a circus or carnival event.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-06

The image is a monochromatic photograph that depicts a man in a suit and hat attempting to catch a donkey. The donkey is running away from the man, who is stretching out his hands to try to grab it. The donkey is a small animal with a white muzzle and a bridle. Behind the man, there are several other men standing in a row, all of them wearing hats and shoes. The background shows a building with several windows and a clear sky.

Created by amazon.nova-pro-v1:0 on 2025-06-06

The image shows a man in a suit and hat running while holding a donkey's tail. The donkey is running in the same direction as the man. Behind them, there are other people standing and watching. The area is enclosed by a rope, and there is a man standing near the rope. In the distance, there are buildings and trees. The image has a vintage filter applied.

Google Gemini

Created by gemini-2.0-flash on 2025-05-08

This is a sepia photograph that appears to be from the early 20th century. In the center of the frame, a man in a suit and bowler hat is running alongside a donkey. The man's left hand is on the donkey's back, and his right arm is raised in the air. The donkey has a tag on its ear.

To the left, another man in a suit and hat stands holding a rope that appears to section off an area. Behind the man with the donkey, a group of five men are standing, observing. They are dressed in a variety of suits and hats, typical of the era.

In the background, there is a building complex with several tall structures, possibly industrial buildings or factories, suggesting an urban setting.

The ground is patchy with grass, and the photograph is slightly faded, adding to its antique appearance. The photo is surrounded by a black background.

Created by gemini-2.0-flash-lite on 2025-05-08

Here is a description of the image:

The image is a vintage black and white photograph, possibly from the early 20th century. It features a donkey being led by a man, and other men are observing the scene.

Here's a breakdown:

- Subject: The primary focus is on a dark-colored donkey. It's being led by a man wearing a suit and a bowler hat. The man appears to be running or striding forward, guiding the donkey.

- Setting: The scene seems to be taking place outdoors on a grassy area. There are a few simple, industrial-looking buildings in the background, suggesting it might be a town or city setting.

- Other People: Several other men are present, standing and watching the donkey and the man leading it. They are wearing suits and hats, typical attire of the time period. Some may be officials or spectators.

- Composition: The photograph is framed by a white border, which is consistent with the photography of that era. The composition gives a sense of action and movement, capturing a moment in time.

- Atmosphere: The overall tone is one of formality with the men in their suits and the donkey being part of the event.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-08

This sepia-toned photograph appears to be from the early 20th century. It depicts a man riding a small horse or pony in what seems to be a fair or exhibition setting. The man is dressed in a suit and a bowler hat, and he is holding the reins of the horse with one hand while waving with the other. The horse is adorned with a ribbon or decoration around its neck.

In the background, there are four other men, all dressed in suits and hats typical of the era. They appear to be observing or possibly judging the man on the horse. The setting is outdoors, with a rope barrier separating the participants from the observers. In the far background, there are industrial buildings, suggesting that this event is taking place in or near an urban area.

The overall atmosphere suggests a formal or semi-formal event, possibly a competition or exhibition related to horse riding or animal husbandry. The photograph has an aged appearance, with visible wear and tear around the edges, indicating it is quite old.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-08-08

The image is a historical black-and-white photograph depicting a man leading a small donkey by a rope. The man is dressed in a suit and a hat, and he appears to be in motion, gesturing with his right hand as if he is explaining or demonstrating something. The donkey is small and has a harness, suggesting it might be used for work or as a pet. There are several other individuals in the background, some of whom are also wearing hats and suits, and they are observing the scene. The setting looks like an open field or a rural area, with some buildings and structures visible in the distance. The photograph has a vintage look, indicating it might be from the early 20th century.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-08-08

This image is a black-and-white photograph, likely from the early 20th century, showing a group of men gathered in an outdoor setting, possibly a fair or a show. The central figure is a man dressed in a suit and hat, leading a donkey. The donkey appears to be reluctant or balky, as the man is pulling on its lead with some effort. The man's posture and facial expression suggest that he is trying to control the donkey. In the background, several other men are observing the scene, some of them also wearing hats and suits. The setting appears to be an open field with a fence in the background and some buildings in the distance. The overall atmosphere of the image suggests a sense of humor or lightheartedness, possibly capturing a moment of amusement or challenge at the event.