Machine Generated Data

Face analysis

AWS Rekognition

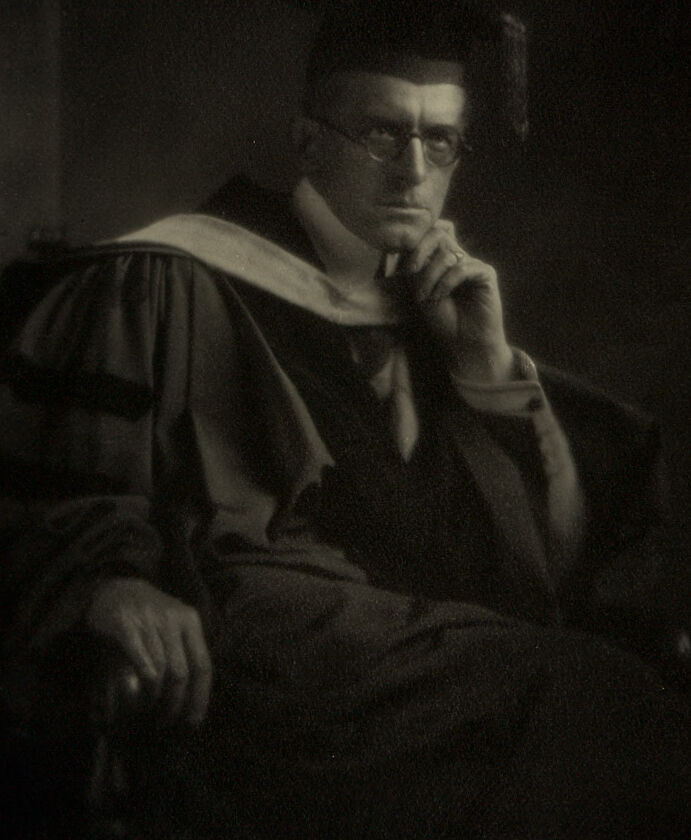

| Attribute | Value |
| --- | --- |
| Age | 35-43 |
| Gender | Male, 99.8% |
| Confused | 54.2% |
| Calm | 28.3% |
| Sad | 10.6% |
| Disgusted | 5.2% |
| Surprised | 0.6% |
| Angry | 0.6% |
| Happy | 0.3% |
| Fear | 0.3% |

Feature analysis

AWS Rekognition

| Label | Confidence |
| --- | --- |
| Person | 97.4% |

Categories

Imagga

Created on 2022-01-08

| Category | Confidence |
| --- | --- |
| streetview architecture | 44.9% |
| paintings art | 24.3% |
| people portraits | 11.9% |
| events parties | 11.6% |
| interior objects | 2.9% |
| text visuals | 2.3% |

Captions

Microsoft

Created by unknown on 2022-01-08

| Caption | Confidence |
| --- | --- |
| a man sitting on a bed | 65.6% |
| a man sitting on a couch | 65.5% |
| a man sitting in a chair | 65.4% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-12

portrait of a man wearing a suit and glasses.

Salesforce

Created by general-english-image-caption-blip on 2025-05-20

a photograph of a man in a graduation gown and glasses

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-08

The image shows a seated individual wearing academic regalia, which includes a gown and hood typically associated with higher education ceremonies. The lighting is subdued, creating a dramatic and solemn atmosphere, while the person appears to be resting their hand near their face in a contemplative pose. The overall tone of the photograph is dark and vintage, suggesting it may be an older portrait.

Created by gpt-4o-2024-08-06 on 2025-06-08

The image depicts a person wearing academic robes, typically associated with graduation or an academic ceremony, seated in a dignified pose. The robes are dark in color, featuring distinct large sleeves and a lighter-colored hood draped over the shoulders. The individual is resting in an armchair with one hand placed on the armrest while the other hand is positioned near the face. The lighting in the photograph creates a dramatic effect, highlighting the textures and folds of the robes. The overall composition gives a sense of formality and scholastic achievement.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-14

The image appears to be a black and white portrait photograph of a man. The man is seated, wearing a dark robe or cloak, and has a pensive, thoughtful expression on his face as he gazes directly at the camera. The lighting creates a dramatic, chiaroscuro effect, casting shadows across the man's features and creating a somber, introspective mood.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-14

This is a dramatic black and white portrait photograph, likely from the early 20th century, showing a scholarly figure in academic regalia. The subject is wearing glasses and academic robes with a distinctive collar or stole, and is posed in a contemplative position with one hand near their face. The lighting is moody and atmospheric, creating strong shadows and highlighting the subject's thoughtful expression. The composition and style is reminiscent of formal academic portraits from that era, with the dark background adding to the gravitas of the image. The photograph appears to have a soft focus quality typical of early photographic techniques.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-04

The image is a black-and-white photograph of a man in academic regalia, likely taken in the early 20th century. The man is seated and appears to be in a contemplative pose, with his hand resting on his chin.

- The man is wearing a dark-colored academic robe with a white sash or stole draped over his shoulders.

- The robe has a high collar and is fastened at the neck with a small pin or clasp.

- The sash or stole is made of a thick, white fabric and is draped over the man's shoulders in a diagonal pattern.

- The man is also wearing a dark-colored mortarboard cap, which is placed on top of his head at a slight angle.

- The cap is made of a stiff, black material and has a flat top and a narrow brim.

- It is adorned with a small, white tassel hanging from the front.

- The background of the image is dark and out of focus, but it appears to be a room with a wall and possibly a window or door.

- The wall is painted a light color, and there is a faint outline of a window or door frame visible behind the man.

- The overall atmosphere of the image is one of quiet contemplation, with the man's thoughtful expression and the dark, muted colors of the background creating a sense of introspection.

Overall, the image suggests that the man is a scholar or academic who has achieved a significant milestone, such as earning a degree or receiving an award. The formal attire and dignified pose convey a sense of respect and admiration for the man's accomplishments.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-04

This image is a black-and-white photograph of a man in academic attire, likely taken in the early 20th century. The man sits with his left hand resting on his chin and his right arm resting on his leg, exuding a contemplative demeanor.

He wears a dark robe with a white stole draped over his shoulders, which is characteristic of academic regalia. A ring adorns his left hand, adding a touch of elegance to his overall appearance. The background of the image is dark, with subtle hints of light that suggest the presence of a window or other source of illumination.

In the top-left corner of the image, a handwritten notation reads "March 1915," providing a specific date for the photograph. The overall atmosphere of the image is one of quiet reflection, as if the subject is lost in thought. The use of black and white photography adds a sense of timelessness to the image, making it feel like a relic from another era.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-05-28

A black-and-white photo shows a man wearing a graduation gown and hat. He is sitting on a chair and seems to be posing for a photo. He is wearing glasses and a ring and has his hand on his chin. The background is dark, and the light is focused on the man. The photo has a vintage look, and the image is slightly blurry.

Created by amazon.nova-pro-v1:0 on 2025-05-28

The image is a monochromatic photograph of a man wearing a graduation gown, cap, and tie. The man is wearing glasses and a ring on his right hand. He is sitting on a chair, and his right hand is on his chin, while his left hand is resting on his lap. The man's facial expression appears serious and contemplative. The background is dark, and the focus is on the man.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-01

Here's a description of the image:

Overall: The image is a portrait of a man in academic attire. It's a black and white photograph, likely from the early 20th century, given the style. The lighting creates a dramatic effect, with strong shadows.

The Man:

- He is seated and has a thoughtful, serious expression.

- He is wearing round spectacles.

- He is resting his chin on his right hand, fingers to his lips.

- He is wearing a dark suit, a white collared shirt, and a ceremonial academic robe or gown with a white collar or cape.

- He appears to be wearing a cap with a small tassel.

- A wedding band is visible on the ring finger.

Setting & Composition:

- The background is very dark, which makes the subject stand out.

- He's likely seated in a chair.

- The photograph seems to be a studio portrait.

Style: The image evokes a sense of formality and gravitas, typical of academic or professional portraits of the era. The lighting emphasizes the man's face and expression, drawing the viewer's attention to his thoughtful pose.

Created by gemini-2.0-flash on 2025-05-01

Here is a description of the image:

This is a vintage black and white portrait of a man in academic attire, likely taken in the early to mid-20th century. The man is seated, facing slightly to the left of the frame, and looking directly at the viewer with a thoughtful expression. He is wearing a graduation robe with a white collar and what appears to be a mortarboard cap.

His right hand is raised to his chin in a contemplative pose, and he is wearing glasses. The lighting is subdued, creating a sense of seriousness and depth. The background is dark and indistinct, which draws the viewer's attention to the subject's face and demeanor. The overall image has a classic and formal feel, suggesting a portrait taken for academic or professional purposes.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-01

The image is a black-and-white portrait photograph of a man. He is seated and appears to be in a contemplative pose, with his hand resting on his chin. The man is wearing glasses and is dressed in what seems to be academic regalia, including a robe and a hood, which suggests he may be a scholar, professor, or academic. The background is dark, emphasizing the subject and creating a dramatic and thoughtful atmosphere. The lighting is soft and focused on the man's face, highlighting his expression and features. The photograph has an old, possibly vintage, feel to it, indicated by the grainy texture and the style of the clothing and pose.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-06

This image is a black-and-white portrait of a man wearing academic regalia, indicating he is likely a graduate or faculty member. The man is seated and appears to be in a thoughtful or contemplative pose, with one hand resting on his chin. He is wearing glasses and has a serious expression. The lighting is dramatic, with a strong light source illuminating his face from the front, creating deep shadows behind him. The background is dark and out of focus, which draws attention to the subject. The photograph has a vintage quality, suggesting it is from an earlier time period.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-06

This is a black-and-white portrait of a man, seated and gazing thoughtfully at the camera. He is wearing a dark academic gown with a white sash or stole draped over his shoulders, suggesting a formal or scholarly context. His right hand is raised, with his fingers near his face, conveying a contemplative or introspective pose. The lighting is dramatic, with high contrast, emphasizing his features and the texture of his clothing. The background is dark and unadorned, which further focuses attention on the subject. The image has a vintage quality, indicating it may have been taken in the early 20th century.